Cleaning Download Folder

One of the messiest places on a developer's computer is the Downloads folder. When we write a blog post or work on a project, we download images and save them with ugly, random names like asdfg.jpg. This Python script cleans your Downloads folder by renaming files and deleting certain files based on a condition.

Libraries: os

import os

folder_location = 'C:\\Users\\user\\Downloads\\demo'
os.chdir(folder_location)
list_of_files = os.listdir()

## Selecting All Images
images = [content for content in list_of_files if content.lower().endswith(('.png', '.jpg', '.jpeg'))]
for index, image in enumerate(images):
    # Keep the original extension so a .jpg is not mislabeled as .png
    extension = os.path.splitext(image)[1].lower()
    new_name = f'{index}{extension}'
    # Never overwrite an existing file (os.rename silently replaces files on Linux/macOS)
    if os.path.exists(new_name):
        print(f'Skipping {image}: {new_name} already exists')
        continue
    os.rename(image, new_name)

## Deleting All Images
################## Write Your Script Here ######## Try To Create Your Own Code

Sending Text Messages

There are many text message services available on the internet, such as Twilio and Fast2SMS. Fast2SMS provides 50 free messages and a prebuilt template for connecting your script to its API. This script lets you send an SMS to any number directly from the command line.

import requests

def send_sms(number, message):
    url = 'https://www.fast2sms.com/dev/bulk'
    params = {
        'authorization': 'FIND_YOUR_OWN',  # your Fast2SMS API key
        'sender_id': 'FSTSMS',
        'message': message,
        'language': 'english',
        'route': 'p',
        'numbers': number
    }
    response = requests.get(url, params=params)
    dic = response.json()
    # print(dic)  # uncomment to inspect the full API response
    return dic.get('return')

# Keep the number as a string so leading zeros or a country code are not lost
num = input("Enter The Number:\n").strip()
msg = input("Enter The Message You Want To Send:\n")
s = send_sms(num, msg)
if s:
    print("Successfully sent")
else:
    print("Something went wrong..")

Converting seconds to hours

When a project needs to turn a number of seconds into hours, minutes, and seconds, you can use the following Python script. It returns the result as an H:MM:SS string.

def convert(seconds):
    # Wrap around at 24 hours, so the result reads as a time of day
    seconds = seconds % (24 * 3600)
    hour = seconds // 3600
    seconds %= 3600
    minutes = seconds // 60
    seconds %= 60
    return "%d:%02d:%02d" % (hour, minutes, seconds)

# Driver program
n = 12345
print(convert(n))  # 3:25:45
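Going from hours to seconds is a single multiplication, and the reverse split can be written with the built-in divmod() instead of repeated // and %. Here is a minimal sketch of both directions. The function names are illustrative, and unlike convert() this version does not wrap at 24 hours.

```python
def hours_to_seconds(hours, minutes=0, seconds=0):
    """Convert an hours/minutes/seconds duration to a total number of seconds."""
    return hours * 3600 + minutes * 60 + seconds


def seconds_to_hms(total_seconds):
    """Split seconds into (hours, minutes, seconds) without wrapping at 24 hours."""
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return hours, minutes, seconds


print(hours_to_seconds(3, 25, 45))             # 12345
print("%d:%02d:%02d" % seconds_to_hms(12345))  # 3:25:45
```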

Raising a number to the power

Another popular Python script calculates the power of a number, for example 2 to the power of 4. There are at least three ways to do this: math.pow(), the built-in pow(), and the ** operator. Here is the script.

import math

# Assign values to x and n
x = 4
n = 3

# Method 1: the ** operator
power = x ** n
print("%d to the power %d is %d" % (x, n, power))

# Method 2: the built-in pow()
power = pow(x, n)
print("%d to the power %d is %d" % (x, n, power))

# Method 3: math.pow() always returns a float
power = math.pow(x, n)
print("%d to the power %d is %5.2f" % (x, n, power))
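Two details distinguish these methods. math.pow() always converts to float, while ** and pow() keep integer results exact. The built-in pow() also accepts an optional third argument that computes the power modulo a number. Here is a short sketch; the numbers are arbitrary examples.

```python
import math

x, n = 4, 3

# ** and pow() keep integers as int; math.pow() converts to float
print(x ** n, pow(x, n), math.pow(x, n))   # 64 64 64.0

# Floats cannot hold every large integer exactly
big_exact = 3 ** 40
big_float = math.pow(3, 40)
print(big_exact == int(big_float))          # False

# Three-argument pow(): (x ** n) % m, computed without building x ** n first
print(pow(x, n, 5))                         # 4
```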

If/else statement

This is arguably one of the most used statements in Python. It lets your code run a block of statements only when a certain condition is met. Unlike C-style languages, Python does not use curly braces for blocks; the indentation shows which lines belong to each branch. Here is a simple if/else script.

# Assign a value
number = 50

# Check whether the number is 50 or more
if number >= 50:
    print("You have passed")
else:
    print("You have not passed")
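When there are more than two outcomes, elif adds further conditions. Below is a small sketch that extends the pass/fail example. The grade bands (80 and 50) are made-up values for illustration.

```python
def grade(score):
    # Conditions are checked top to bottom; only the first true branch runs
    if score >= 80:
        return "Distinction"
    elif score >= 50:
        return "Pass"
    else:
        return "Fail"


for number in (95, 50, 49):
    print(number, grade(number))

# A conditional expression fits a simple two-way choice on one line
number = 50
print("You have passed" if number >= 50 else "You have not passed")
```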

Convert images to JPEG

Some systems and upload forms accept only JPEG images, so you may need to convert PNG files first. This Python script automates the conversion. It uses the Pillow library (pip install Pillow).

import os
import sys
from PIL import Image

if len(sys.argv) > 1:
    if os.path.exists(sys.argv[1]):
        im = Image.open(sys.argv[1])
        # Replace the original extension: photo.png -> photo.jpg
        target_name = os.path.splitext(sys.argv[1])[0] + ".jpg"
        # JPEG has no alpha channel, so convert to RGB first (transparency is discarded)
        rgb_im = im.convert('RGB')
        rgb_im.save(target_name)
        print("Saved as " + target_name)
    else:
        print(sys.argv[1] + " not found")
else:
    print("Usage: convert2jpg.py <file>")

Download Google images

If you are working on a project that needs many images, a Python script can download them from Google Images for you, hundreds at a time. However, make sure you do not violate copyright terms.

Read battery level of Bluetooth device

This script reads the battery level of your Bluetooth headset, which is especially useful when your PC does not display it. It does not support all Bluetooth headsets, and you need Docker installed to run it.

Delete Telegram messages

Let’s face it: messaging apps take up a lot of your device’s storage space, and Telegram is no different. This script deletes all messages in a Telegram supergroup. To run it, you need to provide the supergroup’s details.

Get song lyrics

This is another popular Python script; it scrapes song lyrics from the Genius site. It primarily works with Spotify, but other media players that support DBus MediaPlayer2 can use it too. With it, you can sing along to your favorite songs.

Heroku hosting

Heroku is a popular hosting platform used by thousands of developers. It used to offer a free tier for building apps, but that tier was discontinued in November 2022. With this script, you can host your Python applications and scripts on Heroku.

GitHub activity

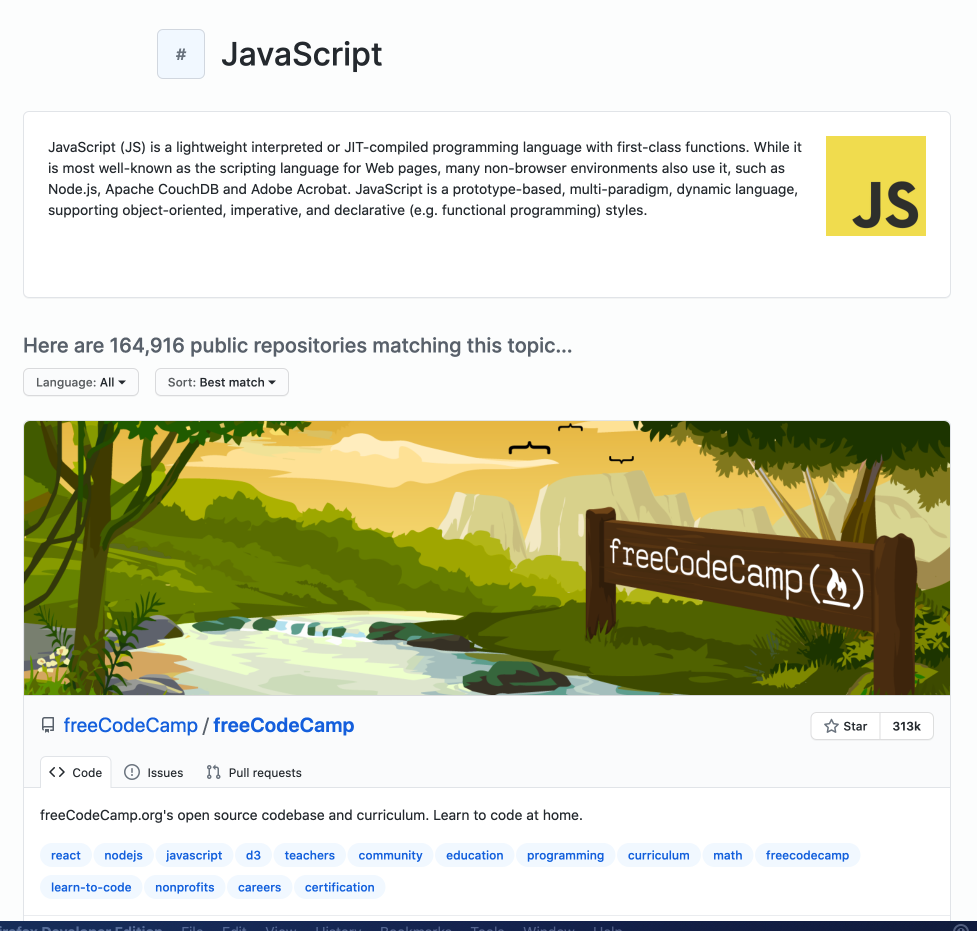

If you contribute to open source projects, it is a good idea to keep a record of your contributions. That way you can track your work and also present it professionally to other people. With this script, you can generate a detailed activity graph.

Removing duplicates from a list
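Here is a minimal sketch of removing duplicate items from a list. dict.fromkeys() keeps the first occurrence of each item in its original order, because dictionaries preserve insertion order since Python 3.7. set() is shorter but does not preserve order. Both approaches require the items to be hashable.

```python
def remove_duplicates(items):
    """Return a new list with duplicates removed, keeping first occurrences in order."""
    return list(dict.fromkeys(items))


languages = ["Python", "Java", "Python", "Go", "Java"]
print(remove_duplicates(languages))  # ['Python', 'Java', 'Go']
print(sorted(set(languages)))        # ['Go', 'Java', 'Python'] (set() loses the order)
```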

When building large apps or working on projects, it is common to end up with duplicate items in a list. Duplicates make the data harder to work with and make your code look sloppy, but Python can remove them in a single line.

Sending emails
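Here is a minimal sketch of sending an email using only the standard library (smtplib and email.message.EmailMessage). The addresses, the SMTP host, port 587, and the credentials are hypothetical placeholders for your provider's settings.

```python
import smtplib
from email.message import EmailMessage


def build_message(sender, recipient, subject, body):
    """Create a plain-text email message."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_message(msg, host, port, username, password):
    """Send msg through an SMTP server that supports STARTTLS (commonly port 587)."""
    with smtplib.SMTP(host, port) as server:
        server.starttls()  # encrypt the connection before logging in
        server.login(username, password)
        server.send_message(msg)


if __name__ == "__main__":
    message = build_message("me@example.com", "you@example.com",
                            "Weekly report", "Hi,\n\nHere is this week's summary.\n")
    print(message)
    # Hypothetical server and credentials: replace with your provider's settings
    # send_message(message, "smtp.example.com", 587, "me@example.com", "APP_PASSWORD")
```

Keeping message construction separate from sending lets you inspect or test an email without contacting a server.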

Email is a crucial communication channel for any business. With Python, your sites and web apps can send emails reliably. Businesses rarely want to send each email by hand and prefer to automate the process; a script can also let you choose which emails to reply to.

Find specific files on your system

Often, you forget the name or location of a file on your system, and clicking through folder after folder to find it wastes time. Programs exist that help you search for files, but a script lets you automate the search and choose exactly which files and file types to look for. For example, to search for MP3 files, you can use this script:

import fnmatch
import os

rootPath = '/'  # where the search starts; '/' scans the whole drive and can take a long time
pattern = '*.mp3'

for root, dirs, files in os.walk(rootPath):
    for filename in fnmatch.filter(files, pattern):
        print(os.path.join(root, filename))
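pathlib can express the same search in fewer lines. This sketch wraps it in a reusable generator. The function name is illustrative, and the Music folder in the usage example is just a sample starting point.

```python
from pathlib import Path


def find_files(root, pattern):
    """Yield files under root whose names match a glob pattern such as '*.mp3'."""
    # rglob() walks every subdirectory, like os.walk() combined with fnmatch.filter()
    for path in Path(root).rglob(pattern):
        if path.is_file():
            yield path


if __name__ == "__main__":
    # Starting from a specific folder is usually much faster than scanning from '/'
    music = Path.home() / "Music"
    if music.is_dir():
        for path in find_files(music, "*.mp3"):
            print(path)
```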

Generating random passwords

Passwords protect the privacy of app and website users and help stop cyber criminals from using their accounts. If you build an app or website, you may want it to generate strong random passwords. This script generates them for you:

import secrets
import string

characters = string.ascii_letters + string.punctuation + string.digits
# secrets uses a cryptographically secure source; the random module is not meant for passwords
length = 8 + secrets.randbelow(9)  # 8 to 16 characters, like randint(8, 16)
password = "".join(secrets.choice(characters) for _ in range(length))

print(password)
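A purely random draw can occasionally produce a password with no digits or no symbols. A common fix, similar to the recipe in the secrets module documentation, is to keep drawing until every character class appears. Here is a sketch; the default length of 16 is an assumption.

```python
import secrets
import string


def generate_password(length=16):
    """Return a random password containing lowercase, uppercase, digit and punctuation characters."""
    if length < 4:
        raise ValueError("length must be at least 4 to include every character class")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        password = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until each character class is represented at least once
        if (any(c.islower() for c in password)
                and any(c.isupper() for c in password)
                and any(c.isdigit() for c in password)
                and any(c in string.punctuation for c in password)):
            return password


print(generate_password())
```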

Print odd numbers
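Here is a minimal sketch of printing the odd numbers in a range. Once the start is moved to an odd number, range() with a step of 2 does the work. The function name and the example bounds are illustrative.

```python
def odd_numbers(start, end):
    """Return the odd numbers from start to end, inclusive."""
    first = start if start % 2 == 1 else start + 1  # move to the first odd number
    return list(range(first, end + 1, 2))


print(odd_numbers(1, 15))   # [1, 3, 5, 7, 9, 11, 13, 15]
print(odd_numbers(10, 20))  # [11, 13, 15, 17, 19]
```

Python's % always returns a non-negative result for a positive divisor, so the start adjustment also works for negative numbers.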

Some projects require you to print the odd numbers within a specific range. Doing this by hand is time-consuming and error-prone, so it is worth automating with a short script.

Get date value
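Here is a minimal sketch using the standard datetime module. It reads today's date, creates a custom date, and formats or parses dates with strftime() and strptime(). The specific dates and format strings are only examples.

```python
from datetime import date, datetime

# Read the current date
today = date.today()
print("Today is", today.isoformat())
print(today.strftime("%A, %d %B %Y"))

# Set a custom date value
custom = date(2021, 12, 25)
print(custom.strftime("%d/%m/%Y"))  # 25/12/2021

# Parse a date from a string with a known format
parsed = datetime.strptime("2021-12-25", "%Y-%m-%d").date()
print(parsed == custom)             # True
```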

Python lets you format date values in many ways. With the datetime module, a script can read the current date and set a custom date value.

Removing items from a list

You’ll often need to modify lists in your projects. Python lets you do this with the insert() and remove() methods. Here is a script you can use:

# Declare a fruit list

fruits = ["Mango","Orange","Guava","Banana"]

# Insert an item in the 2nd position

fruits.insert(1, "Grape")

# Displaying list after inserting

print("The fruit list after insert:")

print(fruits)

# Remove an item

fruits.remove("Guava")

# Print the list after delete

print("The fruit list after delete:")

print(fruits)
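remove() works by value. When you know an item's position instead, pop() and del remove by index. Here is a short sketch with a fruit list; the contents are just an example.

```python
fruits = ["Mango", "Grape", "Orange", "Banana"]

# remove() deletes the first matching value and raises ValueError if it is missing
if "Kiwi" in fruits:
    fruits.remove("Kiwi")

# pop() removes by index and returns the item (the last item by default)
last = fruits.pop()
print(last, fruits)    # Banana ['Mango', 'Grape', 'Orange']

# del removes by index or slice without returning anything
del fruits[0]
print(fruits)          # ['Grape', 'Orange']
```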

Count list items

The count() method returns how many times a substring appears in a string. You pass it the substring that Python should search for. Here is a script to help you do so.

# Define the string

string = 'Python Bash Java PHP PHP PERL'

# Define the search string

search = 'P'

# Store the count value

count = string.count(search)

# Print the formatted output

print("%s appears %d times" % (search, count))
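count() also exists on lists, where it counts matching elements rather than substrings. collections.Counter counts every distinct item at once. Here is a sketch that uses the same words as a list.

```python
from collections import Counter

languages = ["Python", "Bash", "Java", "PHP", "PHP", "PERL"]

# list.count() counts how many elements equal the given value
print(languages.count("PHP"))   # 2

# Counter tallies every distinct element in one pass
counts = Counter(languages)
print(counts.most_common(1))    # [('PHP', 2)]
```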

Text grabber

With this Python script, you can take a screenshot and copy the text in it.

Tweet search

Ever searched for a tweet to no avail? Annoying, right? Let this script do the legwork for you.

Anti-scraper code that crashes the scraper's server

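Many people write their scrapers with Requests. If you have read the Requests documentation, you may have noticed this sentence in the Binary Response Content[1] section:

The gzip and deflate transfer-encodings are automatically decoded for you.

In other words, Requests automatically decodes data that was compressed with gzip or deflate. A web server may use gzip to compress large resources, which then travel over the network as compressed binary data. When the client receives the response and finds a Content-Encoding header whose value contains gzip, it first decompresses the data with gzip and only then presents it.

Browsers do this automatically, so users never notice it happening.

Network libraries and scraping frameworks such as requests and Scrapy also do it for you, so you never need to decompress the data a website returns by hand.

This feature was designed as a convenience for developers, but we can also use it to retaliate against scrapers.

First, let's test how a server can return gzip-compressed data.

I created a text file, text.txt, on my disk containing two lines of text, and then compressed it into a .gz file:

cat text.txt | gzip > data.gz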

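Next, we write an HTTP server, server.py, with FastAPI:

from fastapi import FastAPI
from fastapi.responses import FileResponse

app = FastAPI()

@app.get('/')
def index():
    resp = FileResponse('data.gz')
    return resp

Then start the service with the command uvicorn server:app.

If we now request this endpoint with requests, the returned data is garbled, because nothing tells the client that it is compressed.

So we change server.py to tell the client, through a header, that this data is gzip-compressed: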

from fastapi import FastAPI
from fastapi.responses import FileResponse

app = FastAPI()

@app.get('/')
def index():
    resp = FileResponse('data.gz')
    resp.headers['Content-Encoding'] = 'gzip'  # declare that this is gzip-compressed data
    return resp

After this change, restart the server and request it again with requests; the data now displays correctly.

111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111我们可以用5个字符来表示:

192个1。

这就相当于把192个字符压缩成了5个字符,压缩率高达97.4%。

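If we can compress a 1GB file down to 1MB, the server only sends 1MB of binary data, which costs it almost nothing.

But when the client or scraper receives that 1MB, it restores it in memory to the full 1GB of content.

The scraper's memory usage instantly grows by 1GB.

If we make the original data even larger, we can easily fill up all the memory on the scraper's server. In the mild case, the server kills the scraper process; in the worst case, the scraper's server crashes outright.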
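You can measure this amplification safely, at a small scale, with Python's standard gzip module. This minimal sketch compresses 10 MB of zero bytes (the same kind of data the dd commands that follow produce) and decompresses it again, the way an HTTP client does for Content-Encoding: gzip. The 10 MB size is an arbitrary choice.

```python
import gzip

# 10 MB of zero bytes, the same kind of data that dd if=/dev/zero produces
original = b"\0" * (10 * 1024 * 1024)

# What the server stores and sends over the network
compressed = gzip.compress(original)

print(f"original:   {len(original):,} bytes")
print(f"compressed: {len(compressed):,} bytes")
print(f"ratio:      about {len(original) // len(compressed):,}x")

# What an HTTP client does automatically when it sees Content-Encoding: gzip
restored = gzip.decompress(compressed)
print(f"restored:   {len(restored):,} bytes in memory")
```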

Don't think this compression ratio is an exaggeration: a single simple command can generate such a compressed file.

If you are using Linux, run:

dd if=/dev/zero bs=1M count=1000 | gzip > boom.gz

If you are on macOS, run:

dd if=/dev/zero bs=1048576 count=1000 | gzip > boom.gz


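The resulting boom.gz file is only 995KB.

But if we decompress it with gzip -d boom.gz, we get a 1GB file named boom.

Change count=1000 to a larger number and you get an even larger file.

For this demonstration I'll change count to 10 (I don't dare test with 1GB of data for fear of crashing my Jupyter).

The generated boom.gz file is only 10KB.

The memory occupied by the resp object: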

Fancier Output Formatting

https://docs.python.org/3/tutorial/

So far we’ve encountered two ways of writing values: expression statements and the print() function.

(A third way is using the write() method of file objects; the standard output file can be referenced as sys.stdout. See the Library Reference for more information on this.)

Often you’ll want more control over the formatting of your output than simply printing space-separated values.

There are several ways to format output.

To use formatted string literals, begin a string with f or F before the opening quotation mark or triple quotation mark.

Inside this string, you can write a Python expression between { and } characters that can refer to variables or literal values.

>>> year = 2016
>>> event = "Referendum"
>>> f"Results of the {year} {event}"
'Results of the 2016 Referendum'

The str.format() method of strings requires more manual effort.

You’ll still use { and } to mark where a variable will be substituted and can provide detailed formatting directives,

but you’ll also need to provide the information to be formatted.

>>> yes_votes = 42_572_654
>>> no_votes = 43_132_495
>>> percentage = yes_votes / (yes_votes + no_votes)
>>> "{:-9} YES votes {:2.2%}".format(yes_votes, percentage)
' 42572654 YES votes 49.67%'

Finally, you can do all the string handling yourself by using string slicing and concatenation operations to create any layout you can imagine.

The string type has some methods that perform useful operations for padding strings to a given column width.

When you don’t need fancy output but just want a quick display of some variables for debugging purposes, you can convert any value to a string with the repr() or str() functions.

The str() function is meant to return representations of values which are fairly human-readable, while repr() is meant to generate representations which can be read by the interpreter (or will force a SyntaxError if there is no equivalent syntax).

For objects which don’t have a particular representation for human consumption, str() will return the same value as repr().

Many values, such as numbers or structures like lists and dictionaries, have the same representation using either function.

Strings, in particular, have two distinct representations.

Some examples:

>>> s = "Hello, world."
>>> str(s)
'Hello, world.'
>>> repr(s)
"'Hello, world.'"
>>> str(1/7)
'0.14285714285714285'
>>> x = 10 * 3.25
>>> y = 200 * 200
>>> s = "The value of x is " + repr(x) + ", and y is " + repr(y) + "..."
>>> print(s)
The value of x is 32.5, and y is 40000...
>>> # The repr() of a string adds string quotes and backslashes:
>>> hello = "hello, world\n"
>>> hellos = repr(hello)
>>> print(hellos)
'hello, world\n'
>>> # The argument to repr() may be any Python object:
>>> repr((x, y, ("spam", "eggs")))
"(32.5, 40000, ('spam', 'eggs'))"

The string module contains a Template class that offers yet another way to substitute values into strings, using placeholders like $x and replacing them with values from a dictionary, but offers much less control of the formatting.
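Here is a short sketch of string.Template. Placeholders start with $, $$ produces a literal dollar sign, and safe_substitute() leaves unknown placeholders in place instead of raising KeyError. The names and values are made up for illustration.

```python
from string import Template

greeting = Template("$who likes $what")
print(greeting.substitute(who="Tim", what="kung pao"))     # Tim likes kung pao
print(greeting.substitute({"who": "Ann", "what": "tea"}))  # Ann likes tea

# $$ is an escaped dollar sign
print(Template("Price: $$$price").substitute(price=5))    # Price: $5

# substitute() would raise KeyError here; safe_substitute() keeps $amount as-is
print(Template("$who owes $amount").safe_substitute(who="Tim"))  # Tim owes $amount
```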

7.1.1. Formatted String Literals

Formatted string literals (also called f-strings for short) let you include the value of Python expressions inside a string by prefixing the string with f or F and writing expressions as {expression}.

An optional format specifier can follow the expression.

This allows greater control over how the value is formatted.

The following example rounds pi to three places after the decimal:

>>> import math

>>> print(f"The value of pi is approximately {math.pi:.3f}.")

The value of pi is approximately 3.142.

Passing an integer after the ':' will cause that field to be a minimum number of characters wide.

This is useful for making columns line up.

>>> table = {"Sjoerd": 4127, "Jack": 4098, "Dcab": 7678}
>>> for name, phone in table.items():
...     print(f"{name:10} ==> {phone:10d}")
...
Sjoerd     ==>       4127
Jack       ==>       4098
Dcab       ==>       7678

Other modifiers can be used to convert the value before it is formatted.

'!a' applies ascii(), '!s' applies str(), and '!r' applies repr():

>>> animals = "eels"
>>> print(f"My hovercraft is full of {animals}.")
My hovercraft is full of eels.
>>> print(f"My hovercraft is full of {animals!r}.")
My hovercraft is full of 'eels'.

The = specifier can be used to expand an expression to the text of the expression, an equal sign, then the representation of the evaluated expression:

>>> bugs = "roaches"
>>> count = 13
>>> area = "living room"
>>> print(f"Debugging {bugs=} {count=} {area=}")
Debugging bugs='roaches' count=13 area='living room'

See self-documenting expressions for more information on the = specifier.

For a reference on these format specifications, see the reference guide for the Format Specification Mini-Language.
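Here is a quick sketch of a few more specifiers from the mini-language: the thousands separator, alignment with a custom fill character, forced signs, alternate bases, and percentages. The values are arbitrary examples.

```python
value = 1234567.891

print(f"{value:,.2f}")        # 1,234,567.89  (thousands separator, 2 decimals)
print(f"[{'left':<10}]")      # [left      ]  (left-align in 10 characters)
print(f"[{'right':>10}]")     # [     right]  (right-align)
print(f"[{'mid':*^9}]")       # [***mid***]   (center, padded with '*')
print(f"{42:+d} {-42:+d}")    # +42 -42       (always show the sign)
print(f"{255:#x} {255:08b}")  # 0xff 11111111 (hex with prefix, zero-padded binary)
print(f"{0.25:.1%}")          # 25.0%
```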

7.1.2. The String format() Method

Basic usage of the str.format() method looks like this:

>>> print('We are the {} who say "{}!"'.format("knights", "Ni"))

We are the knights who say "Ni!"

The brackets and characters within them (called format fields) are replaced with the objects passed into the str.format() method.

A number in the brackets can be used to refer to the position of the object passed into the str.format() method.

>>> print("{0} and {1}".format("spam", "eggs"))

spam and eggs

>>> print("{1} and {0}".format("spam", "eggs"))

eggs and spam

If keyword arguments are used in the str.format() method, their values are referred to by using the name of the argument.

>>> print("This {food} is {adjective}.".format(
...       food="spam", adjective="absolutely horrible"))
This spam is absolutely horrible.

Positional and keyword arguments can be arbitrarily combined:

>>> print("The story of {0}, {1}, and {other}.".format("Bill", "Manfred",
...                                                    other="Georg"))
The story of Bill, Manfred, and Georg.

If you have a really long format string that you don’t want to split up, it would be nice if you could reference the variables to be formatted by name instead of by position.

This can be done by simply passing the dict and using square brackets '[]' to access the keys.

>>> table = {"Sjoerd": 4127, "Jack": 4098, "Dcab": 8637678}
>>> print("Jack: {0[Jack]:d}; Sjoerd: {0[Sjoerd]:d}; "
...       "Dcab: {0[Dcab]:d}".format(table))
Jack: 4098; Sjoerd: 4127; Dcab: 8637678

This could also be done by passing the table dictionary as keyword arguments with the ** notation.

>>> table = {"Sjoerd": 4127, "Jack": 4098, "Dcab": 8637678}

>>> print("Jack: {Jack:d}; Sjoerd: {Sjoerd:d}; Dcab: {Dcab:d}".format(**table))

Jack: 4098; Sjoerd: 4127; Dcab: 8637678

This is particularly useful in combination with the built-in function vars(), which returns a dictionary containing all local variables.
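Here is a small sketch of that combination; the function and variable names are made up for illustration. Inside a function, vars() with no argument returns the local variables as a dict, and **vars() passes them to format() as keyword arguments.

```python
def phone_entry():
    name = "Jack"
    phone = 4098
    # vars() here returns {'name': 'Jack', 'phone': 4098}
    return "{name}: {phone:d}".format(**vars())


print(phone_entry())  # Jack: 4098
```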

As an example, the following lines produce a tidily aligned set of columns giving integers and their squares and cubes:

>>> for x in range(1, 11):
...     print("{0:2d} {1:3d} {2:4d}".format(x, x*x, x*x*x))
...
 1   1    1
 2   4    8
 3   9   27
 4  16   64
 5  25  125
 6  36  216
 7  49  343
 8  64  512
 9  81  729
10 100 1000

For a complete overview of string formatting with str.format(), see Format String Syntax.

7.1.3. Manual String Formatting

Here’s the same table of squares and cubes, formatted manually:

>>> for x in range(1, 11):
...     print(repr(x).rjust(2), repr(x*x).rjust(3), end=" ")
...     # Note use of "end" on previous line
...     print(repr(x*x*x).rjust(4))
...
 1   1    1
 2   4    8
 3   9   27
 4  16   64
 5  25  125
 6  36  216
 7  49  343
 8  64  512
 9  81  729
10 100 1000

(Note that the one space between each column was added by the way print() works: it always adds spaces between its arguments.)

The str.rjust() method of string objects right-justifies a string in a field of a given width by padding it with spaces on the left.

There are similar methods str.ljust() and str.center().

These methods do not write anything, they just return a new string.

If the input string is too long, they don’t truncate it, but return it unchanged; this will mess up your column lay-out but that’s usually better than the alternative, which would be lying about a value.

(If you really want truncation you can always add a slice operation, as in x.ljust(n)[:n].)

There is another method, str.zfill(), which pads a numeric string on the left with zeros.

It understands about plus and minus signs:

>>> "12".zfill(5)
'00012'
>>> "-3.14".zfill(7)
'-003.14'
>>> "3.14159265359".zfill(5)
'3.14159265359'

7.1.4. Old string formatting

The % operator (modulo) can also be used for string formatting.

Given 'string' % values, instances of % in string are replaced with zero or more elements of values.

This operation is commonly known as string interpolation.

For example:

>>> import math

>>> print("The value of pi is approximately %5.3f." % math.pi)

The value of pi is approximately 3.142.

More information can be found in the printf-style String Formatting section.
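This short sketch shows two details of %-formatting that often trip people up. A mapping on the right lets you name the fields with %(key), and a single tuple value has to be wrapped in another tuple. The data is made up for illustration.

```python
record = {"name": "Ada", "count": 3}

# %(key)s looks the value up in the mapping instead of taking it by position
print("%(name)s has %(count)d items" % record)   # Ada has 3 items

# A tuple on the right is treated as the sequence of values to insert...
point = (3, 4)
try:
    print("point = %s" % point)
except TypeError as error:
    print("TypeError:", error)                    # not all arguments converted ...

# ...so wrap a tuple in a one-element tuple to format it as a single value
print("point = %s" % (point,))                    # point = (3, 4)
```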

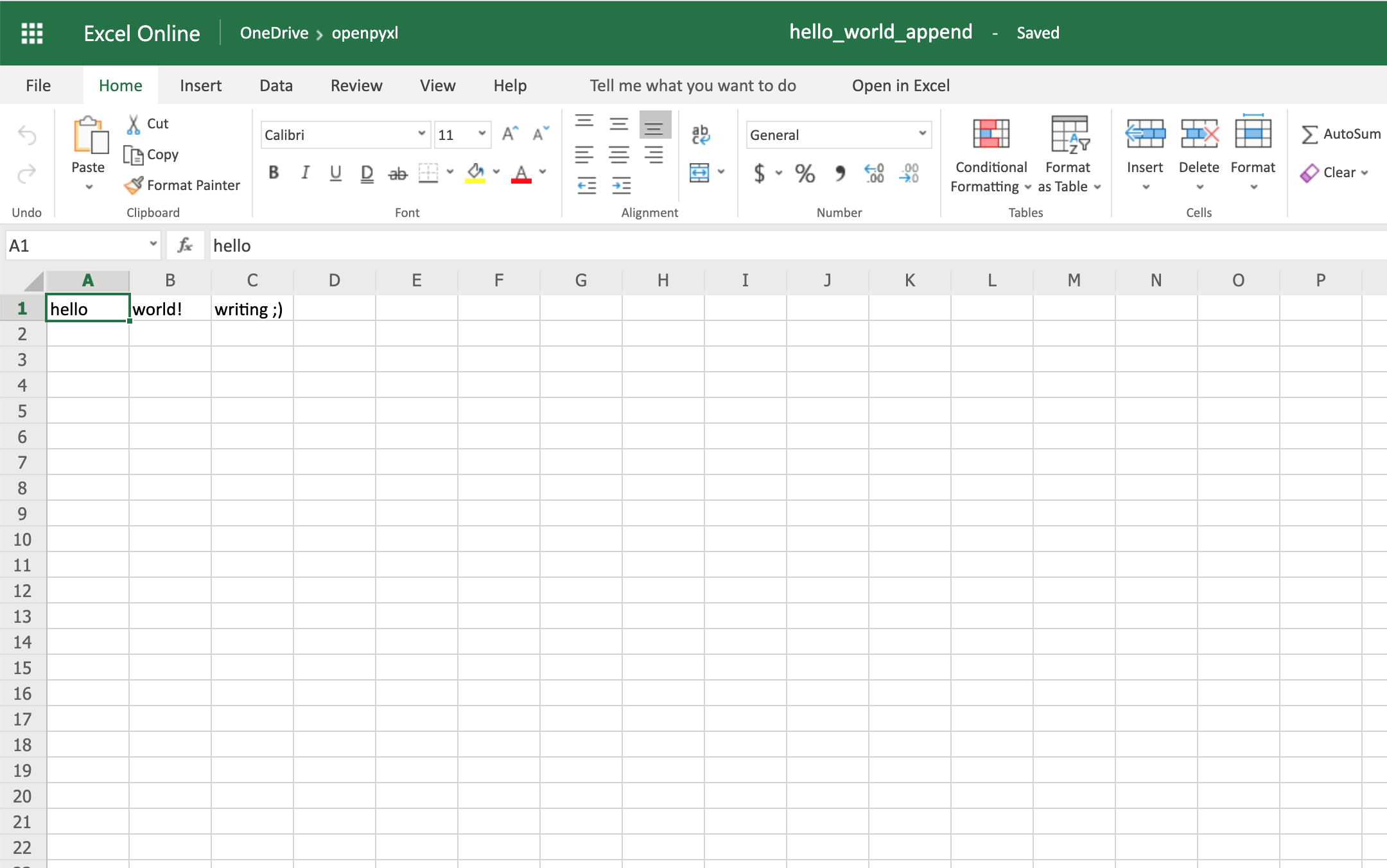

Reading and Writing Files

open() returns a file object, and is most commonly used with two positional arguments and one keyword argument:

open(filename, mode, encoding=None)

>>> f = open("workfile", "w", encoding="utf-8")

The first argument is a string containing the filename.

The second argument is another string containing a few characters describing the way in which the file will be used.

mode can be 'r' when the file will only be read, 'w' for only writing (an existing file with the same name will be erased), and 'a' opens the file for appending; any data written to the file is automatically added to the end.

'r+' opens the file for both reading and writing.

The mode argument is optional; 'r' will be assumed if it’s omitted.

Normally, files are opened in text mode, that means, you read and write strings from and to the file, which are encoded in a specific encoding.

If encoding is not specified, the default is platform dependent (see open()).

Because UTF-8 is the modern de-facto standard, encoding="utf-8" is recommended unless you know that you need to use a different encoding.

Appending a 'b' to the mode opens the file in binary mode.

Binary mode data is read and written as bytes objects.

You can not specify encoding when opening file in binary mode.

In text mode, the default when reading is to convert platform-specific line endings (\n on Unix, \r\n on Windows) to just \n.

When writing in text mode, the default is to convert occurrences of \n back to platform-specific line endings.

This behind-the-scenes modification to file data is fine for text files, but will corrupt binary data like that in JPEG or EXE files.

Be very careful to use binary mode when reading and writing such files.

It is good practice to use the with keyword when dealing with file objects.

The advantage is that the file is properly closed after its suite finishes, even if an exception is raised at some point.

Using with is also much shorter than writing equivalent try-finally blocks:

>>> with open("workfile", encoding="utf-8") as f:
...     read_data = f.read()

>>> # We can check that the file has been automatically closed.
>>> f.closed
True

If you’re not using the with keyword, then you should call f.close() to close the file and immediately free up any system resources used by it.

Warning: Calling f.write() without using the with keyword or calling f.close() might result in the arguments of f.write() not being completely written to the disk, even if the program exits successfully.

After a file object is closed, either by a with statement or by calling f.close(), attempts to use the file object will automatically fail.

>>> f.close()
>>> f.read()
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
ValueError: I/O operation on closed file.

7.2.1. Methods of File Objects

The rest of the examples in this section will assume that a file object called f has already been created.

To read a file’s contents, call f.read(size), which reads some quantity of data and returns it as a string (in text mode) or bytes object (in binary mode).

size is an optional numeric argument.

When size is omitted or negative, the entire contents of the file will be read and returned; it’s your problem if the file is twice as large as your machine’s memory.

Otherwise, at most size characters (in text mode) or size bytes (in binary mode) are read and returned.

If the end of the file has been reached, f.read() will return an empty string ('').

>>> f.read()
'This is the entire file.\n'
>>> f.read()
''

f.readline() reads a single line from the file; a newline character (\n) is left at the end of the string, and is only omitted on the last line of the file if the file doesn’t end in a newline.

This makes the return value unambiguous; if f.readline() returns an empty string, the end of the file has been reached, while a blank line is represented by '\n', a string containing only a single newline.

>>> f.readline()
'This is the first line of the file.\n'
>>> f.readline()
'Second line of the file\n'
>>> f.readline()
''

For reading lines from a file, you can loop over the file object.

This is memory efficient, fast, and leads to simple code:

>>> for line in f:
...     print(line, end="")
...
This is the first line of the file.
Second line of the file

If you want to read all the lines of a file in a list you can also use list(f) or f.readlines().

f.write(string) writes the contents of string to the file, returning the number of characters written.

>>> f.write("This is a test\n")

15

Other types of objects need to be converted – either to a string (in text mode) or a bytes object (in binary mode) – before writing them:

>>> value = ("the answer", 42)

>>> s = str(value) # convert the tuple to string

>>> f.write(s)

18

f.tell() returns an integer giving the file object’s current position in the file represented as number of bytes from the beginning of the file when in binary mode and an opaque number when in text mode.

To change the file object’s position, use f.seek(offset, whence).

The position is computed from adding offset to a reference point; the reference point is selected by the whence argument.

A whence value of 0 measures from the beginning of the file, 1 uses the current file position, and 2 uses the end of the file as the reference point.

whence can be omitted and defaults to 0, using the beginning of the file as the reference point.

>>> f = open("workfile", "rb+")

>>> f.write(b"0123456789abcdef")

16

>>> f.seek(5) # Go to the 6th byte in the file

5

>>> f.read(1)

b'5'

>>> f.seek(-3, 2) # Go to the 3rd byte before the end

13

>>> f.read(1)

b'd'

In text files (those opened without a b in the mode string), only seeks relative to the beginning of the file are allowed (the exception being seeking to the very file end with seek(0, 2)) and the only valid offset values are those returned from the f.tell(), or zero.

Any other offset value produces undefined behaviour.

File objects have some additional methods, such as isatty() and truncate() which are less frequently used; consult the Library Reference for a complete guide to file objects.

7.2.2. Saving structured data with json

Strings can easily be written to and read from a file.

Numbers take a bit more effort, since the read() method only returns strings, which will have to be passed to a function like int(), which takes a string like '123' and returns its numeric value 123.

When you want to save more complex data types like nested lists and dictionaries, parsing and serializing by hand becomes complicated.

Rather than having users constantly writing and debugging code to save complicated data types to files, Python allows you to use the popular data interchange format called JSON (JavaScript Object Notation).

The standard module called json can take Python data hierarchies, and convert them to string representations; this process is called serializing.

Reconstructing the data from the string representation is called deserializing.

Between serializing and deserializing, the string representing the object may have been stored in a file or data, or sent over a network connection to some distant machine.

Note: The JSON format is commonly used by modern applications to allow for data exchange. Many programmers are already familiar with it, which makes it a good choice for interoperability.

If you have an object x, you can view its JSON string representation with a simple line of code:

>>> import json

>>> x = [1, "simple", "list"]

>>> json.dumps(x)

'[1, "simple", "list"]'

Another variant of the dumps() function, called dump(), simply serializes the object to a text file.

So if f is a text file object opened for writing, we can do this:

json.dump(x, f)

To decode the object again, if f is a binary file or text file object which has been opened for reading:

x = json.load(f)

Note: JSON files must be encoded in UTF-8. Use encoding="utf-8" when opening a JSON file as a text file for both reading and writing.
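Here is a minimal round trip through a file that puts dump() and load() together with the UTF-8 advice. The data and the temporary file location are only for illustration.

```python
import json
import os
import tempfile

data = {"name": "Ada", "languages": ["Python", "C"], "active": True}

# Write to a temporary directory so the example does not touch your own files
path = os.path.join(tempfile.mkdtemp(), "data.json")

with open(path, "w", encoding="utf-8") as f:
    json.dump(data, f)

with open(path, encoding="utf-8") as f:
    loaded = json.load(f)

print(loaded == data)  # True
```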

This simple serialization technique can handle lists and dictionaries, but serializing arbitrary class instances in JSON requires a bit of extra effort.

The reference for the json module contains an explanation of this.
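One common approach is sketched below, assuming your class is a dataclass. You pass a default= function to json.dumps() that turns unknown objects into dicts, then rebuild the instance from the decoded dict. The Point class is only an example.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Point:
    x: int
    y: int


p = Point(1, 2)

# json.dumps(p) alone raises TypeError; default= is called for objects json cannot encode
text = json.dumps(p, default=asdict)
print(text)  # {"x": 1, "y": 2}

# Deserializing gives a plain dict, so rebuild the Point from it
restored = Point(**json.loads(text))
print(restored == p)  # True
```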

See also

pickle - the pickle module

Contrary to JSON, pickle is a protocol which allows the serialization of arbitrarily complex Python objects.

As such, it is specific to Python and cannot be used to communicate with applications written in other languages.

It is also insecure by default: deserializing pickle data coming from an untrusted source can execute arbitrary code, if the data was crafted by a skilled attacker.

Python Dictionaries

A dictionary is a collection of key:value pairs that is ordered (as of Python 3.7), changeable, and does not allow duplicate keys. If the same key appears twice, the later value overwrites the earlier one.

thisdict = {
    "brand": "Ford",
    "model": "Mustang",
    "year": 1964
}
print(thisdict["brand"])

Python PDF

pip install fpdf

10 Python Scripts to Automate Your Daily Tasks

Parse and Extract HTML

Qrcode Scanner

Take Screenshots

Create AudioBooks

PDF Editor

👉Mini Stackoverflow

Automate Mobile Phone

Monitor CPU/GPU Temp

Instagram Uploader Bot

Video Watermarker

Parse and Extract HTML

This automation script extracts the HTML from a web page URL and gives you functions to parse that HTML for data. It is a handy tool for web scrapers and for anyone who needs to pull important data out of HTML.

# Parse and Extract HTML
# pip install gazpacho
import gazpacho

# Extract HTML from URL
url = 'https://www.example.com/'
html = gazpacho.get(url)
print(html)

# Extract HTML with Headers
headers = {'User-Agent': 'Mozilla/5.0'}
html = gazpacho.get(url, headers=headers)
print(html)

# Parse HTML
parse = gazpacho.Soup(html)

# Find single tags (mode='first' returns one match, or None, instead of a list)
tag1 = parse.find('h1', mode='first')
tag2 = parse.find('span', mode='first')

# Find multiple tags (mode='all' always returns a list)
tags1 = parse.find('p', mode='all')
tags2 = parse.find('a', mode='all')

# Find tags by class (gazpacho matches attributes, not CSS selectors like '.class')
tag = parse.find('div', {'class': 'test'}, mode='first')

# Find tags by Attribute
tag = parse.find("div", attrs={"class": "test"})

# Extract text from tags
text = parse.find('h1', mode='first').text
text = parse.find('p', mode='all')[0].text

Qrcode Scanner

If you have a lot of QR code images, or just want to scan one, this automation script will help. It uses the qrtools module to decode QR images programmatically.

# Qrcode Scanner
# pip install qrtools
from qrtools import QR

def Scan_Qr(qr_img):
    qr = QR()
    # decode() reads the image file and stores the decoded text in qr.data
    qr.decode(qr_img)
    return qr.data

print("Your Qr Code is: ", Scan_Qr("qr.png"))

Take Screenshots

Now you can take screenshots programmatically with the script below. It can capture the whole screen or just a specific area.

# Grab Screenshot
# pip install pyautogui
# pip install Pillow
from pyautogui import screenshot
import time
from PIL import ImageGrab

# Grab Screenshot of Screen
def grab_screenshot():
    shot = screenshot()
    shot.save('my_screenshot.png')

# Grab Screenshot of Specific Area
def grab_screenshot_area():
    area = (0, 0, 500, 500)  # (left, top, right, bottom) in pixels
    shot = ImageGrab.grab(area)
    shot.save('my_screenshot_area.png')

# Grab Screenshot with Delay
def grab_screenshot_delay():
    time.sleep(5)  # wait 5 seconds, e.g. to switch to another window
    shot = screenshot()
    shot.save('my_screenshot_delay.png')

Create AudioBooks

Tired of converting your PDF books to audiobooks manually? This automation script uses the gTTS module to convert the text of a PDF into audio, one MP3 file per page.

# Create Audiobooks
# pip install gTTS
# pip install PyPDF2
from PyPDF2 import PdfReader
from gtts import gTTS

def create_audio(pdf_file):
    # PdfFileReader, numPages, getPage() and extractText() were removed in PyPDF2 3.x
    reader = PdfReader(pdf_file)
    for page_number, page in enumerate(reader.pages):
        text = page.extract_text() or ''
        if not text.strip():
            continue  # skip pages with no extractable text (e.g. scanned images); gTTS fails on them
        tts = gTTS(text, lang='en')
        tts.save('page' + str(page_number) + '.mp3')

create_audio('book.pdf')

PDF Editor

Use the automation script below to edit your PDF files with Python. It uses the PyPDF4 module, a fork of PyPDF2, and implements common functions such as parsing text, removing pages, and more. It is handy when you have a lot of PDFs to edit or need PDF editing inside a Python project.

# PDF Editor
# pip install PyPDF4
import PyPDF4

# Parse the Text from PDF
def parse_text(pdf_file):
    reader = PyPDF4.PdfFileReader(pdf_file)
    for page in reader.pages:
        print(page.extractText())

# Remove Pages from PDF (page_numbers are 0-based indexes of the pages to drop)
def remove_page(pdf_file, page_numbers):
    reader = PyPDF4.PdfFileReader(pdf_file)
    writer = PyPDF4.PdfFileWriter()
    for index in range(reader.numPages):
        if index not in page_numbers:
            writer.addPage(reader.getPage(index))
    with open('rm.pdf', 'wb') as f:
        writer.write(f)

# Add Blank Page to PDF (inserted at the 0-based position page_number)
def add_page(pdf_file, page_number):
    reader = PyPDF4.PdfFileReader(pdf_file)
    writer = PyPDF4.PdfFileWriter()
    for page in reader.pages:
        writer.addPage(page)
    # Without a width/height, the blank page takes the size of the last page
    writer.insertBlankPage(index=page_number)
    with open('add.pdf', 'wb') as f:
        writer.write(f)

# Rotate Pages
def rotate_page(pdf_file):
    reader = PyPDF4.PdfFileReader(pdf_file)
    writer = PyPDF4.PdfFileWriter()
    for page in reader.pages:
        page.rotateClockwise(90)
        writer.addPage(page)
    with open('rotate.pdf', 'wb') as f:
        writer.write(f)

# Merge PDFs
def merge_pdfs(pdf_file1, pdf_file2):
    pdf1 = PyPDF4.PdfFileReader(pdf_file1)
    pdf2 = PyPDF4.PdfFileReader(pdf_file2)
    writer = PyPDF4.PdfFileWriter()
    for page in pdf1.pages:
        writer.addPage(page)
    for page in pdf2.pages:
        writer.addPage(page)
    with open('merge.pdf', 'wb') as f:
        writer.write(f)

👉Mini Stackoverflow

As programmers, we need Stack Overflow every day, but you no longer have to search Google for it. With the howdoi module you can get Stack Overflow answers directly in your command prompt or terminal while you keep working on a project. Here are some examples you can try:

# Automate Stackoverflow
# pip install howdoi
# Get answers in CMD
# example 1
> howdoi how do i install python3
# example 2
> howdoi selenium Enter keys
# example 3
> howdoi how to install modules
# example 4
> howdoi Parse html with python
# example 5
> howdoi int not iterable error
# example 6
> howdoi how to parse pdf with python
# example 7
> howdoi Sort list in python
# example 8
> howdoi merge two lists in python
# example 9
> howdoi get last element in list python
# example 10
> howdoi fast way to sort list

Automate Mobile Phone

This automation script helps you automate your smartphone with the Android Debug Bridge (ADB) from Python. Below I show how to automate common tasks such as swipe gestures, tapping, calling, sending SMS, and more. You can learn more about ADB and explore other ways to automate your phone. The script assumes adb is installed and on your PATH, and that USB debugging is enabled on the phone.

# Automate Mobile Phones
# Only the standard library is needed
import shlex
import subprocess

def main_adb(cmd):
    # Split the command like a shell would and return adb's text output
    result = subprocess.run(shlex.split(cmd), capture_output=True)
    return result.stdout.decode('utf-8', errors='replace')

# Swipe from (x1, y1) to (x2, y2) over `duration` milliseconds
def swipe(x1, y1, x2, y2, duration):
    cmd = 'adb shell input swipe {} {} {} {} {}'.format(x1, y1, x2, y2, duration)
    return main_adb(cmd)

# Tap or click
def tap(x, y):
    cmd = 'adb shell input tap {} {}'.format(x, y)
    return main_adb(cmd)

# Make a call
def make_call(number):
    cmd = f'adb shell am start -a android.intent.action.CALL -d tel:{number}'
    return main_adb(cmd)

# Open the SMS app with the number and message filled in
def send_sms(number, message):
    # The inner double quotes keep the message as one argument on the phone's shell
    cmd = 'adb shell am start -a android.intent.action.SENDTO -d sms:{} --es sms_body \'"{}"\''.format(number, message)
    return main_adb(cmd)

# Download a file from the phone's /sdcard to the current folder on the PC
def download_file(file_name):
    cmd = 'adb pull /sdcard/{}'.format(file_name)
    return main_adb(cmd)

# Take a screenshot on the phone and copy it to the PC
def screenshot(file_name='screen.png'):
    main_adb('adb shell screencap -p /sdcard/{}'.format(file_name))
    return download_file(file_name)

# Press the power button (toggles the screen on or off)
def power_off():
    cmd = 'adb shell input keyevent 26'
    return main_adb(cmd)

Monitor CPU/GPU Temp

You probably use CPU-Z or other hardware-monitoring software to check your CPU and GPU temperatures, but you can also do it programmatically. This script uses pythonnet and the OpenHardwareMonitor library to read your current CPU and GPU temperatures. You can use it to notify yourself when a temperature threshold is reached, or in your own Python projects. It runs on Windows, needs OpenHardwareMonitorLib.dll in the script's folder, and usually needs administrator rights.

# Get CPU/GPU Temperature
# pip install pythonnet
import time
import clr
clr.AddReference("OpenHardwareMonitorLib")
from OpenHardwareMonitor import Hardware

spec = Hardware.Computer()
spec.GPUEnabled = True
spec.CPUEnabled = True
spec.Open()

def print_temps(hardware_types):
    for hw in spec.Hardware:
        if hw.HardwareType in hardware_types:
            hw.Update()  # refresh the sensor readings
            for sensor in hw.Sensors:
                if "/temperature" in str(sensor.Identifier):
                    print(hw.Name, sensor.Name, sensor.Value)

# Get CPU Temp
def Cpu_Temp():
    while True:
        print_temps([Hardware.HardwareType.CPU])
        time.sleep(1)

# Get GPU Temp
def Gpu_Temp():
    while True:
        print_temps([Hardware.HardwareType.GpuNvidia, Hardware.HardwareType.GpuAti])
        time.sleep(1)

Instagram Uploader Bot

Instagram is a well-known social media platform, and you don't need your smartphone to upload photos or videos to it. You can do it programmatically with the script below.

# Upload Photos and Video on Insta
# pip install instabot
from instabot import Bot

robot = Bot()
robot.login(username="user", password="pass")  # log in once and reuse the session

def Upload_Photo(img):
    robot.upload_photo(img, caption="Medium Article")
    print("Photo Uploaded")

def Upload_Video(video):
    robot.upload_video(video, caption="Medium Article")
    print("Video Uploaded")

def Upload_Story(img):
    robot.upload_story_photo(img)  # stories take no caption
    print("Story Photo Uploaded")

Upload_Photo("img.jpg")
Upload_Video("video.mp4")

Video Watermarker

Add a watermark to your videos with this automation script, which uses MoviePy, a handy module for video editing. The script below shows how to add the watermark; feel free to reuse it. TextClip needs ImageMagick installed.

# Video Watermark with Python
# pip install "moviepy<2.0"   (the moviepy.editor module was removed in MoviePy 2.0)
from moviepy.editor import *

clip = VideoFileClip("myvideo.mp4", audio=True)
width, height = clip.size
text = TextClip("WaterMark", font='Arial', color='white', fontsize=28)
# Put the text on a semi-transparent black bar
set_color = text.on_color(size=(clip.w + text.w, text.h - 10), color=(0, 0, 0), pos=(6, 'center'), col_opacity=0.6)
# Move the bar over time: t is the current time in seconds
set_textPos = set_color.set_pos(lambda t: (max(width / 30, int(width - 0.5 * width * t)), max(5 * height / 6, int(100 * t))))
Output = CompositeVideoClip([clip, set_textPos]).set_duration(clip.duration)
Output.write_videofile("output.mp4", fps=30, codec='libx264')

Macro inside an Excel file using Python

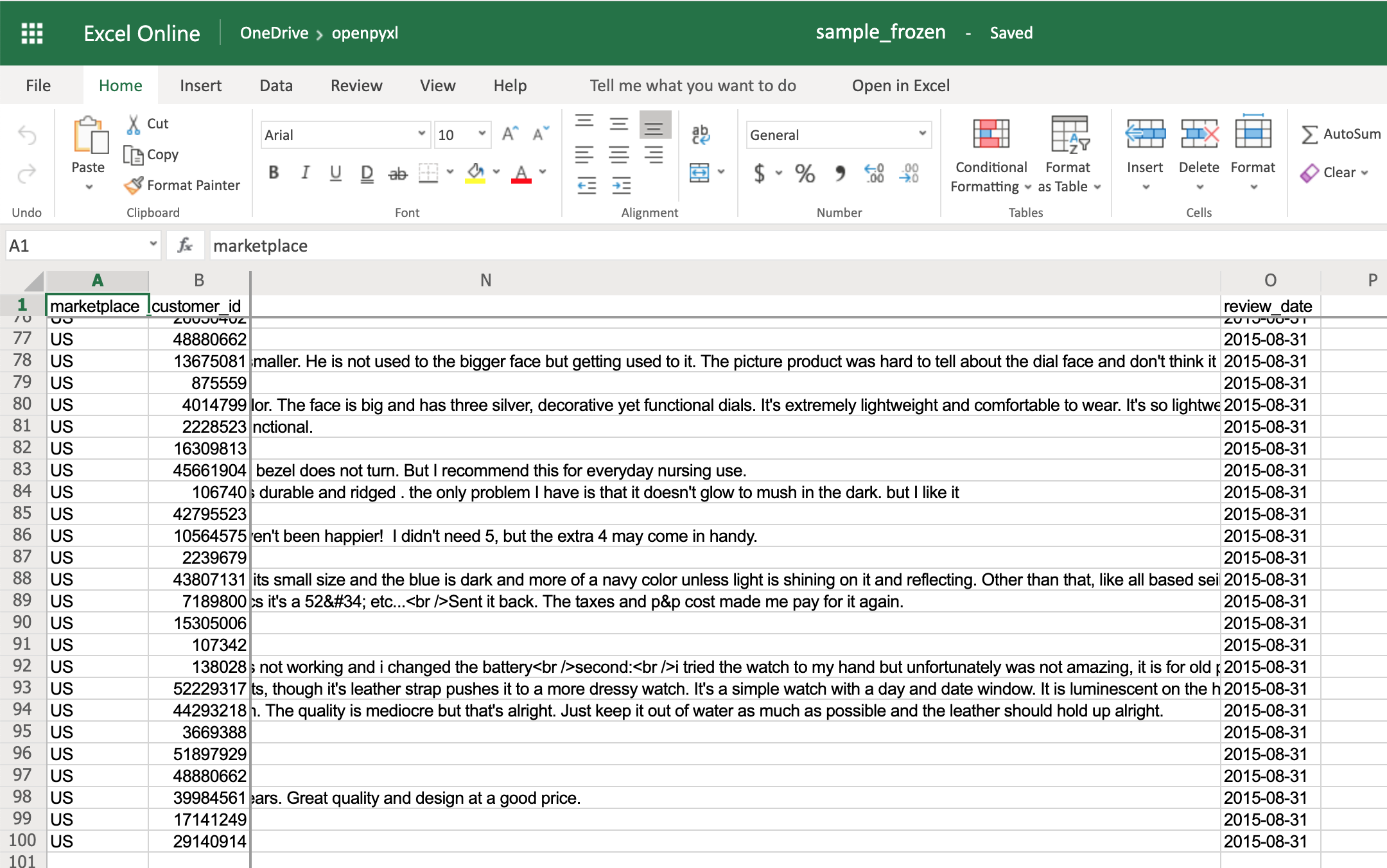

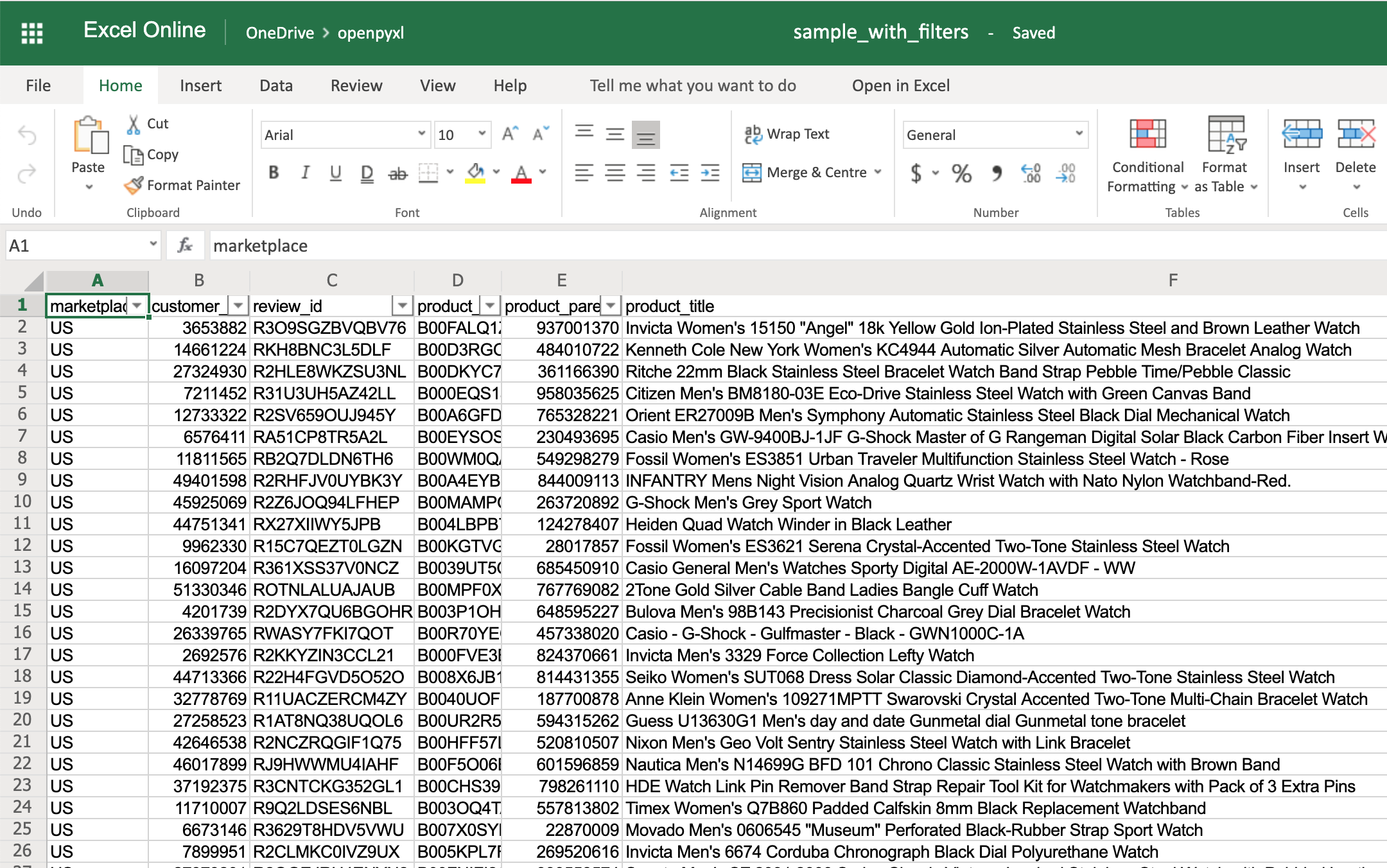

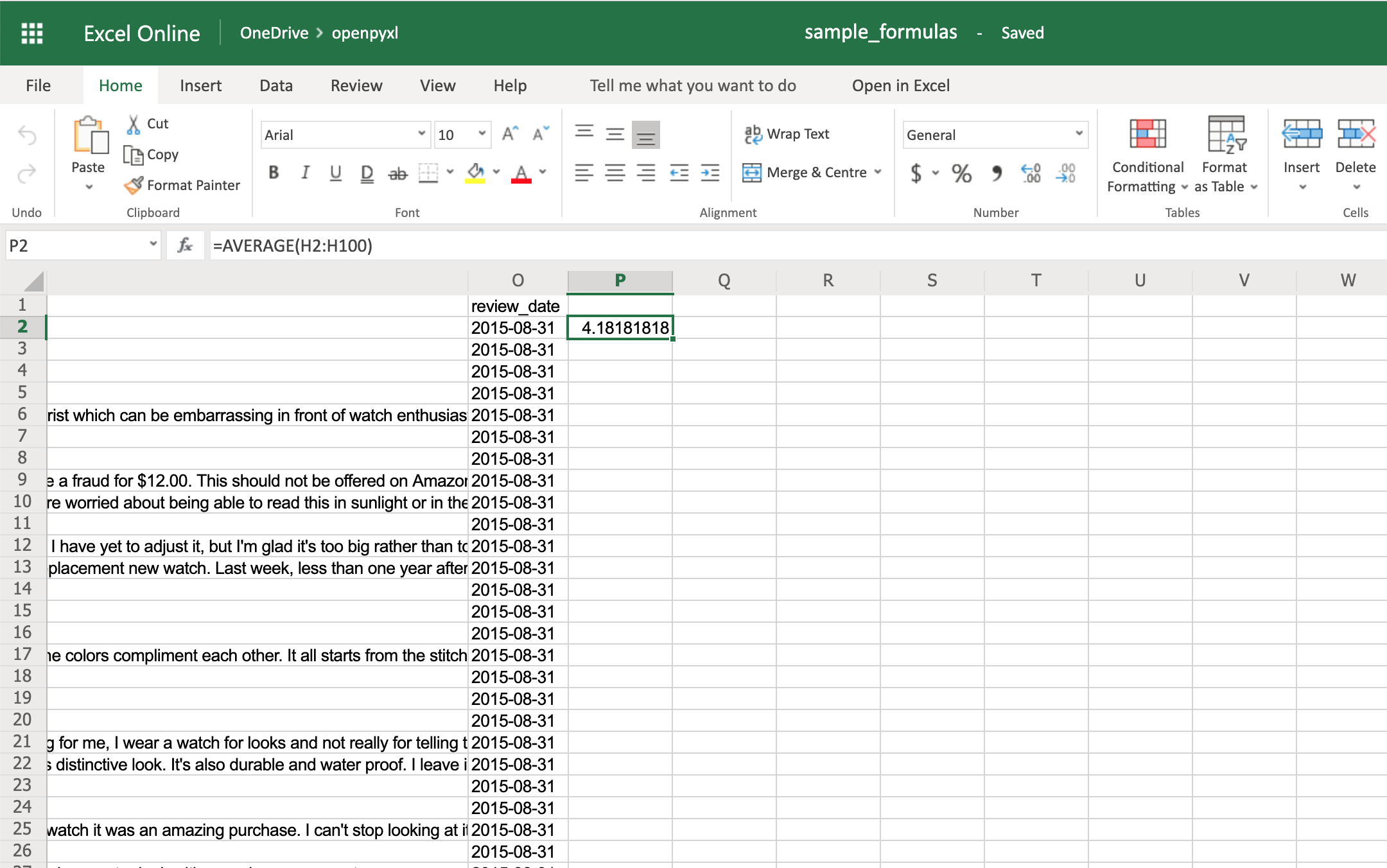

Use the xlwings library, which lets you interact with Excel workbooks and the macros inside them. Install it in your Python environment:

pip install xlwings

Then import it:

import xlwings as xw

Open the workbook that contains the macro with xw.Book(). Macros can only be stored in macro-enabled formats such as .xlsm, not in .xlsx files:

wb = xw.Book('path_to_your_excel_file.xlsm')

Get a handle to a macro with the macro() method of the Book object, replacing 'macro_name' with the name of the macro:

macro = wb.macro('macro_name')

Note that this returns a callable Macro object that runs the VBA procedure; it does not return the macro's source code. Call it to run the macro:

macro()

Close the workbook when you are done:

wb.close()

To list all macros: xlwings has no built-in attribute for this (there is no macro_names attribute). On Windows you can read the VBA source, and the procedure names in it, through the VBA project object model exposed by wb.api, provided "Trust access to the VBA project object model" is enabled in Excel's Trust Center settings.

14 Common Excel Operations in Python
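Since xlwings offers no ready-made macro list, here is a hedged sketch. `read_vba_source` walks the COM object model behind `wb.api` (`VBProject.VBComponents`, `CodeModule.CountOfLines`, `CodeModule.Lines`), which only works on Windows with desktop Excel and trusted VBA project access. `list_procedures` is a hypothetical helper that pulls `Sub`/`Function` names out of the source with a regular expression; both function names are my own, not part of xlwings.

```python
import re

# Matches declarations such as "Sub Foo()", "Public Function Bar(x)", "Private Sub Baz()"
PROC_RE = re.compile(
    r"^\s*(?:Public\s+|Private\s+|Friend\s+)?(?:Static\s+)?(?:Sub|Function)\s+(\w+)",
    re.IGNORECASE | re.MULTILINE,
)


def list_procedures(vba_source):
    """Return the Sub/Function names declared in a block of VBA source."""
    return PROC_RE.findall(vba_source)


def read_vba_source(path):
    """Return {module_name: source} for every VBA component (Windows + Excel only)."""
    import xlwings as xw  # imported here so list_procedures works without Excel

    wb = xw.Book(path)
    try:
        sources = {}
        for component in wb.api.VBProject.VBComponents:
            module = component.CodeModule
            count = module.CountOfLines
            sources[component.Name] = module.Lines(1, count) if count else ""
        return sources
    finally:
        wb.close()


# Usage (needs Excel):
# for name, code in read_vba_source("book.xlsm").items():
#     print(name, list_procedures(code))
```

The regular expression ignores `End Sub` and `Exit Function` lines because they do not start with a declaration keyword.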

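Lookup formula: VLOOKUP

VLOOKUP is probably the most frequently used Excel formula; it is typically used to join two tables on a key. To demonstrate it, I first split the sales table into two tables (the examples assume import pandas as pd and that sale is the sales DataFrame):

df1 = sale[['订单明细号', '单据日期', '地区名称', '业务员名称', '客户分类', '存货编码', '客户名称', '业务员编码', '存货名称', '订单号', '客户编码', '部门名称', '部门编码']]

df2 = sale[['订单明细号', '存货分类', '税费', '不含税金额', '订单金额', '利润', '单价', '数量']]

Task: find the profit (利润) of each order in df1.

The profit column only exists in df2, so it has to be looked up there.

In Excel you would first confirm that the order line number (订单明细号) is unique, then add a column to df1 containing =vlookup(a2,df2!a:h,6,0) and fill it down.

(I won't write out the Excel steps for the remaining 13 operations.)

So how do you do it in Python?

# Check whether 订单明细号 contains duplicates (it doesn't)
df1["订单明细号"].duplicated().value_counts()
df2["订单明细号"].duplicated().value_counts()

df_c = pd.merge(df1, df2, on="订单明细号", how="left")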
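To see the left merge behave like VLOOKUP, here is a minimal sketch with two hypothetical mini tables (the values are made up). The `validate="one_to_one"` argument makes pandas raise a `MergeError` if the key is duplicated, which replaces the manual uniqueness check, and an unmatched key gets NaN, much like VLOOKUP's #N/A.

```python
import pandas as pd

# Hypothetical miniature versions of df1 and df2 (made-up values)
df1 = pd.DataFrame({"订单明细号": [1, 2, 3], "地区名称": ["北京", "上海", "北京"]})
df2 = pd.DataFrame({"订单明细号": [1, 2, 4], "利润": [120.0, -5.0, 300.0]})

# Left join keeps every row of df1 in its original order; order 3 has no match
df_c = pd.merge(df1, df2[["订单明细号", "利润"]], on="订单明细号",
                how="left", validate="one_to_one")
print(df_c)
```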

Pivot table

Task: find the total and the average profit earned by each salesperson in each region.

pd.pivot_table(sale, index="地区名称", columns="业务员名称", values="利润", aggfunc=["sum", "mean"])

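Comparing the differences between two columns

Since every column in this table measures something different, comparing them directly would be meaningless, so I first create a modified copy of the order line number column and compare against it. Task: compare 订单明细号 with 订单明细号2 and show the differences.

sale["订单明细号2"] = sale["订单明细号"]

# Add 1 to the first 10 values of 订单明细号2
sale.iloc[:10, sale.columns.get_loc("订单明细号2")] += 1

# Output the rows whose 订单明细号 does not appear in 订单明细号2
result = sale.loc[~sale["订单明细号"].isin(sale["订单明细号2"])]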

Removing duplicates

Task: remove duplicate values of the salesperson code (业务员编码).

sale.drop_duplicates("业务员编码", inplace=True)

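Handling missing values

First, check which columns of the sales data contain missing values:

# A column whose non-null count is lower than the number of index rows has missing values; here 客户名称 (customer name) has 329 < 335
sale.info()

Task: fill missing values with 0, or delete the rows where the customer code (客户编码) is missing.

In practice, handling missing values can be quite involved, and only simple methods are covered here. For a numeric variable, the mean, median, or mode is the most common fill value; a more sophisticated approach is to predict the missing values from the other columns, for example with a random forest model.

For a categorical variable, filling based on business logic tends to be more accurate.

For example, to fill the missing customer names here, you could use the customer name associated with the most frequent item in the same inventory category (存货分类).

Here we use the simple approach:

# Fill missing values with 0
sale["客户名称"] = sale["客户名称"].fillna(0)

# Delete rows with a missing customer code (dropna returns a new DataFrame)
sale = sale.dropna(subset=["客户编码"])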

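Filtering on multiple conditions

Task: find the orders that salesperson 张爱 sold in the Beijing (北京) region with an order amount greater than 6000.

sale.loc[(sale["地区名称"] == "北京") & (sale["业务员名称"] == "张爱") & (sale["订单金额"] > 6000)]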

Fuzzy filtering

Task: select the rows whose item name (存货名称) contains "三星" (Samsung) or "索尼" (Sony).

sale.loc[sale["存货名称"].str.contains("三星|索尼")]

Subtotals by group

Task: total profit for each salesperson in the Beijing region.

sale.loc[sale["地区名称"] == "北京"].groupby("业务员名称")["利润"].sum()

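Conditional calculations

Task: how many orders have an item name containing "三星" (Samsung) and tax (税费) above 1000? What are the total and the average profit of those orders (or the minimum, maximum, quartiles, and standard deviation)?

samsung = sale.loc[sale["存货名称"].str.contains("三星") & (sale["税费"] > 1000)]
samsung["利润"].describe()  # count, mean, std, min, quartiles, max
samsung["利润"].sum()  # total profit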

Removing whitespace

Task: strip the spaces on both sides of the item name (存货名称).

sale["存货名称"] = sale["存货名称"].str.strip()

Splitting a column

Task: split the document date (单据日期) into separate date and time columns.

sale = pd.merge(sale, pd.DataFrame(sale["单据日期"].str.split(" ", expand=True)), how="inner", left_index=True, right_index=True)

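Replacing outliers

First, use describe() to get a quick look at whether the data contains outliers:

# The output tax (销项税) contains negative values, which normally shouldn't happen, so we treat them as outliers
sale.describe()

Task: replace the outliers with 0.

# Replace every negative order amount with 0
sale.loc[sale["订单金额"] < 0, "订单金额"] = 0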

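Grouping (binning)

Task: based on the distribution of profit, group the regions into "Poor", "Average", "Good", and "Very good". First, of course, look at the profit distribution; here we use quartiles to decide.

sale.groupby("地区名称")["利润"].sum().describe()

Based on the quartiles, regions whose total profit falls in [-9, 7091] are grouped as "Poor", (7091, 10952] as "Average", (10952, 17656] as "Good", and (17656, 37556] as "Very good".

# First build a DataFrame of total profit per region
sale_area = pd.DataFrame(sale.groupby("地区名称")["利润"].sum()).reset_index()

# Set the bin edges and the group labels
bins = [-10, 7091, 10952, 17656, 37556]
groups = ["Poor", "Average", "Good", "Very good"]

# Use cut to assign each region to a group
sale_area["分组"] = pd.cut(sale_area["利润"], bins, labels=groups)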

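Labels based on business logic

Task: flag items with a profit margin (profit / order amount) above 30% as premium items, and items below 5% as ordinary items.

sale.loc[(sale["利润"] / sale["订单金额"]) > 0.3, "label"] = "premium"

sale.loc[(sale["利润"] / sale["订单金额"]) < 0.05, "label"] = "ordinary"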

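There are many more common Excel operations; I have only listed 14 that I use often. If there are other operations you'd like to implement, leave a comment and we can discuss them. I also know my Python isn't very concise: I habitually use loc, where query would often be shorter.

Finally, I don't think it's useful to pit Excel against Python to decide which is better; both are just tools. Excel, the most widely used data-processing tool, has dominated for so many years precisely because it is very good at data processing. Some operations are indeed simpler in Python, but plenty of operations are simpler in Excel.

Take a very simple operation: sum each column and show the totals in a row at the bottom. In Excel you write one sum() for a column and drag it sideways; in Python you have to define a function, because Python checks data types and raises an error on non-numeric data.

In short: whatever tool you use, if it solves the problem, you're a good data analyst!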

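1. For loop
2. While loop
3. If-else statement
4. Merging dictionaries
5. Writing functions
6. One-line recursion
7. Array filtering
8. Exception handling
9. List to dictionary
10. Multiple variable assignment
11. Swapping
12. Sorting
13. Reading files
14. Classes
15. Semicolons
16. Printing
17. Map function
18. Deleting multiple elements from a list
19. Printing patterns
20. Finding prime numbers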


List Comprehension

List comprehension offers a shorter syntax when you want to create a new list based on the values of an existing list. Example: based on a list of fruits, you want a new list containing only the fruits with the letter "a" in the name. Without list comprehension you will have to write a for statement with a conditional test inside:

Example:

fruits = ["apple", "banana", "cherry", "kiwi", "mango"]
newlist = []
for x in fruits:
    if "a" in x:
        newlist.append(x)
print(newlist)

With list comprehension you can do all that with only one line of code:

Example:

fruits = ["apple", "banana", "cherry", "kiwi", "mango"]

newlist = [x for x in fruits if "a" in x]

print(newlist)

The Syntax

newlist = [expression for item in iterable if condition == True]

The return value is a new list, leaving the old list unchanged.

Condition

The condition is like a filter that only accepts the items that evaluate to True.

Example Only accept items that are not "apple":

newlist = [x for x in fruits if x != "apple"]

The condition if x != "apple" will return True for all elements other than "apple", making the new list contain all fruits except "apple".

The condition is optional and can be omitted:

Example With no if statement:

newlist = [x for x in fruits]

Iterable

The iterable can be any iterable object, like a list, tuple, set etc.

Example You can use the range() function to create an iterable:

newlist = [x for x in range(10)]

Same example, but with a condition:

Example Accept only numbers lower than 5:

newlist = [x for x in range(10) if x < 5]

Expression

The expression is the current item in the iteration, but it is also the outcome, which you can manipulate before it ends up as a list item in the new list:

Example Set the values in the new list to upper case:

newlist = [x.upper() for x in fruits]

You can set the outcome to whatever you like:

Example Set all values in the new list to 'hello':

newlist = ['hello' for x in fruits]

The expression can also contain conditions, not like a filter, but as a way to manipulate the outcome:

Example Return "orange" instead of "banana":

newlist = [x if x != "banana" else "orange" for x in fruits]

The expression in the example above says:

"Return the item if it is not banana, if it is banana return orange".

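The same bracket syntax extends to other collection types. This short sketch, reusing the fruits list, shows a dictionary comprehension, a set comprehension, and a nested comprehension that flattens a list of lists (the matrix values are made up for illustration).

```python
fruits = ["apple", "banana", "cherry", "kiwi", "mango"]

# Dictionary comprehension: map each fruit to the length of its name
lengths = {x: len(x) for x in fruits}

# Set comprehension: the distinct first letters (sets have no order)
first_letters = {x[0] for x in fruits}

# Nested comprehension: the for clauses read left to right like nested loops
matrix = [[1, 2], [3, 4], [5, 6]]
flat = [n for row in matrix for n in row]

print(lengths)                # {'apple': 5, 'banana': 6, 'cherry': 6, 'kiwi': 4, 'mango': 5}
print(sorted(first_letters))  # ['a', 'b', 'c', 'k', 'm']
print(flat)                   # [1, 2, 3, 4, 5, 6]
```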
1. For loop

A for loop is a multi-line statement, but in Python we can write it in one line with a list comprehension. Let's take filtering out the values below 250 as an example. Here is the code:

# For loop in one line
mylist = [100, 200, 300, 400, 500]

# Original way
result = []
for x in mylist:
    if x > 250:
        result.append(x)
print(result)  # [300, 400, 500]

# One-line way
result = [x for x in mylist if x > 250]
print(result)  # [300, 400, 500]

2. While loop

This one-liner shows how to write a while loop in one line; here are two ways to do it:

# Method 1: single statement
while True: print(1)  # prints 1 forever

# Method 2: multiple statements
x = 0
while x < 5: print(x); x = x + 1  # 0 1 2 3 4

3. If-else statement

To write an if-else statement in one line, we use the ternary (conditional) expression.

Its syntax is "[on true] if [expression] else [on false]".

The code below shows three examples of one-line if-else logic; to emulate elif, chain several conditional expressions.

# If-else in one line
# Example 1: if else
print("Yes") if 8 > 9 else print("No")  # No

# Example 2: if elif else
E = 2
print("High") if E == 5 else print("Medium") if E == 2 else print("Low")  # Medium

# Example 3: only if
if 3 > 2: print("Exactly")  # Exactly

4. Merging dictionaries

This one-liner shows how to merge two dictionaries into one with a single line of code.

Here are two ways to merge dictionaries.

# Merge Dictionary in One Line

d1 = { 'A': 1, 'B': 2 }

d2 = { 'C': 3, 'D': 4 }

#method 1

d1.update(d2)

print(d1) # {'A': 1, 'B': 2, 'C': 3, 'D': 4}

#method 2

d3 = {**d1, **d2}

print(d3) # {'A': 1, 'B': 2, 'C': 3, 'D': 4}
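Method 1 modifies d1 in place. On Python 3.9 or newer there is also the `|` operator, which, like method 2, builds a new dictionary. A minimal sketch:

```python
d1 = {'A': 1, 'B': 2}
d2 = {'C': 3, 'D': 4}

# Python 3.9+: | returns a new merged dict and leaves d1 unchanged
d3 = d1 | d2
print(d3)  # {'A': 1, 'B': 2, 'C': 3, 'D': 4}

# On duplicate keys the right-hand dict wins, just as with {**d1, **d2}
print({'A': 1} | {'A': 99})  # {'A': 99}
```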

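5. Writing functions

There are two ways to write a function in one line. The first uses a normal def on a single line, with a body such as a ternary expression or a one-line loop.

The second defines the function with lambda. See the example code below.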

#Function in One Line

#method 1

def fun(x): return True if x % 2 == 0 else False

print(fun(2))  # True

#method 2

fun = lambda x : x % 2 == 0

print(fun(2)) # True

print(fun(3)) # False

6. One-line recursion

This snippet shows recursion in one line, using a one-line function definition and a one-line if-else; the example computes Fibonacci numbers.

# Recursion in one line

# Fibonacci example with one-line recursion

def Fib(x): return 1 if x in {0, 1} else Fib(x-1) + Fib(x-2)

print(Fib(5)) # 8

print(Fib(15)) # 987
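The one-line Fib recomputes the same values over and over, so its run time grows exponentially with x. A minimal sketch of the usual fix keeps the one-line body and adds memoization with `functools.lru_cache`:

```python
from functools import lru_cache


# Cache every result so each Fib(x) is computed only once
@lru_cache(maxsize=None)
def Fib(x): return 1 if x in {0, 1} else Fib(x - 1) + Fib(x - 2)


print(Fib(15))  # 987
print(Fib(80))  # returns instantly; the uncached version would take far too long
```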

7. Array filtering

A Python list can be filtered in one line with a list comprehension; let's filter the even numbers as an example.

# Array filtering in one line
mylist = [2, 3, 5, 8, 9, 12, 13, 15]

# Normal way
result = []
for x in mylist:
    if x % 2 == 0:
        result.append(x)
print(result)  # [2, 8, 12]

#One Line Way

result = [x for x in mylist if x % 2 == 0]

print(result) # [2, 8, 12]

8. Exception handling

We use exception handling to deal with runtime errors in Python. Did you know you can write a try/except statement in one line? The exec() function makes it possible.

# Exception handling in one line
# Original way
try:
    print(x)
except NameError:
    print("Error")

# One-line way
exec('try: print(x)\nexcept NameError: print("Error")')  # Error
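When all you want is to ignore a specific error, the standard library's `contextlib.suppress` gives a one-line `with` statement without building code as a string for exec(). Note the difference: it prints nothing, the listed exception is simply swallowed. A small sketch:

```python
from contextlib import suppress

values = [1, 0, 2]
out = []
for v in values:
    # A ZeroDivisionError for v == 0 is silently skipped
    with suppress(ZeroDivisionError): out.append(10 // v)

print(out)  # [10, 5]
```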

9. List to dictionary

We can convert a list into a dictionary in one line with Python's enumerate(): pass the list to enumerate() and convert the result to a dictionary with dict().

# Dictionary in one line
names = ["John", "Peter", "Mathew", "Tom"]
mydict = dict(enumerate(names))

print(mydict) # {0: 'John', 1: 'Peter', 2: 'Mathew', 3: 'Tom'}

10. Multiple variable assignment

Python allows assigning several variables in one line; the code below shows how.

# Multiple variable assignment

#Normal Way

x = 5

y = 7

z = 10

print(x , y, z) # 5 7 10

#One Line way

a, b, c = 5, 7, 10

print(a, b, c) # 5 7 10

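11. Swapping

Swapping values is a common programming task that traditionally needs a third variable, temp, to hold one of the values.

This one-liner shows how to swap two values in one line without any temporary variable.

# Swap in one line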

#Normal way

v1 = 100

v2 = 200

temp = v1

v1 = v2

v2 = temp

print(v1, v2) # 200 100

# One Line Swapping

v1, v2 = v2, v1

print(v1, v2) # 200 100

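12. Sorting

Sorting is a common programming problem, and Python has several built-in ways to solve it; the code below shows how to sort in one line.

# Sort in one line
mylist = [32, 22, 11, 4, 6, 8, 12]

# Method 1: sort() sorts the list in place
mylist.sort()
print(mylist)  # [4, 6, 8, 11, 12, 22, 32]

# Method 2: sorted() returns a new sorted list
print(sorted(mylist))  # [4, 6, 8, 11, 12, 22, 32]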

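13. Reading a file

You can also read a file in one line, without a with statement or the usual read methods.

# Read file in one line
# Normal way
with open("data.txt", "r") as file:
    data = file.readline()  # reads only the first line
print(data)  # Hello world

# One-line way: a list of all lines with the newline characters stripped
data = [line.strip() for line in open("data.txt", "r")]
print(data)  # ['Hello world', 'Hello Python']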

14. Classes

Classes normally span several lines, but Python offers a few ways to get class-like behavior in one line of code.

# Class in one line
# Normal way
class Emp:
    def __init__(self, name, age):
        self.name = name
        self.age = age

emp1 = Emp("Haider", 22)
print(emp1.name, emp1.age)  # Haider 22

# One-line way
# Method 1: lambda with dynamic attributes
Emp = lambda: None; Emp.name = "Haider"; Emp.age = 22
print(Emp.name, Emp.age)  # Haider 22

# Method 2
from collections import namedtuple
Emp = namedtuple('Emp', ["name", "age"])("Haider", 22)
print(Emp.name, Emp.age)  # Haider 22

15. Semicolons

This snippet shows how to use semicolons to write several statements in one line.

# Semicolons in one line
# Example 1
a = "Python"; b = "Programming"; c = "Language"; print(a, b, c)
# Output:
# Python Programming Language

16. Printing

Not a very important snippet, but sometimes useful when you don't need a loop for the task.

# Print in one line
# Normal way
for x in range(1, 5):
    print(x)  # 1 2 3 4, one number per line

# One-line way
print(*range(1, 5))  # 1 2 3 4
print(*range(1, 6))  # 1 2 3 4 5

17. Map function

map() is a higher-order function that applies a function to every element of an iterable; here is how to use it in one line of code.

#Map in One Line

print(list(map(lambda a: a + 2, [5, 6, 7, 8, 9, 10])))

#output

# [7, 8, 9, 10, 11, 12]

18. Deleting multiple elements from a list

With a small tweak, you can delete several elements from a list in one line by combining del with a slice.

# Delete multiple elements in one line

mylist = [100, 200, 300, 400, 500]

del mylist[1::2]

print(mylist) # [100, 300, 500]

19. Printing patterns

You no longer need a loop to print a repeated pattern; print and the * operator can do it in one line.

# Print pattern in one line
# Normal way
for x in range(3):
    print('😀')
# Output (one per line):
# 😀
# 😀
# 😀

# One-line way
print('😀' * 3)  # 😀😀😀
print('😀' * 2)  # 😀😀
print('😀' * 1)  # 😀

20. Finding prime numbers

This snippet shows a one-liner that finds the prime numbers in a range.

# Find prime numbers

print(list(filter(lambda a: all(a % b != 0 for b in range(2, a)), range(2,20))))

#Output

# [2, 3, 5, 7, 11, 13, 17, 19]
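The one-liner above tests every divisor below a, which gets slow for larger ranges. Any factor larger than the square root of a pairs with one smaller than it, so a sketch that only tries divisors up to `math.isqrt(a)` (Python 3.8+) gives the same result with far fewer checks:

```python
import math

# a % b is truthy (non-zero) when b does not divide a
primes = [a for a in range(2, 50) if all(a % b for b in range(2, math.isqrt(a) + 1))]
print(primes)  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37, 41, 43, 47]
```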

student info management

# Student records stored as dictionaries in a list
student_info = [
    {'name': '婧琪', 'chinese': 60, 'math': 60, 'english': 60, 'total': 180},
    {'name': '巳月', 'chinese': 60, 'math': 60, 'english': 60, 'total': 180},
    {'name': '落落', 'chinese': 60, 'math': 60, 'english': 60, 'total': 180},
]

# Menu printed on every pass of the loop
msg = '1. Add  2. Show all  3. Search  4. Delete  5. Modify  0. Exit'
HEADER = 'Name\t\tChinese\t\tMath\t\tEnglish\t\tTotal'

def print_row(student):
    # Print one record, tab-separated to line up with HEADER
    print(student['name'], student['chinese'], student['math'], student['english'], student['total'], sep='\t\t')

def read_student():
    # Ask for a name and three scores, and build a record with the total
    name = input('Enter the student name: ')
    chinese = int(input('Enter the Chinese score: '))
    math = int(input('Enter the math score: '))
    english = int(input('Enter the English score: '))
    return {'name': name, 'chinese': chinese, 'math': math, 'english': english, 'total': chinese + math + english}

# Loop until the user chooses 0
while True:
    print(msg)
    num = input('Enter the operation you want: ')
    # Check the input and run the matching action
    if num == '1':
        student_info.append(read_student())  # add the new record to the list
    elif num == '2':
        print(HEADER)
        for student in student_info:
            print_row(student)
    elif num == '3':
        name = input('Enter the name to search for: ')
        for student in student_info:
            if name == student['name']:  # does the name match this record?
                print(HEADER)
                print_row(student)
                break
        else:  # runs only if the loop finished without a break
            print('No such student: no record for {}!'.format(name))
    elif num == '4':
        name = input('Enter the name to delete: ')
        for student in student_info:
            if name == student['name']:
                print(HEADER)
                print_row(student)
                if input(f'Really delete {name}? (y/n) ') in ('y', 'Y'):
                    student_info.remove(student)
                    print(f'{name} has been deleted!')
                break
        else:
            print('No such student: no record for {}!'.format(name))
    elif num == '5':
        print('Modify student info')
        name = input('Enter the name to modify: ')
        for student in student_info:
            if name == student['name']:
                print(HEADER)
                print_row(student)
                if input(f'Modify {name}? (y/n) ') in ('y', 'Y'):
                    student.update(read_student())  # overwrite the record in place
                    print(f"{student['name']}'s record has been modified!")
                break
        else:
            print('No such student: no record for {}!'.format(name))
    elif num == '0':
        break

Upgrading if else to new syntax

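Python: from if else to match case

Python is a language that leans heavily on if else

Python has long taken if else to the extreme. For example, Python has no ternary operator (xx ? y : z), but that doesn't matter: it builds one out of if else.

x = True if 100 > 0 else False

And it doesn't stop there: if and else can each team up with other syntax, and else is the more versatile of the two.

a: else can be paired with try; the else block runs when the try block raises no exception.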

#!/usr/bin/env python3
# -*- coding: utf8 -*-

def main():
    try:
        # ...
        pass
    except Exception as err:
        pass
    else:
        print("this is else block")
    finally:
        print("finally block")

if __name__ == "__main__":
    main()

b: else can also be used with loops; the else block runs when the loop body did not execute a break statement.

#!/usr/bin/env python3
# -*- coding: utf8 -*-

def main():
    for i in range(3):
        pass
    else:
        print("this is else block")

    while False:
        pass
    else:
        print("this is else block")

if __name__ == "__main__":
    main()

c: if has fewer side jobs than else; the most common one is the list comprehension.

Take filtering the even numbers out of a list as an example. Traditionally, the code might look like this:

#!/usr/bin/env python3
# -*- coding: utf8 -*-

def main():
    result = []
    numbers = [1, 2, 3, 4, 5]
    for number in numbers:
        if number % 2 == 0:
            result.append(number)
    print(result)

if __name__ == "__main__":
    main()

A list comprehension does it in one line:

#!/usr/bin/env python3
# -*- coding: utf8 -*-

def main():
    numbers = [1, 2, 3, 4, 5]
    print([n for n in numbers if n % 2 == 0])

if __name__ == "__main__":
    main()

These enhancements look fine, but for switch-like scenarios they are not ideal.

No switch statement? if else steps in

For a language like Python that pushes if else to its syntactic limits, not having a switch statement is no problem: it just uses if else!

#!/usr/bin/env python3
# -*- coding: utf8 -*-

def fun(times):
    """This function is not the focus of the test, so its body is left empty.

    Parameters
    ----------
    times: int
    """
    pass

def main(case_id: int):
    """In practice there is more logic between case_id and the call; for simplicity it is reduced to 100 * case_id here.

    Parameters
    ----------
    case_id: int
    """
    if case_id == 1:
        fun(100 * 1)
    elif case_id == 2:
        fun(100 * 2)
    elif case_id == 3:
        fun(100 * 3)
    elif case_id == 4:
        fun(100 * 4)

if __name__ == "__main__":
    main(1)

As you can see, this code reads like a ledger and is not elegant at all; in Python terms, it isn't Pythonic! Other languages aside, in Python being inelegant is a sin.

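After all this buildup, we're finally getting close to the point.

The community came up with a relatively elegant alternative that does not use if else at all.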

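#!/usr/bin/env python3
# -*- coding: utf8 -*-

def fun(times):
    pass

# A dict with the case as key and the function to call as value routes each case
routers = {
    1: fun,
    2: fun,
    3: fun,
    4: fun
}

def main(case_id: int):
    routers[case_id](100 * case_id)

if __name__ == "__main__":
    main(1)

With the new approach the code is indeed much more concise. From another angle, the community also evolved: from clinging to if else as a family heirloom to not using it at all.

Quite interesting, really.

The new approach isn't without problems, though: performance! Performance! It's the damn performance that falls short!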

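Performance test: if else vs. the dict approach

Before the results, here is my setup: a Tencent Cloud virtual machine running Python 3.12.0a3.

The test code records elapsed time and memory usage; the lower the time, the better the performance.

The full code is below.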

#!/usr/bin/env python3
# -*- coding: utf8 -*-
import timeit
import tracemalloc

tracemalloc.start()

def fun(times):
    """This function is not the focus of the test, so its body is left empty."""
    pass

# Routing dict from case to action
routers = {
    1: fun,
    2: fun,
    3: fun,
    4: fun
}

def main_if_else(case_id: int):
    """Measures the if else approach.

    Parameters
    ----------
    case_id: int
        Unique identifier of each case

    Returns
    -------
    None
    """
    if case_id == 1:
        fun(100 * 1)
    elif case_id == 2:
        fun(100 * 2)
    elif case_id == 3:
        fun(100 * 3)
    elif case_id == 4:
        fun(100 * 4)

def main_dict(case_id: int):
    """Measures the dict approach (same parameters as main_if_else)."""
    routers[case_id](100 * case_id)

def benchmark(main):
    # 1. Record the starting time and memory
    start_current, start_peak = tracemalloc.get_traced_memory()
    start_at = timeit.default_timer()
    # 2. Run the workload: 10 million calls cycling through cases 1-4
    for i in range(10000000):
        main((i % 4) + 1)
    # 3. Record the end time and the totals
    cost = timeit.default_timer() - start_at
    end_current, end_peak = tracemalloc.get_traced_memory()
    print(f"{main.__name__}: time cost = {cost} .")
    print(f"memory cost = {end_current - start_current}, {end_peak - start_peak}")

if __name__ == "__main__":
    # Each approach has its own name, so neither definition overwrites the other
    for main in (main_if_else, main_dict):
        benchmark(main)

Here are the test results from my development environment.


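As the results show, the dict approach is somewhat more elegant, but it doesn't perform well.

And now the star of this story takes the stage.

The new match case syntax

Python 3.10 introduced a new syntax, match case, which is similar to switch case in other languages.

It performs a bit better than the dict approach and is a bit more elegant than if else.

The syntax looks roughly like this: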


match xxx:
    case aaa:
        ...
    case bbb:
        ...
    case ccc:
        ...
    case ddd:
        ...

Talk is cheap! Let's modify our test code and compare the performance of all three approaches.

#!/usr/bin/env python3
# -*- coding: utf8 -*-
import timeit
import tracemalloc

tracemalloc.start()

def fun(times):
    """This function is not the focus of the test, so its body is left empty."""
    pass

# Routing dict from case to action
routers = {
    1: fun,
    2: fun,
    3: fun,
    4: fun
}

def main_if_else(case_id: int):
    """Measures the if else approach.

    Parameters
    ----------
    case_id: int
        Unique identifier of each case

    Returns
    -------
    None
    """
    if case_id == 1:
        fun(100 * 1)
    elif case_id == 2:
        fun(100 * 2)
    elif case_id == 3:
        fun(100 * 3)
    elif case_id == 4:
        fun(100 * 4)

def main_dict(case_id: int):
    """Measures the dict approach (same parameters as main_if_else)."""
    routers[case_id](100 * case_id)

def main_match_case(case_id: int):
    """Measures the match case approach (same parameters as main_if_else)."""
    match case_id:
        case 1:
            fun(100 * 1)
        case 2:
            fun(100 * 2)
        case 3:
            fun(100 * 3)
        case 4:
            fun(100 * 4)

def benchmark(main):
    # 1. Record the starting time and memory
    start_current, start_peak = tracemalloc.get_traced_memory()
    start_at = timeit.default_timer()
    # 2. Run the workload: 10 million calls cycling through cases 1-4
    for i in range(10000000):
        main((i % 4) + 1)
    # 3. Record the end time and the totals
    cost = timeit.default_timer() - start_at
    end_current, end_peak = tracemalloc.get_traced_memory()
    print(f"{main.__name__}: time cost = {cost} .")
    print(f"memory cost = {end_current - start_current}, {end_peak - start_peak}")

if __name__ == "__main__":
    # Each approach has its own name, so no definition overwrites another
    for main in (main_if_else, main_dict, main_match_case):
        benchmark(main)

As you can see, the elapsed time of match case is quite good.

The detailed data is below.

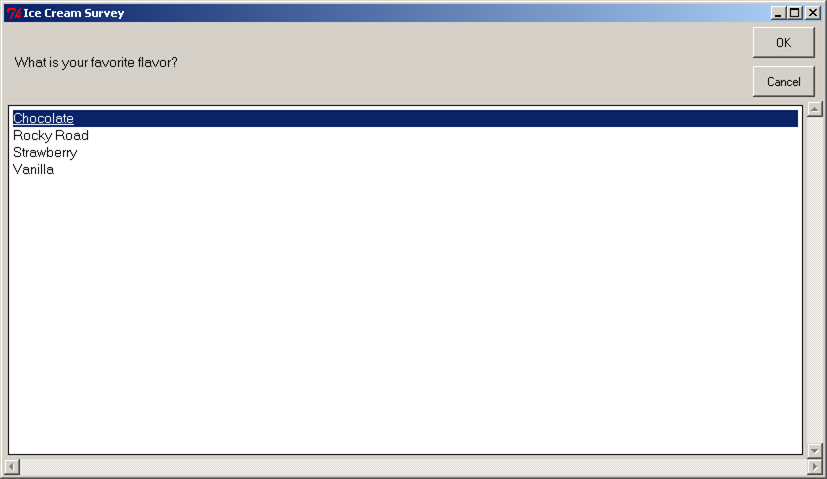

20 Python libraries

Requests.

The most famous HTTP library, written by Kenneth Reitz.

It's a must-have for every Python developer.

Scrapy.

If you are involved in web scraping, this is a must-have library for you.

After using this library you won't use any other.

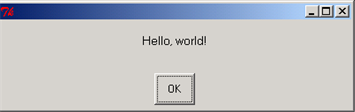

wxPython.

A GUI toolkit for Python.

I have primarily used it in place of tkinter.

You will really love it.

Pillow.

A friendly fork of PIL (Python Imaging Library).

It is more user-friendly than PIL and is a must-have for anyone who works with images.

SQLAlchemy.

A database library.

Many love it and many hate it.

The choice is yours.

BeautifulSoup.

I know it's slow, but this XML and HTML parsing library is very useful for beginners.

Twisted.

The most important tool for any network application developer.

It has a very clean API and is used by many well-known Python developers.

NumPy.

How could we leave out this essential library? It adds advanced math functionality to Python.

SciPy.

When we talk about NumPy, we have to talk about SciPy.

It is a library of algorithms and mathematical tools for Python and has caused many scientists to switch from Ruby to Python.

matplotlib.

A numerical plotting library.

It is very useful for any data scientist or data analyst.

Pygame.

Which developer doesn't like playing games and making them? This library will help you achieve your goal of 2D game development.

Pyglet.

A 3D animation and game creation engine.

This is the engine in which the famous Python port of Minecraft was made.

PyQt.

A GUI toolkit for Python.

It is my second choice after wxPython for developing GUIs for my Python scripts.

PyGTK.

Another Python GUI library.

It is the library the famous BitTorrent client was built with.

Scapy.

A packet sniffer and analyzer for Python, written in Python.

pywin32.

A Python library that provides useful methods and classes for interacting with Windows.

nltk.

Natural Language Toolkit. I realize most people won't be using this one, but it's general-purpose enough to include.

It is a very useful library if you want to manipulate strings.

But its capabilities go well beyond that.

Do check it out.

nose.

A testing framework for Python.

It is used by millions of Python developers.

It is a must-have if you do test-driven development.

SymPy.

SymPy can do algebraic evaluation, differentiation, expansion, complex numbers, and more.

It is written in pure Python.

IPython.

I just can’t stress enough how useful this tool is.

It is a Python prompt on steroids.

It has completion, history, shell capabilities, and a lot more.

Make sure that you take a look at it.

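Automatic text-to-speech playback in Python

Implementing automatic text playback in Python takes five main steps.

Step 1: import the required libraries. Two libraries are used:

(1) requests: calls the Baidu API to convert the text into audio.

(2) os: plays the audio.

Step 2: get the access_token for Baidu's text-to-speech API.

This is done with the get method of the requests library.

Note:

The AK and SK (API key and secret key) must be obtained by registering on Baidu Cloud, so don't forget this step!

Step 3: convert the text into an audio stream, again with the get method of the requests library.

Step 4: save the audio stream by writing it to a file with the file object's write method.

Step 5: play the saved audio file.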
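Here is a minimal sketch of the five steps. The endpoint URLs and the parameter names (tex, tok, cuid, ctp, lan, aue) follow Baidu's public REST text-to-speech API as I understand it; treat them, the cuid value, and all helper names as assumptions to verify against Baidu's current documentation. YOUR_AK and YOUR_SK are placeholders for your own keys.

```python
import os
import sys

TOKEN_URL = "https://aip.baidubce.com/oauth/2.0/token"  # assumed endpoint
TTS_URL = "https://tsn.baidu.com/text2audio"             # assumed endpoint


def get_access_token(api_key, secret_key):
    """Step 2: exchange the AK/SK pair for an access_token."""
    import requests  # imported lazily so the pure helpers work without it
    params = {"grant_type": "client_credentials",
              "client_id": api_key, "client_secret": secret_key}
    resp = requests.get(TOKEN_URL, params=params, timeout=10)
    resp.raise_for_status()
    return resp.json()["access_token"]


def build_tts_params(text, token):
    """Query parameters for the synthesis request (aue=3 asks for MP3)."""
    return {"tex": text, "tok": token, "cuid": "python-tts-demo",
            "ctp": 1, "lan": "zh", "aue": 3}


def text_to_audio(text, token):
    """Step 3: fetch the audio stream for the text."""
    import requests
    resp = requests.get(TTS_URL, params=build_tts_params(text, token), timeout=30)
    resp.raise_for_status()
    # Errors come back as JSON instead of audio bytes
    if resp.headers.get("Content-Type", "").startswith("application/json"):
        raise RuntimeError(resp.text)
    return resp.content


def save_audio(data, path="speech.mp3"):
    """Step 4: write the audio bytes to a file."""
    with open(path, "wb") as f:
        f.write(data)
    return path


def play(path):
    """Step 5: open the file with the system's default player."""
    if sys.platform.startswith("win"):
        os.startfile(path)
    elif sys.platform == "darwin":
        os.system(f'open "{path}"')
    else:
        os.system(f'xdg-open "{path}"')


# Usage (needs your own keys and network access):
# token = get_access_token("YOUR_AK", "YOUR_SK")
# play(save_audio(text_to_audio("你好，世界", token)))
```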

Python cheat sheets

Basics

Neural networks

Linear algebra

Python basics

SciPy scientific computing

Spark

Data storage and visualization

NumPy

pandas

Bokeh

Plotting

matplotlib

ggplot

Machine learning

scikit-learn (sklearn)

Keras

TensorFlow

Algorithms

Data structures

Complexity

Sorting algorithms

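Euler's theorem and graph theory

Graph theory originated in the 18th century, and it comes with an interesting story.

Königsberg was a city in historical Prussia (today Kaliningrad, Russia), with 7 bridges spanning the Pregel River.

Someone asked:

Is it possible to walk around Königsberg crossing each bridge exactly once?

Note that it does not matter whether we finish exactly where we started.

We will come back to this question after learning some terminology.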


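Graph

A graph is a mathematical structure made up of vertices (also called nodes or points) (V), which are connected by edges (also called links or lines) (E). It is written G = (V, E).

Degree

The number of edges attached to a particular vertex is called its degree.

Figure: a graph with 6 vertices and 7 edges.

Graphs are commonly used in computer science to describe relationships between different objects.

For example, Facebook can be represented as a graph of people (vertices) and their relationships (edges).

Similarly, the editors (edges) who contributed to different Wikipedia language editions (vertices) during one summer month can be described as a graph, as shown below.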


(Image from Wikipedia.)

Taking Königsberg as an example, the city with its river and bridges can be depicted as shown below.


Euler first represented the map above as a graph, as shown below.


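He represented each land mass with a vertex (node) and each bridge with an edge.

Graph representation of the Pregel River in Königsberg (image from Wikipedia).

Euler proposed a theorem stating: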


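The bridges of a city can each be crossed exactly once if the land masses and bridges form a connected graph and every vertex, except at most two, has an even degree.

Looking at the graph of Königsberg, every vertex has an odd degree, so it is impossible to walk around the city crossing each bridge exactly once.

This theorem gave rise to modern graph theory, the study of graphs.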
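To make Euler's criterion concrete, here is a small sketch that counts odd-degree vertices in a multigraph (parallel edges allowed) and checks connectivity. The labels A (the central island), B, C, and D for the four land masses, and the bridge list, are my own encoding of the classic Königsberg layout; the function names are illustrative.

```python
from collections import Counter


def odd_degree_vertices(edges):
    """Vertices with an odd number of edge endpoints (parallel edges count)."""
    degree = Counter()
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return [node for node, d in degree.items() if d % 2 == 1]


def is_connected(edges):
    """True if every vertex can reach every other one, ignoring multiplicity."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    if not adj:
        return True
    start = next(iter(adj))
    seen, stack = {start}, [start]
    while stack:
        for nxt in adj[stack.pop()]:
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return len(seen) == len(adj)


def has_euler_path(edges):
    # Euler: connected, and 0 or 2 vertices of odd degree
    return is_connected(edges) and len(odd_degree_vertices(edges)) in (0, 2)


# Seven bridges: A-B twice, A-C twice, A-D, B-D, C-D (degrees 5, 3, 3, 3)
KONIGSBERG = [("A", "B"), ("A", "B"), ("A", "C"), ("A", "C"),
              ("A", "D"), ("B", "D"), ("C", "D")]
print(has_euler_path(KONIGSBERG))  # False
```

Adding one more bridge between B and C would leave only A and D with odd degree, and the walk would become possible.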

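Graphs and their types

Directed graph (digraph): a graph whose edges have a direction.

This means an edge can only be traversed in one direction.

For example, a graph representing a Medium newsletter and its subscribers.

Undirected graph: a graph whose edges have no direction.

This means an edge can be traversed in both directions.

For example, a graph representing friendships on Facebook.

Cycle: a cycle is a graph in which some vertices (at least 3) are connected in a closed chain.

Cyclic graph: a graph that contains at least one cycle.

Figure: a directed cyclic graph.

Acyclic graph: a graph with no cycles.

Connected graph: a graph in which there is a path from any vertex to any other vertex.

It can be:

Strongly connected: every vertex can reach every other vertex while following the edge directions.

Weakly connected: the vertices are connected only when the edge directions are ignored.

Disconnected graph: a graph in which some vertices cannot be reached from the others is called a disconnected graph.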
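The three cases can be told apart with plain reachability searches. In this sketch (function names are my own), a directed graph is strongly connected if one start vertex reaches everything both along the edges and along the reversed edges; if that fails but everything is reachable once directions are ignored, it is weakly connected.

```python
def reachable(adj, start):
    """Return every node reachable from start by following adj."""
    seen, stack = {start}, [start]
    while stack:
        node = stack.pop()
        for nxt in adj[node]:
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


def classify(nodes, edges):
    """Label a directed graph as strongly connected, weakly connected or disconnected."""
    forward = {n: [] for n in nodes}
    backward = {n: [] for n in nodes}
    either = {n: [] for n in nodes}
    for u, v in edges:
        forward[u].append(v)
        backward[v].append(u)  # reversed edge
        either[u].append(v)    # direction ignored
        either[v].append(u)
    everything = set(nodes)
    start = nodes[0]
    # Strong: start reaches every node AND every node reaches start
    if reachable(forward, start) == everything and reachable(backward, start) == everything:
        return "strongly connected"
    if reachable(either, start) == everything:
        return "weakly connected"
    return "disconnected"


print(classify(["A", "B", "C"], [("A", "B"), ("B", "C"), ("C", "A")]))  # strongly connected
print(classify(["A", "B", "C"], [("A", "B"), ("B", "C")]))              # weakly connected
print(classify(["A", "B", "C"], [("A", "B")]))                          # disconnected
```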

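Centrality algorithms

Centrality algorithms can be used to analyze an entire graph to find out which nodes have the most influence on the network.

However, to measure a node's influence with an algorithm, we first have to define what "influence" means on a graph.

This differs from algorithm to algorithm, and it is a good starting point when deciding which centrality algorithm to choose.

Degree Centrality uses a node's degree (the number of edges attached to it) to measure how much influence it has on the graph.

Closeness Centrality uses the inverse of the distances between a given node and all other nodes to gauge how central the node is in the graph.

Betweenness Centrality uses shortest paths to determine which nodes act as central "bridges" in the graph, identifying key bottlenecks in the network.

PageRank uses a set of random walks to measure a given node's influence on the network, by measuring which nodes are more likely to be visited during a random walk.

Note that PageRank handles the disconnected-graph problem that random walks face by occasionally jumping to a random node in the graph instead of following an edge.

This lets the algorithm explore even the disconnected parts of the graph.

PageRank is named after Google co-founder Larry Page. It was developed as the backbone of Google's search engine and helped it outperform its competitors in the early days of the internet.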
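Two of these measures are short enough to sketch directly. The following is a simplified illustration, not a production implementation: degree centrality is normalized by n - 1, and PageRank is computed by power iteration with the common damping factor of 0.85, where the (1 - damping) share models the random jump described above. The example graphs are made up.

```python
def degree_centrality(graph):
    """Degree / (n - 1) for each node of an undirected adjacency dict."""
    n = len(graph)
    return {node: len(nbrs) / (n - 1) for node, nbrs in graph.items()}


def pagerank(graph, damping=0.85, iterations=100):
    """Power-iteration PageRank for a directed adjacency dict."""
    nodes = list(graph)
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Random jump: every node gets an equal (1 - damping) share
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node in nodes:
            targets = graph[node]
            if targets:
                share = damping * rank[node] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling node (no out-links): spread its rank over all nodes
                for target in nodes:
                    new_rank[target] += damping * rank[node] / n
        rank = new_rank
    return rank


star = {"hub": ["a", "b", "c"], "a": ["hub"], "b": ["hub"], "c": ["hub"]}
print(degree_centrality(star)["hub"])  # 1.0

web = {"A": ["B"], "B": ["A"], "C": ["A"]}  # C links to A, nobody links to C
print(pagerank(web))
```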


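Pathfinding and search algorithms

Another foundational family of graph algorithms is shortest-path algorithms.

As we explored in our article on graph traversal algorithms (also known as pathfinding algorithms), they usually come in two forms, depending on the nature of the problem and how you want to explore the graph to find the shortest path.

Depth-first search traverses the graph as deeply as possible first, then returns to the starting point and takes another deep path.

Breadth-first search keeps its traversal as close to the starting node as possible and only ventures deeper into the graph after exhausting all the paths closest to it.

Pathfinding is used in many use cases, perhaps most famously in Google Maps.

In the early days of GPS, Google Maps used pathfinding on a graph to compute the fastest route to a given destination.

This is just one of countless examples of people using graphs to solve everyday problems.

Figures: depth-first and breadth-first search examples in graph data science; Wikipedia's illustration of Dijkstra's algorithm.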

Depth-first search

![]()

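Depth-first search (DFS) is an algorithm for searching a graph data structure.

The algorithm starts at the root node and explores as far as possible along each branch before backtracking.

Depth-first search can be implemented in Python as follows.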

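Here we define a Node class whose constructor sets its children (the connected vertices) and its name.

The addChild method adds a new child node to the node.

The depthFirstSearch method implements depth-first search recursively.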

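class Node:
    def __init__(self, name):
        self.children = []  # connected child vertices
        self.name = name

    def addChild(self, name):
        # Create a child node; returning self allows chained calls
        self.children.append(Node(name))
        return self

    def depthFirstSearch(self, array):
        # Visit this node first, then recurse into each child in order
        array.append(self.name)
        for child in self.children:
            child.depthFirstSearch(array)
        return array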
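The Node class above only ever builds trees, so it needs no record of visited nodes; in a general graph with a cycle, the same recursion would loop forever. A minimal iterative sketch for general graphs, assuming an adjacency-list dictionary (the function name `dfs` is hypothetical), keeps a visited set and an explicit stack instead of recursion:

```python
def dfs(adj, start):
    """Depth-first visiting order from start; safe on graphs with cycles."""
    visited, order = set(), []
    stack = [start]
    while stack:
        node = stack.pop()
        if node in visited:
            continue
        visited.add(node)
        order.append(node)
        # Push neighbours in reverse so they are explored in listed order
        for nxt in reversed(adj.get(node, [])):
            if nxt not in visited:
                stack.append(nxt)
    return order

# D links back to A, forming a cycle
graph = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": ["A"]}
print(dfs(graph, "A"))  # ['A', 'B', 'D', 'C']
```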

Breadth-first search

Breadth-first search (BFS) is another algorithm for searching a graph data structure.

(Figure: breadth-first search)

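It starts at the root node and visits every node at the current depth before moving on to the nodes at the next depth level.

The algorithm can be written as a breadthFirstSearch method of the Node class, as follows.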

from collections import deque

class Node:
    def __init__(self, name):
        self.children = []  # connected child vertices
        self.name = name

    def addChild(self, name):
        # Create a child node; returning self allows chained calls
        self.children.append(Node(name))
        return self

    def breadthFirstSearch(self, array):
        # A FIFO queue makes nodes come out level by level;
        # deque.popleft() is O(1), unlike list.pop(0)
        queue = deque([self])
        while queue:
            current = queue.popleft()
            array.append(current.name)
            queue.extend(current.children)
        return array
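Because BFS visits nodes in order of their distance from the start, recording each node's parent is enough to recover a shortest path (fewest edges) in an unweighted graph. This sketch assumes an adjacency-list dictionary rather than the Node class above; `shortest_path` is a hypothetical helper name.

```python
from collections import deque

def shortest_path(adj, start, goal):
    """Fewest-edge path from start to goal, or None if goal is unreachable."""
    parent = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk the parent links back to start, then reverse
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in adj.get(node, []):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None

graph = {"A": ["B", "C"], "B": ["D"], "C": ["D", "E"], "D": ["F"], "E": ["F"]}
print(shortest_path(graph, "A", "F"))  # ['A', 'B', 'D', 'F']
```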

Graph Algorithms: The Surprising Diversity of Use Cases, Explained

Appendix:

Supplement to the Graph Algorithm Family Tree

Author: Sean Robinson, MS / Lead Data Scientist

(Figure: the graph algorithm family tree)

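Community detection algorithms

Community detection is a common use case for all kinds of graphs.

It is generally useful whenever understanding how different groups of nodes in a graph cluster together provides tangible value for the use case.

That could be anything from a social network, to a fleet of trucks delivering goods, to a network of accounts that transact with one another.

However, the algorithm you choose to discover these communities will strongly affect how the nodes are grouped.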

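Triangle Count is based on the simple principle that three fully interconnected nodes (a triangle) form the simplest community structure that can exist in a graph.

It therefore finds every triangle in the graph to determine how nodes group together.

Strongly Connected Components and Connected Components (also known as Weakly Connected Components) are excellent algorithms for determining the shape of a graph.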

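Both aim to measure how many separate graphs make up the full dataset.

Connected Components returns the number of groups of nodes and edges that are completely disconnected from one another, while Strongly Connected Components returns the subgraphs of a directed graph in which every node can reach every other node by following edge directions.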
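Triangle counting and (weak) connected components can both be sketched on a small undirected graph. The helper names below are hypothetical, and the graph is assumed to be simple (no self-loops or parallel edges) with comparable node labels, so each triangle can be reported once as a sorted tuple.

```python
from itertools import combinations

def undirected(edges):
    """Adjacency sets for an undirected graph built from (u, v) pairs."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj

def triangles(adj):
    """Each triangle exactly once, as (u, v, w) with u < v < w."""
    found = []
    for u in adj:
        for v, w in combinations(sorted(adj[u]), 2):
            if u < v and w in adj[v]:
                found.append((u, v, w))
    return found

def connected_components(adj):
    """Groups of nodes that can reach each other, ignoring direction."""
    seen, comps = set(), []
    for start in adj:
        if start in seen:
            continue
        comp, stack = [], [start]
        seen.add(start)
        while stack:
            node = stack.pop()
            comp.append(node)
            for nxt in adj[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        comps.append(sorted(comp))
    return comps

adj = undirected([("a", "b"), ("b", "c"), ("c", "a"), ("c", "d"), ("x", "y")])
print(triangles(adj))             # [('a', 'b', 'c')]
print(connected_components(adj))  # [['a', 'b', 'c', 'd'], ['x', 'y']]
```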

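Because both summarize the overall shape of a graph, Connected Components and Strongly Connected Components are often used together as an initial form of exploratory data analysis when first examining graph data.

Louvain Modularity finds communities by comparing the density of clusters of nodes and edges with the average for the network.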

If a group of nodes is more densely connected than the graph's average would suggest, those nodes can be considered a community.
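Louvain works by repeatedly moving nodes between communities to increase a score called modularity, which compares the edges inside each community with the number expected from the nodes' degrees alone. The sketch below only computes that score for a given partition of an undirected graph, using the standard form Q = sum over communities c of (L_c / m - (d_c / 2m)^2), where L_c is the number of edges inside c, d_c the total degree of its nodes, and m the total number of edges. It is not the full Louvain algorithm.

```python
def modularity(edges, communities):
    """Modularity of a partition (list of node sets) of an undirected graph."""
    m = len(edges)
    label = {n: i for i, comm in enumerate(communities) for n in comm}
    inside = [0] * len(communities)      # L_c: edges within community c
    degree_sum = [0] * len(communities)  # d_c: total degree of community c
    for u, v in edges:
        degree_sum[label[u]] += 1
        degree_sum[label[v]] += 1
        if label[u] == label[v]:
            inside[label[u]] += 1
    return sum(l / m - (d / (2 * m)) ** 2 for l, d in zip(inside, degree_sum))

# Two triangles joined by a single edge (3-4)
edges = [(1, 2), (2, 3), (1, 3), (4, 5), (5, 6), (4, 6), (3, 4)]
print(modularity(edges, [{1, 2, 3}, {4, 5, 6}]))  # about 0.357 (= 5/14)
print(modularity(edges, [{1, 2, 3, 4, 5, 6}]))    # 0.0: one big community
```

Splitting the graph along the single bridge scores higher than lumping everything together, which is the kind of comparison Louvain makes at every step.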

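Conclusion

In this article, a kind of graph algorithm cheat sheet, we have only scratched the surface of the most common graph algorithms used in data science, which make it possible to take advantage of the interconnections that graphs bring to data analysis.

In future articles, for example, we will look more closely at graph search algorithms and more.

We looked at the most basic graph theory algorithms, which serve as building blocks for more complex ones, and examined more sophisticated algorithms that solve problems across a wide range of use cases.

This holds true whether you are using the Neo4j graph database algorithms or any other graph database.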

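The 10 Hardest Python Concepts

Understanding the intricacies of OOP, decorators, generators, multithreading, exception handling, regular expressions, async/await, functional programming, metaprogramming, and network programming in Python

These are arguably the hardest concepts to learn in Python.

Of course, what is hard for some people may be easier for others.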

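Object-oriented programming (OOP): for beginners, the concepts of classes, objects, inheritance, and polymorphism can be hard to grasp because they are abstract.

OOP is a powerful programming paradigm for organizing and reusing code, and it is used extensively in many Python libraries and frameworks.

Example: creating a Dog class: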

class Dog:
    def __init__(self, name, breed):
        # Instance attributes, set when the object is created
        self.name = name
        self.breed = breed

    def bark(self):
        print("Woof!")

my_dog = Dog("Fido", "Golden Retriever")
print(my_dog.name)  # prints "Fido"
my_dog.bark()       # prints "Woof!"
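The Dog example shows classes and objects but not the other two ideas mentioned, inheritance and polymorphism. The sketch below redefines Dog as a subclass of a hypothetical Animal base class (so it drops the breed attribute) and adds a Cat; the same `speak()` call then behaves differently depending on the object's class.

```python
class Animal:
    def __init__(self, name):
        self.name = name

    def speak(self):
        raise NotImplementedError("subclasses must implement speak()")

class Dog(Animal):  # Dog inherits __init__ from Animal
    def speak(self):
        return "Woof!"

class Cat(Animal):
    def speak(self):
        return "Meow!"

# Polymorphism: one loop, one method call, class-specific behaviour
for pet in [Dog("Fido"), Cat("Tom")]:
    print(pet.name, pet.speak())
# Fido Woof!
# Tom Meow!
```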

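Decorators:

Decorators can be hard to understand because they involve manipulating function objects and closures.

Decorators are a powerful Python feature for adding functionality to existing code, and they are used widely in Python frameworks and libraries.

Example: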

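def my_decorator(func):
    # wrapper is a closure: it keeps a reference to func
    def wrapper():
        print("Before calling func.")
        func()
        print("After calling func.")
    return wrapper

@my_decorator  # equivalent to: say_whee = my_decorator(say_whee)
def say_whee():
    print("Whee!")

say_whee()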
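The wrapper above only works for functions without arguments, and it hides the original function's name. A more general pattern forwards `*args` and `**kwargs` and applies `functools.wraps` to copy the name and docstring. The call-counting decorator below is a hypothetical example of that pattern.

```python
import functools

def count_calls(func):
    @functools.wraps(func)  # keep func's __name__ and __doc__
    def wrapper(*args, **kwargs):
        wrapper.calls += 1
        return func(*args, **kwargs)
    wrapper.calls = 0  # state stored as an attribute on the wrapper
    return wrapper

@count_calls
def add(a, b):
    """Return the sum of a and b."""
    return a + b

print(add(2, 3))     # 5
print(add.calls)     # 1
print(add.__name__)  # add
```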

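Generator expressions and yield:

Generator functions and generator objects are a powerful, memory-efficient way to process large datasets, but they can be hard to understand because they involve iterators and the creation of custom iterables.

Example: a generator function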

# generator function
def my_gen():
    n = 1
    print('This is printed first')
    yield n  # pause here and hand n to the caller

    n += 1
    print('This is printed second')
    yield n

    n += 1
    print('This is printed at last')
    yield n

# Using a for loop
for item in my_gen():
    print(item)
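The heading also mentions generator expressions. They use list-comprehension syntax with parentheses and produce values lazily, which is where the memory saving comes from. A short sketch comparing the two (the size comparison uses `sys.getsizeof`, which measures only the container object itself):

```python
import sys

squares_list = [x * x for x in range(100_000)]  # builds all 100,000 values now
squares_gen = (x * x for x in range(100_000))   # computes values only when asked

print(sys.getsizeof(squares_gen) < sys.getsizeof(squares_list))  # True
print(sum(squares_gen) == sum(squares_list))                      # True
print(list(squares_gen))  # [] : a generator can be consumed only once
```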

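Multithreading: multithreading can be hard to understand because it involves managing several threads of execution at the same time, which can be difficult to coordinate and synchronize.

Example: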

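import threading

def worker():
    """thread worker function"""
    print(threading.get_ident())  # identifier of the current thread

threads = []
for i in range(5):
    t = threading.Thread(target=worker)
    threads.append(t)
    t.start()

# Wait for every thread to finish before continuing
for t in threads:
    t.join()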
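Coordination usually means protecting shared state. In this sketch, five threads each increment a shared counter 10,000 times. `counter += 1` is a read-modify-write sequence rather than a single atomic step, so without the `threading.Lock` some updates could be lost; with the lock, the final value is deterministic.

```python
import threading

counter = 0
lock = threading.Lock()

def increment(times):
    global counter
    for _ in range(times):
        with lock:  # only one thread may update counter at a time
            counter += 1

threads = [threading.Thread(target=increment, args=(10_000,)) for _ in range(5)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 50000
```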

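Exception handling:

Exception handling can be hard to understand because it involves managing and responding to errors and unexpected conditions in code, which can be complex and subtle.

Example: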

try:
    x = 1 / 0
except ZeroDivisionError as e:
    print("Error Code:", e)  # Error Code: division by zero

Case 2: use raise_for_status() to raise an exception when a call to an API is unsuccessful:

import requests

response = requests.get("https://google.com")
response.raise_for_status()  # does nothing unless the status code is 4xx or 5xx
print(response.text)
# <!doctype html><html itemscope="" itemtype="http://schema.org/WebPage" lang="en-IN"><head><meta content="text ...

response = requests.get("https://google.com/not-found")
response.raise_for_status()
# requests.exceptions.HTTPError: 404 Client Error: Not Found for url: https://google.com/not-found
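The examples above catch built-in exceptions. Much of the subtlety lies in defining your own exception types and in the less familiar `else` and `finally` clauses. The sketch below uses a hypothetical `InsufficientFundsError` and records which clauses ran in a list so the order is visible.

```python
class InsufficientFundsError(Exception):
    """Hypothetical error type for this example."""

def withdraw(balance, amount):
    if amount > balance:
        raise InsufficientFundsError(f"need {amount}, have {balance}")
    return balance - amount

def safe_withdraw(balance, amount, log):
    try:
        result = withdraw(balance, amount)
    except InsufficientFundsError as e:
        log.append(f"error: {e}")  # runs only if the exception was raised
        result = balance
    else:
        log.append("ok")           # runs only if no exception was raised
    finally:
        log.append("done")         # always runs
    return result

log = []
print(safe_withdraw(100, 30, log), log)   # 70 ['ok', 'done']
log = []
print(safe_withdraw(100, 500, log), log)  # 100 ['error: need 500, have 100', 'done']
```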

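Regular expressions: regular expressions can be hard to understand because they use a specialized syntax for pattern matching and text manipulation, which can be complex and hard to read.

Example: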

import re

string = "The rain in Spain"
x = re.search("^The.*Spain$", string)  # starts with "The" and ends with "Spain"

if x:
    print("YES! We have a match!")
else:
    print("No match")
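Beyond a yes/no match, regular expressions can extract and rearrange parts of text. This sketch uses named groups to pull dates out of a sample sentence (made up for illustration) and rewrite them from YYYY-MM-DD to DD/MM/YYYY.

```python
import re

text = "Deadlines: 2023-01-15, 2023-02-28 and 2023-03-31."
pattern = re.compile(r"(?P<year>\d{4})-(?P<month>\d{2})-(?P<day>\d{2})")

print(pattern.findall(text))
# [('2023', '01', '15'), ('2023', '02', '28'), ('2023', '03', '31')]

print(pattern.sub(r"\g<day>/\g<month>/\g<year>", text))
# Deadlines: 15/01/2023, 28/02/2023 and 31/03/2023.

m = pattern.search(text)
print(m.group("month"))  # 01
```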

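Async/await: async and await can be hard to understand because they involve non-blocking I/O and concurrency, which can be difficult to coordinate and synchronize.

Example: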

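import asyncio

async def my_coroutine():
    print("My coroutine")

# await is only valid inside an async function; asyncio.run() starts
# an event loop and runs the coroutine to completion
asyncio.run(my_coroutine())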
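Concurrency is where async/await pays off. In this minimal sketch, `asyncio.sleep` stands in for a slow network call (an assumption for illustration), and `asyncio.gather` runs both coroutines on one event loop. The total time is therefore close to the longest single delay rather than the sum of the delays.

```python
import asyncio
import time

async def fetch(name, delay):
    # Hypothetical stand-in for a network request
    await asyncio.sleep(delay)
    return f"{name} done"

async def main():
    start = time.perf_counter()
    results = await asyncio.gather(fetch("a", 0.3), fetch("b", 0.3))
    return results, time.perf_counter() - start

results, elapsed = asyncio.run(main())
print(results)                   # ['a done', 'b done']
print(f"{elapsed:.2f} seconds")  # close to 0.3, not 0.6, because the waits overlap
```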

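Functional programming: functional programming can be hard to understand because it requires a different way of thinking about programs, built on concepts such as immutability, first-class functions, and closures.

Example: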

from functools import reduce

my_list = [1, 2, 3, 4, 5]
result = reduce(lambda x, y: x * y, my_list)  # ((((1*2)*3)*4)*5)
print(result)  # 120
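The `reduce` example shows one higher-order function. The sketch below touches the other concepts named above: a closure (`make_multiplier`), functions passed as values to `map` and `filter`, an immutable tuple as input, and a small `compose` helper (a hypothetical name) built with `reduce`.

```python
from functools import reduce

def make_multiplier(factor):
    # Closure: the returned function remembers `factor`
    def multiply(x):
        return x * factor
    return multiply

double = make_multiplier(2)
numbers = (1, 2, 3, 4, 5)  # a tuple: immutable input

evens_doubled = tuple(map(double, filter(lambda n: n % 2 == 0, numbers)))
print(evens_doubled)  # (4, 8)

def compose(*funcs):
    """Chain functions left to right: compose(f, g)(x) == g(f(x))."""
    return reduce(lambda f, g: lambda x: g(f(x)), funcs, lambda x: x)

add_one_then_double = compose(lambda x: x + 1, double)
print(add_one_then_double(3))  # 8
```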

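Metaprogramming: metaprogramming can be hard to understand because it involves manipulating code at runtime, which can be complex and abstract.

Example: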

class MyMeta(type):
    def __new__(cls, name, bases, dct):
        # Runs when a class that uses this metaclass is created
        x = super().__new__(cls, name, bases, dct)
        x.attribute = "example"
        return x

class MyClass(metaclass=MyMeta):
    pass

obj = MyClass()
print(obj.attribute)  # example
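Metaclasses are the heaviest tool for this job. Two lighter forms of metaprogramming are `__init_subclass__`, a hook that runs whenever a subclass is defined, and calling `type(name, bases, namespace)` to build a class at runtime. The plugin registry below is a hypothetical example combining both.

```python
class Plugin:
    registry = {}

    def __init_subclass__(cls, **kwargs):
        super().__init_subclass__(**kwargs)
        Plugin.registry[cls.__name__] = cls  # runs when a subclass is defined

class CsvExporter(Plugin):
    pass

# type(name, bases, namespace) creates a class at runtime;
# the __init_subclass__ hook fires for it as well
JsonExporter = type("JsonExporter", (Plugin,), {"ext": ".json"})

print(sorted(Plugin.registry))  # ['CsvExporter', 'JsonExporter']
print(JsonExporter.ext)         # .json
```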

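Network programming:

Network programming can be hard to understand because it involves using sockets and protocols to communicate over a network, which can be complex and abstract.

Example: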

import socket

s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)  # IPv4, TCP
s.bind(("127.0.0.1", 3000))  # localhost, port 3000
s.listen()  # ready for s.accept() to receive client connections
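The snippet above stops at `listen()`, so no data is ever exchanged. The sketch below completes a round trip on one machine: a server thread accepts a single client and echoes its message back in upper case. It binds to port 0 so the operating system picks a free port, an adjustment made so the example does not depend on port 3000 being available.

```python
import socket
import threading

def serve_once(server):
    conn, _addr = server.accept()  # block until one client connects
    with conn:
        data = conn.recv(1024)
        conn.sendall(data.upper())  # echo back in upper case

server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))  # port 0: let the OS choose a free port
server.listen()
port = server.getsockname()[1]

t = threading.Thread(target=serve_once, args=(server,))
t.start()

with socket.create_connection(("127.0.0.1", port)) as client:
    client.sendall(b"hello")
    reply = client.recv(1024)

t.join()
server.close()
print(reply)  # b'HELLO'
```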

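Essential Python Command-Line Commands

I. Python environment commands

1. Check the version: python -V or python --version

2. Find the installation directory: where python (on Windows; on macOS and Linux use which python)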
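The same information is available from inside Python, which helps when several interpreters are installed. This is a small sketch using the standard `sys` and `shutil` modules. Note that `sys.executable` is the interpreter actually running the script, which is not necessarily the first `python` found on PATH.

```python
import shutil
import sys

print(sys.version)              # version string of the running interpreter
print(sys.version_info >= (3, 8))  # tuple comparison, handy for feature checks
print(sys.executable)           # path of the running interpreter binary
print(shutil.which("python"))   # first "python" on PATH, or None
```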

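II. pip commands

requirements.txt: this file records the third-party libraries the project installs, so that everyone on the team uses the same versions

Upgrade pip: python -m pip install --upgrade pip

1. Install a third-party library: pip install <package> or pip install -r requirements.txt

Install from a mirror index: pip install <package> -i https://pypi.tuna.tsinghua.edu.cn/simple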

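Install a local package (.whl file): pip install <directory>/<file-name> or

pip install --no-index --find-links=wheelhouse/ <package-name>

For example: pip install requests-2.21.0-py2.py3-none-any.whl

(Note: a bare file name like this is looked up in the current working directory, for example C:\Users\Administrator; otherwise give the full path to the .whl file.)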

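Upgrade a package: pip install -U <package-name> or pip install <package-name> --upgrade

For example: pip install urllib3 --upgrade

2. Uninstall a package: pip uninstall <package-name> or pip uninstall -r requirements.txt

For example: pip uninstall requests

3. Show installed packages and their versions: pip freeze

Save them to requirements.txt: pip freeze > requirements.txt

4. List installed packages: pip list

List packages that have newer versions available: pip list -o

5. Show a package's location and details: pip show <package>

For example: pip show requests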

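6. Search for packages: pip search <keyword>

For example, pip search requests used to list packages related to requests. PyPI has since disabled the search API this command relies on, so it now fails; the third-party pip-search package offers a replacement:

pip install pip-search

pip_search requests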

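7. Build wheel packages: pip wheel <package-name>

For example: pip wheel requests

This builds a wheel such as requests-2.21.0-py2.py3-none-any.whl (the version depends on what pip downloads), along with wheels for its dependencies, and saves them in the current directory by default; use -w <dir> to choose another folder.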
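Some of the information that pip freeze and pip show report can also be read from inside a running program with the standard `importlib.metadata` module (Python 3.8+). This is a rough sketch, not a reimplementation of pip: `freeze()` simply lists every installed distribution as `name==version`, sorted case-sensitively, and both helper names are hypothetical.

```python
from importlib import metadata

def installed_version(name):
    """Installed version of a distribution, or None if it is missing."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None

def freeze():
    """Rough equivalent of `pip freeze`: sorted 'name==version' lines."""
    lines = {f"{d.metadata['Name']}=={d.version}" for d in metadata.distributions()}
    return sorted(lines)

print(installed_version("requests"))  # version string if installed, else None
print(freeze()[:5])                   # first few installed distributions
```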

Digital clock

from tkinter import *
from tkinter.ttk import *
from time import strftime

root = Tk()
root.title('Clock')

def time():
    # %I is the 12-hour clock, which matches the AM/PM marker %p
    string = strftime('%I:%M:%S %p')
    lbl.config(text=string)
    lbl.after(1000, time)  # schedule the next refresh in 1000 ms

lbl = Label(root, font=('franklin gothic', 40, 'bold'),
            background='black',
            foreground='white')
lbl.pack(anchor='center')

time()
mainloop()

Progress bar

Read the system time and show what percentage of the current year has already passed.

#1 Read the current time and count the days since January 1 of this year

import datetime

def time_printer():
    current = datetime.datetime.now()
    start = datetime.datetime(current.year, 1, 1)
    days_count = (current - start).days  # whole days elapsed this year
    return days_count

print(time_printer())

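#2 Import the tqdm module (install it with pip install tqdm)

Parameters: ncols is the width of the progress bar

desc: the description shown before the bar

from time import sleep
from tqdm import tqdm

def processingBar():
    days = time_printer()  # compute once instead of on every iteration
    # One step per day; stopping at today makes tqdm's percentage days / 365
    for i in tqdm(range(0, 365), ncols=100, desc="Share of this year elapsed"):
        sleep(0.01)
        if i == days:
            return

processingBar()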
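The bar above approximates the year as 365 days and counts whole days only. If you want the percentage itself, it can be computed directly from `datetime` objects. This is a sketch with a hypothetical `year_progress` helper: dividing one `timedelta` by another yields a float, and measuring up to January 1 of the next year handles leap years automatically.

```python
import datetime

def year_progress(now):
    """Percentage of now's calendar year that has elapsed."""
    start = datetime.datetime(now.year, 1, 1)
    end = datetime.datetime(now.year + 1, 1, 1)  # 365 or 366 days later
    return 100 * (now - start) / (end - start)

# 2024 is a leap year: 183 of its 366 days have passed by July 2
print(f"{year_progress(datetime.datetime(2024, 7, 2)):.2f}%")  # 50.00%
print(f"{year_progress(datetime.datetime.now()):.1f}%")
```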