R cookbook

cookbook-r

Must Watch!

MustWatch

Installing and using packages

Problem

You want to do install and use a package.

Solution

If you are using a GUI for R, there is likely a menu-driven way of installing packages. This is how to do it from the command line:

install.packages('reshape2')

In each new R session where you use the package, you will have to load it:

library(reshape2)

If you use the package in a script, put this line in the script.

To update all your installed packages to the latest versions available:

update.packages()

If you are using R on Linux, some of the R packages may be installed at the system level by the root user, and can’t be updated this way, since you won’t haver permission to overwrite them.

Indexing into a data structure

Problem

You want to get part of a data structure.

Solution

Elements from a vector, matrix, or data frame can be extracted using numeric indexing, or by using a boolean vector of the appropriate length.

In many of the examples, below, there are multiple ways of doing the same thing.

Indexing with numbers and names

With a vector:

# A sample vector

v <- c(1,4,4,3,2,2,3)

v[c(2,3,4)]

#> [1] 4 4 3

v[2:4]

#> [1] 4 4 3

v[c(2,4,3)]

#> [1] 4 3 4

With a data frame:

# Create a sample data frame

data <- read.table(header=T, text='

subject sex size

1 M 7

2 F 6

3 F 9

4 M 11

')

# Get the element at row 1, column 3

data[1,3]

#> [1] 7

data[1,"size"]

#> [1] 7

# Get rows 1 and 2, and all columns

data[1:2, ]

#> subject sex size

#> 1 1 M 7

#> 2 2 F 6

data[c(1,2), ]

#> subject sex size

#> 1 1 M 7

#> 2 2 F 6

# Get rows 1 and 2, and only column 2

data[1:2, 2]

#> [1] M F

#> Levels: F M

data[c(1,2), 2]

#> [1] M F

#> Levels: F M

# Get rows 1 and 2, and only the columns named "sex" and "size"

data[1:2, c("sex","size")]

#> sex size

#> 1 M 7

#> 2 F 6

data[c(1,2), c(2,3)]

#> sex size

#> 1 M 7

#> 2 F 6

Indexing with a boolean vector

With the vector v from above:

v > 2

#> [1] FALSE TRUE TRUE TRUE FALSE FALSE TRUE

v[v>2]

#> [1] 4 4 3 3

v[ c(F,T,T,T,F,F,T)]

#> [1] 4 4 3 3

With the data frame from above:

# A boolean vector

data$subject < 3

#> [1] TRUE TRUE FALSE FALSE

data[data$subject < 3, ]

#> subject sex size

#> 1 1 M 7

#> 2 2 F 6

data[c(TRUE,TRUE,FALSE,FALSE), ]

#> subject sex size

#> 1 1 M 7

#> 2 2 F 6

# It is also possible to get the numeric indices of the TRUEs

which(data$subject < 3)

#> [1] 1 2

Negative indexing

Unlike in some other programming languages, when you use negative numbers for indexing in R, it doesn’t mean to index backward from the end. Instead, it means to drop the element at that index, counting the usual way, from the beginning.

# Here's the vector again.

v

#> [1] 1 4 4 3 2 2 3

# Drop the first element

v[-1]

#> [1] 4 4 3 2 2 3

# Drop first three

v[-1:-3]

#> [1] 3 2 2 3

# Drop just the last element

v[-length(v)]

#> [1] 1 4 4 3 2 2

Getting a subset of a data structure

Problem

You want to do get a subset of the elements of a vector, matrix, or data frame.

Solution

To get a subset based on some conditional criterion, the subset() function or indexing using square brackets can be used. In the examples here, both ways are shown.

# A sample vector

v <- c(1,4,4,3,2,2,3)

subset(v, v<3)

#> [1] 1 2 2

v[v<3]

#> [1] 1 2 2

# Another vector

t <- c("small", "small", "large", "medium")

# Remove "small" entries

subset(t, t!="small")

#> [1] "large" "medium"

t[t!="small"]

#> [1] "large" "medium"

One important difference between the two methods is that you can assign values to elements with square bracket indexing, but you cannot with subset().

v[v<3] <- 9

subset(v, v<3) <- 9

#> Error in subset(v, v < 3) <- 9: could not find function "subset<-"

With data frames:

# A sample data frame

data <- read.table(header=T, text='

subject sex size

1 M 7

2 F 6

3 F 9

4 M 11

')

subset(data, subject < 3)

#> subject sex size

#> 1 1 M 7

#> 2 2 F 6

data[data$subject < 3, ]

#> subject sex size

#> 1 1 M 7

#> 2 2 F 6

# Subset of particular rows and columns

subset(data, subject < 3, select = -subject)

#> sex size

#> 1 M 7

#> 2 F 6

subset(data, subject < 3, select = c(sex,size))

#> sex size

#> 1 M 7

#> 2 F 6

subset(data, subject < 3, select = sex:size)

#> sex size

#> 1 M 7

#> 2 F 6

data[data$subject < 3, c("sex","size")]

#> sex size

#> 1 M 7

#> 2 F 6

# Logical AND of two conditions

subset(data, subject < 3 & sex=="M")

#> subject sex size

#> 1 1 M 7

data[data$subject < 3 & data$sex=="M", ]

#> subject sex size

#> 1 1 M 7

# Logical OR of two conditions

subset(data, subject < 3 | sex=="M")

#> subject sex size

#> 1 1 M 7

#> 2 2 F 6

#> 4 4 M 11

data[data$subject < 3 | data$sex=="M", ]

#> subject sex size

#> 1 1 M 7

#> 2 2 F 6

#> 4 4 M 11

# Condition based on transformed data

subset(data, log2(size) > 3 )

#> subject sex size

#> 3 3 F 9

#> 4 4 M 11

data[log2(data$size) > 3, ]

#> subject sex size

#> 3 3 F 9

#> 4 4 M 11

# Subset if elements are in another vector

subset(data, subject %in% c(1,3))

#> subject sex size

#> 1 1 M 7

#> 3 3 F 9

data[data$subject %in% c(1,3), ]

#> subject sex size

#> 1 1 M 7

#> 3 3 F 9

Making a vector filled with values

Problem

You want to create a vector with values already filled in.

Solution

rep(1, 50)

# [1] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

# [39] 1 1 1 1 1 1 1 1 1 1 1 1

rep(F, 20)

# [1] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

# [13] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

rep(1:5, 4)

# 1 2 3 4 5 1 2 3 4 5 1 2 3 4 5 1 2 3 4 5

rep(1:5, each=4)

# 1 1 1 1 2 2 2 2 3 3 3 3 4 4 4 4 5 5 5 5

# Use it on a factor

rep(factor(LETTERS[1:3]), 5)

# A B C A B C A B C A B C A B C

# Levels: A B C

Information about variables

Problem

You want to find information about variables.

Solution

Here are some sample variables to work with in the examples below:

x <- 6

n <- 1:4

let <- LETTERS[1:4]

df <- data.frame(n, let)

Information about existence

# List currently defined variables

ls()

#> [1] "df" "filename" "let" "n" "old_dir" "x"

# Check if a variable named "x" exists

exists("x")

#> [1] TRUE

# Check if "y" exists

exists("y")

#> [1] FALSE

# Delete variable x

rm(x)

x

#> Error in eval(expr, envir, enclos): object 'x' not found

Information about size/structure

# Get information about structure

str(n)

#> int [1:4] 1 2 3 4

str(df)

#> 'data.frame': 4 obs. of 2 variables:

#> $ n : int 1 2 3 4

#> $ let: Factor w/ 4 levels "A","B","C","D": 1 2 3 4

# Get the length of a vector

length(n)

#> [1] 4

# Length probably doesn't give us what we want here:

length(df)

#> [1] 2

# Number of rows

nrow(df)

#> [1] 4

# Number of columns

ncol(df)

#> [1] 2

# Get rows and columns

dim(df)

#> [1] 4 2

Working with NULL, NA, and NaN

Problem

You want to properly handle NULL, NA, or NaN values.

Solution

Sometimes your data will include NULL, NA, or NaN. These work somewhat differently from “normal” values, and may require explicit testing.

Here are some examples of comparisons with these values:

x <- NULL

x > 5

# logical(0)

y <- NA

y > 5

# NA

z <- NaN

z > 5

# NA

Here’s how to test whether a variable has one of these values:

is.null(x)

# TRUE

is.na(y)

# TRUE

is.nan(z)

# TRUE

Note that NULL is different from the other two. NULL means that there is no value, while NA and NaN mean that there is some value, although one that is perhaps not usable. Here’s an illustration of the difference:

# Is y null?

is.null(y)

# FALSE

# Is x NA?

is.na(x)

# logical(0)

# Warning message:

# In is.na(x) : is.na() applied to non-(list or vector) of type 'NULL'

In the first case, it checks if y is NULL, and the answer is no. In the second case, it tries to check if x is `NA, but there is no value to be checked.

Ignoring “bad” values in vector summary functions

If you run functions like mean() or sum() on a vector containing NA or NaN, they will return NA and NaN, which is generally unhelpful, though this will alert you to the presence of the bad value. Many of these functions take the flag na.rm, which tells them to ignore these values.

vy <- c(1, 2, 3, NA, 5)

# 1 2 3 NA 5

mean(vy)

# NA

mean(vy, na.rm=TRUE)

# 2.75

vz <- c(1, 2, 3, NaN, 5)

# 1 2 3 NaN 5

sum(vz)

# NaN

sum(vz, na.rm=TRUE)

# 11

# NULL isn't a problem, because it doesn't exist

vx <- c(1, 2, 3, NULL, 5)

# 1 2 3 5

sum(vx)

# 11

Removing bad values from a vector

These values can be removed from a vector by filtering using is.na() or is.nan().

vy

# 1 2 3 NA 5

vy[ !is.na(vy) ]

# 1 2 3 5

vz

# 1 2 3 NaN 5

vz[ !is.nan(vz) ]

# 1 2 3 5

Notes

There are also the infinite numerical values Inf and -Inf, and the associated functions is.finite() and is.infinite().

Generating random numbers

Problem

You want to generate random numbers.

Solution

For uniformly distributed (flat) random numbers, use runif(). By default, its range is from 0 to 1.

runif(1)

#> [1] 0.09006613

# Get a vector of 4 numbers

runif(4)

#> [1] 0.6972299 0.9505426 0.8297167 0.9779939

# Get a vector of 3 numbers from 0 to 100

runif(3, min=0, max=100)

#> [1] 83.702278 3.062253 5.388360

# Get 3 integers from 0 to 100

# Use max=101 because it will never actually equal 101

floor(runif(3, min=0, max=101))

#> [1] 11 67 1

# This will do the same thing

sample(1:100, 3, replace=TRUE)

#> [1] 8 63 64

# To generate integers WITHOUT replacement:

sample(1:100, 3, replace=FALSE)

#> [1] 76 25 52

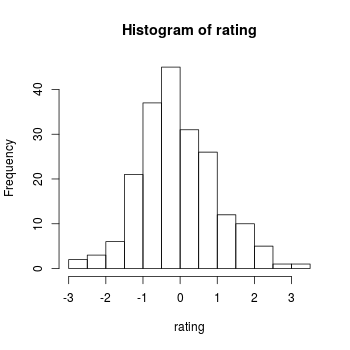

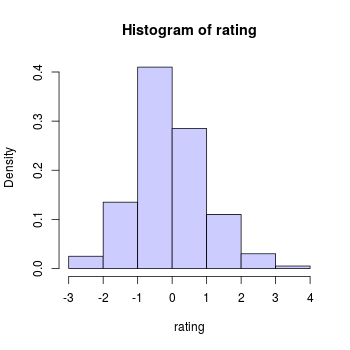

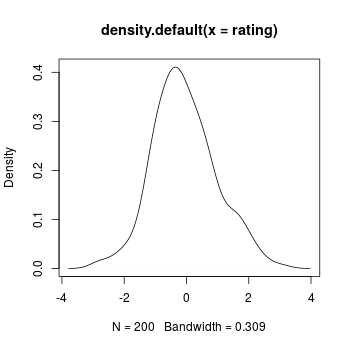

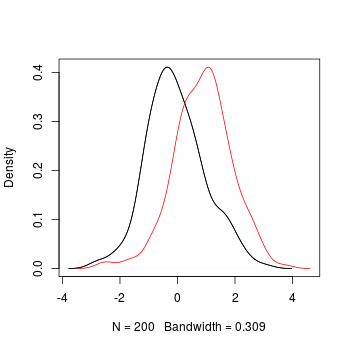

To generate numbers from a normal distribution, use rnorm(). By default the mean is 0 and the standard deviation is 1.

rnorm(4)

#> [1] -2.3308287 -0.9073857 -0.7638332 -0.2193786

# Use a different mean and standard deviation

rnorm(4, mean=50, sd=10)

#> [1] 59.20927 40.12440 44.58840 41.97056

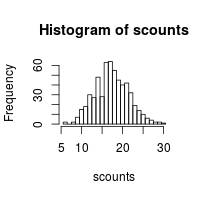

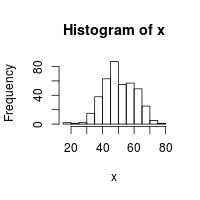

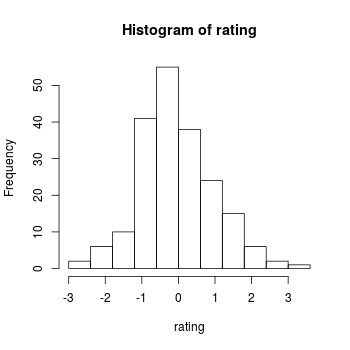

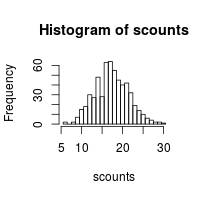

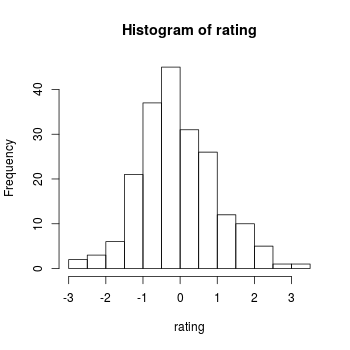

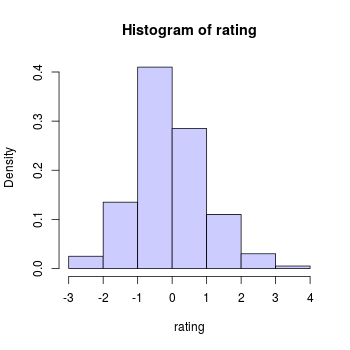

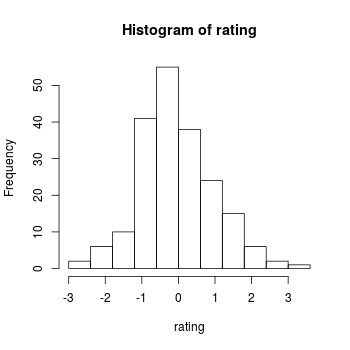

# To check that the distribution looks right, make a histogram of the numbers

x <- rnorm(400, mean=50, sd=10)

hist(x)

Generating repeatable sequences of random numbers

Problem

You want to generate a sequence of random numbers, and then generate that same sequence again later.

Solution

Use set.seed(), and pass in a number as the seed.

set.seed(423)

runif(3)

#> [1] 0.1089715 0.5973455 0.9726307

set.seed(423)

runif(3)

#> [1] 0.1089715 0.5973455 0.9726307

Saving the state of the random number generator

Problem

You want to save and restore the state of the random number generator

Solution

Save .Random.seed to another variable and then later restore that value to .Random.seed.

# For this example, set the random seed

set.seed(423)

runif(3)

#> [1] 0.1089715 0.5973455 0.9726307

# Save the seed

oldseed <- .Random.seed

runif(3)

#> [1] 0.7973768 0.2278427 0.5189830

# Do some other stuff with RNG here, such as:

# runif(30)

# ...

# Restore the seed

.Random.seed <- oldseed

# Get the same random numbers as before, after saving the seed

runif(3)

#> [1] 0.7973768 0.2278427 0.5189830

If no random number generator has been used in your R session, the variable .Random.seed will not exist. If you cannot be certain that an RNG has been used before attempting to save, the seed, you should check for it before saving and restoring:

oldseed <- NULL

if (exists(".Random.seed"))

oldseed <- .Random.seed

# Do some other stuff with RNG here, such as:

# runif(30)

# ...

if (!is.null(oldseed))

.Random.seed <- oldseed

Saving and restoring the state of the RNG in functions

If you attempt to restore the state of the random number generator within a function by using .Random.seed <- x, it will not work, because this operation changes a local variable named .Random.seed, instead of the variable in the global envrionment.

Here are two examples. What these functions are supposed to do is generate some random numbers, while leaving the state of the RNG unchanged.

# This is the bad version

bad_rand_restore <- function() {

if (exists(".Random.seed"))

oldseed <- .Random.seed

else

oldseed <- NULL

print(runif(3))

if (!is.null(oldseed))

.Random.seed <- oldseed

else

rm(".Random.seed")

}

# This is the good version

rand_restore <- function() {

if (exists(".Random.seed", .GlobalEnv))

oldseed <- .GlobalEnv$.Random.seed

else

oldseed <- NULL

print(runif(3))

if (!is.null(oldseed))

.GlobalEnv$.Random.seed <- oldseed

else

rm(".Random.seed", envir = .GlobalEnv)

}

# The bad version doesn't properly reset the RNG state, so random numbers keep

# changing.

set.seed(423)

bad_rand_restore()

#> [1] 0.1089715 0.5973455 0.9726307

bad_rand_restore()

#> [1] 0.7973768 0.2278427 0.5189830

bad_rand_restore()

#> [1] 0.6929255 0.8104453 0.1019465

# The good version correctly resets the RNG state each time, so random numbers

# stay the same.

set.seed(423)

rand_restore()

#> [1] 0.1089715 0.5973455 0.9726307

rand_restore()

#> [1] 0.1089715 0.5973455 0.9726307

rand_restore()

#> [1] 0.1089715 0.5973455 0.9726307

Notes

.Random.seed should not be modified by the user.

Rounding numbers

Problem

You want to round numbers.

Solution

There are many ways of rounding: to the nearest integer, up, down, or toward zero.

x <- seq(-2.5, 2.5, by=.5)

# Round to nearest, with .5 values rounded to even number.

round(x)

#> [1] -2 -2 -2 -1 0 0 0 1 2 2 2

# Round up

ceiling(x)

#> [1] -2 -2 -1 -1 0 0 1 1 2 2 3

# Round down

floor(x)

#> [1] -3 -2 -2 -1 -1 0 0 1 1 2 2

# Round toward zero

trunc(x)

#> [1] -2 -2 -1 -1 0 0 0 1 1 2 2

It is also possible to round to other decimal places:

x <- c(.001, .07, 1.2, 44.02, 738, 9927)

# Round to one decimal place

round(x, digits=1)

#> [1] 0.0 0.1 1.2 44.0 738.0 9927.0

# Round to tens place

round(x, digits=-1)

#> [1] 0 0 0 40 740 9930

# Round to nearest 5

round(x/5)*5

#> [1] 0 0 0 45 740 9925

# Round to nearest .02

round(x/.02)*.02

#> [1] 0.00 0.08 1.20 44.02 738.00 9927.00

Comparing floating point numbers

Problem

Comparing floating point numbers does not always work as you expect. For example:

0.3 == 3*.1

#> [1] FALSE

(0.1 + 0.1 + 0.1) - 0.3

#> [1] 5.551115e-17

x <- seq(0, 1, by=.1)

x

#> [1] 0.0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1.0

10*x - round(10*x)

#> [1] 0.000000e+00 0.000000e+00 0.000000e+00 4.440892e-16 0.000000e+00 0.000000e+00

#> [7] 8.881784e-16 8.881784e-16 0.000000e+00 0.000000e+00 0.000000e+00

Solution

There is no universal solution, because this issue is inherent to the storage format for non-integer (floating point) numbers, in R and computers in general.

See this page for more information and workarounds:

http://www.mathworks.com/support/tech-notes/1100/1108.html. This is written for Matlab, but much of it will be relevant for R as well.

Creating strings from variables

Problem

You want to do create a string from variables.

Solution

The two common ways of creating strings from variables are the paste function and the sprintf function. paste is more useful for vectors, and sprintf is more useful for precise control of the output.

Using paste()

a <- "apple"

b <- "banana"

# Put a and b together, with a space in between:

paste(a, b)

#> [1] "apple banana"

# With no space, use sep="", or use paste0():

paste(a, b, sep="")

#> [1] "applebanana"

paste0(a, b)

#> [1] "applebanana"

# With a comma and space:

paste(a, b, sep=", ")

#> [1] "apple, banana"

# With a vector

d <- c("fig", "grapefruit", "honeydew")

# If the input is a vector, use collapse to put the elements together:

paste(d, collapse=", ")

#> [1] "fig, grapefruit, honeydew"

# If the input is a scalar and a vector, it puts the scalar with each

# element of the vector, and returns a vector:

paste(a, d)

#> [1] "apple fig" "apple grapefruit" "apple honeydew"

# Use sep and collapse:

paste(a, d, sep="-", collapse=", ")

#> [1] "apple-fig, apple-grapefruit, apple-honeydew"

Using sprintf()

Another way is to use sprintf function. This is derived from the function of the same name in the C programming language.

To substitute in a string or string variable, use %s:

a <- "string"

sprintf("This is where a %s goes.", a)

#> [1] "This is where a string goes."

For integers, use %d or a variant:

x <- 8

sprintf("Regular:%d", x)

#> [1] "Regular:8"

# Can print to take some number of characters, leading with spaces.

sprintf("Leading spaces:%4d", x)

#> [1] "Leading spaces: 8"

# Can also lead with zeros instead.

sprintf("Leading zeros:%04d", x)

#> [1] "Leading zeros:0008"

For floating-point numbers, use %f for standard notation, and %e or %E for exponential notation. You can also use %g or %G for a “smart” formatter that automatically switches between the two formats, depending on where the significant digits are. The following examples are taken from the R help page for sprintf:

sprintf("%f", pi) # "3.141593"

sprintf("%.3f", pi) # "3.142"

sprintf("%1.0f", pi) # "3"

sprintf("%5.1f", pi) # " 3.1"

sprintf("%05.1f", pi) # "003.1"

sprintf("%+f", pi) # "+3.141593"

sprintf("% f", pi) # " 3.141593"

sprintf("%-10f", pi) # "3.141593 " (left justified)

sprintf("%e", pi) #"3.141593e+00"

sprintf("%E", pi) # "3.141593E+00"

sprintf("%g", pi) # "3.14159"

sprintf("%g", 1e6 * pi) # "3.14159e+06" (exponential)

sprintf("%.9g", 1e6 * pi) # "3141592.65" ("fixed")

sprintf("%G", 1e-6 * pi) # "3.14159E-06"

In the %m.nf format specification: The m represents the field width, which is the minimum number of characters in the output string, and can be padded with leading spaces, or zeros if there is a zero in front of m. The n represents precision, which the number of digits after the decimal.

Other miscellaneous things:

x <- "string"

sprintf("Substitute in multiple strings: %s %s", x, "string2")

#> [1] "Substitute in multiple strings: string string2"

# To print a percent sign, use "%%"

sprintf("A single percent sign here %%")

#> [1] "A single percent sign here %"

Creating a formula from a string

Problem

You want to create a formula from a string.

Solution

It can be useful to create a formula from a string. This often occurs in functions where the formula arguments are passed in as strings.

In the most basic case, use as.formula():

# This returns a string:

"y ~ x1 + x2"

#> [1] "y ~ x1 + x2"

# This returns a formula:

as.formula("y ~ x1 + x2")

#> y ~ x1 + x2

#> <environment: 0x3361710>

Here is an example of how it might be used:

# These are the variable names:

measurevar <- "y"

groupvars <- c("x1","x2","x3")

# This creates the appropriate string:

paste(measurevar, paste(groupvars, collapse=" + "), sep=" ~ ")

#> [1] "y ~ x1 + x2 + x3"

# This returns the formula:

as.formula(paste(measurevar, paste(groupvars, collapse=" + "), sep=" ~ "))

#> y ~ x1 + x2 + x3

#> <environment: 0x3361710>

Extracting components from a formula

Problem

You want to extract parts of a formula for further use.

Solution

You can index into the formula object as though it were a list, using the [[ operator.

f <- y ~ x1 + x2

# Take a look at f

str(f)

#> Class 'formula' language y ~ x1 + x2

#> ..- attr(*, ".Environment")=<environment: 0x1e46710>

# Get each part

f[[1]]

#> `~`

f[[2]]

#> y

f[[3]]

#> x1 + x2

# Or view the whole thing as a list

as.list(f)

#> [[1]]

#> `~`

#>

#> [[2]]

#> y

#>

#> [[3]]

#> x1 + x2

#>

#> <environment: 0x1e46710>

For formulas that have nothing on the left side, there are only two elements:

f2 <- ~ x1 + x2

as.list(f2)

#> [[1]]

#> `~`

#>

#> [[2]]

#> x1 + x2

#>

#> <environment: 0x1e46710>

Each of the elements of the formula is an symbol or language object (which consists of multiple symbols:

str(f[[1]])

#> symbol ~

str(f[[2]])

#> symbol y

str(f[[3]])

#> language x1 + x2

# Look at parts of the langage object

str(f[[3]][[1]])

#> symbol +

str(f[[3]][[2]])

#> symbol x1

str(f[[3]][[3]])

#> symbol x2

You can use as.character() or deparse() to convert any of these to strings. deparse() can give a more natural-looking result:

as.character(f[[1]])

#> [1] "~"

as.character(f[[2]])

#> [1] "y"

# The language object gets coerced into a string that represents the parse tree:

as.character(f[[3]])

#> [1] "+" "x1" "x2"

# You can use deparse() to get a more natural looking string

deparse(f[[3]])

#> [1] "x1 + x2"

deparse(f)

#> [1] "y ~ x1 + x2"

The formula object also captures the environment in which it was called, as we saw earlier when we ran str(f). To extract it, use environment():

environment(f)

#> <environment: 0x1e46710>

Loading data from a file

Problem

You want to load data from a file.

Solution

Delimited text files

The simplest way to import data is to save it as a text file with delimiters such as tabs or commas (CSV).

data <- read.csv("datafile.csv")

# Load a CSV file that doesn't have headers

data <- read.csv("datafile-noheader.csv", header=FALSE)

The function read.table() is a more general function which allows you to set the delimiter, whether or not there are headers, whether strings are set off with quotes, and more. See ?read.table for more information on the details.

data <- read.table("datafile-noheader.csv",

header=FALSE,

sep="," # use "\t" for tab-delimited files

)

Loading a file with a file chooser

On some platforms, using file.choose() will open a file chooser dialog window. On others, it will simply prompt the user to type in a filename.

data <- read.csv(file.choose())

Treating strings as factors or characters

By default, strings in the data are converted to factors. If you load the data below with read.csv, then all the text columns will be treated as factors, even though it might make more sense to treat some of them as strings. To do

this, use stringsAsFactors=FALSE:

data <- read.csv("datafile.csv", stringsAsFactors=FALSE)

# You might have to convert some columns to factors

data$Sex <- factor(data$Sex)

Another alternative is to load them as factors and convert some columns to

characters:

data <- read.csv("datafile.csv")

data$First <- as.character(data$First)

data$Last <- as.character(data$Last)

# Another method: convert columns named "First" and "Last"

stringcols <- c("First","Last")

data[stringcols] <- lapply(data[stringcols], as.character)

Loading a file from the Internet

Data can also be loaded from a URL. These (very long) URLs will load the files linked to below.

data <- read.csv("http://www.cookbook-r.com/Data_input_and_output/Loading_data_from_a_file/datafile.csv")

# Read in a CSV file without headers

data <- read.csv("http://www.cookbook-r.com/Data_input_and_output/Loading_data_from_a_file/datafile-noheader.csv", header=FALSE)

# Manually assign the header names

names(data) <- c("First","Last","Sex","Number")

The data files used above:

datafile.csv:

"First","Last","Sex","Number"

"Currer","Bell","F",2

"Dr.","Seuss","M",49

"","Student",NA,21

datafile-noheader.csv:

"Currer","Bell","F",2

"Dr.","Seuss","M",49

"","Student",NA,21

Fixed-width text files

Suppose your data has fixed-width columns, like this:

First Last Sex Number

Currer Bell F 2

Dr. Seuss M 49

"" Student NA 21

One way to read it in is to simply use read.table() with strip.white=TRUE, which will remove extra spaces.

read.table("clipboard", header=TRUE, strip.white=TRUE)

However, your data file may have columns containing spaces, or columns with no spaces separating them, like this, where the scores column represents six different measurements, each from 0 to 3.

subject sex scores

N 1 M 113311

NE 2 F 112231

S 3 F 111221

W 4 M 011002

In this case, you may need to use the read.fwf() function. If you read the column names from the file, it requires that they be separated with a delimiter like a single tab, space, or comma. If they are separated with multiple spaces, as in this example, you will have to assign the column names directly.

# Assign the column names manually

read.fwf("myfile.txt",

c(7,5,-2,1,1,1,1,1,1), # Width of the columns. -2 means drop those columns

skip=1, # Skip the first line (contains header here)

col.names=c("subject","sex","s1","s2","s3","s4","s5","s6"),

strip.white=TRUE) # Strip out leading and trailing whitespace when reading each

#> subject sex s1 s2 s3 s4 s5 s6

#> 1 N 1 M 1 1 3 3 1 1

#> 2 NE 2 F 1 1 2 2 3 1

#> 3 S 3 F 1 1 1 2 2 1

#> 4 W 4 M 0 1 1 0 0 2

# subject sex s1 s2 s3 s4 s5 s6

# N 1 M 1 1 3 3 1 1

# NE 2 F 1 1 2 2 3 1

# S 3 F 1 1 1 2 2 1

# W 4 M 0 1 1 0 0 2

# If the first row looked like this:

# subject,sex,scores

# Then we could use header=TRUE:

read.fwf("myfile.txt", c(7,5,-2,1,1,1,1,1,1), header=TRUE, strip.white=TRUE)

#> Error in read.table(file = FILE, header = header, sep = sep, row.names = row.names, : more columns than column names

Excel files

The read.xls function in the gdata package can read in Excel files.

library(gdata)

data <- read.xls("data.xls")

See http://cran.r-project.org/doc/manuals/R-data.html#Reading-Excel-spreadsheets.

SPSS data files

The read.spss function in the foreign package can read in SPSS files.

library(foreign)

data <- read.spss("data.sav", to.data.frame=TRUE)

Loading and storing data with the keyboard and clipboard

Problem

You want to enter data using input from the keyboard (not a file).

Solution

Data input

Suppose this is your data:

size weight cost

small 5 6

medium 8 10

large 11 9

Loading data from keyboard input or clipboard

One way enter from the keyboard is to read from standard input (stdin()).

# Cutting and pasting using read.table and stdin()

data <- read.table(stdin(), header=TRUE)

# You will be prompted for input; copy and paste text here

# Or:

# data <- read.csv(stdin())

You can also load directly from the clipboard:

# First copy the data to the clipboard

data <- read.table('clipboard', header=TRUE)

# Or:

# data <- read.csv('clipboard')

Loading data in a script

The previous method can’t be used to load data in a script file because the input must be typed (or pasted) after running the command.

# Using read.table()

data <- read.table(header=TRUE, text='

size weight cost

small 5 6

medium 8 10

large 11 9

')

For different data formats (e.g., comma-delimited, no headers, etc.), options to read.table() can be set. See ../Loading data from a file for more information.

Data output

By default, R prints row names. If you want to print the table in a format that can be copied and pasted, it may be useful to suppress them.

print(data, row.names=FALSE)

#> size weight cost

#> small 5 6

#> medium 8 10

#> large 11 9

Writing data for copying and pasting, or to the clipboard

It is possible to write delimited data to terminal (stdout()), so that it can be copied and pasted elsewhere. Or it can be written directly to the clipboard.

write.csv(data, stdout(), row.names=FALSE)

# "size","weight","cost"

# "small",5,6

# "medium",8,10

# "large",11,9

# Write to the clipboard (does not work on Mac or Unix)

write.csv(data, 'clipboard', row.names=FALSE)

Output for loading in R

If the data has already been loaded into R, the data structure can be saved using dput(). The output from dput() is a command which will recreate the data structure. The advantage of this method is that it will keep any modifications to data types; for example, if one column consists of numbers and you have converted it to a factor, this method will preserve that type, whereas simply loading the text table (as shown above) will treat it as numeric.

# Suppose you have already loaded data

dput(data)

#> structure(list(size = structure(c(3L, 2L, 1L), .Label = c("large",

#> "medium", "small"), class = "factor"), weight = c(5L, 8L, 11L

#> ), cost = c(6L, 10L, 9L)), .Names = c("size", "weight", "cost"

#> ), class = "data.frame", row.names = c(NA, -3L))

# Later, we can use the output from dput to recreate the data structure

newdata <- structure(list(size = structure(c(3L, 2L, 1L), .Label = c("large",

"medium", "small"), class = "factor"), weight = c(5L, 8L, 11L

), cost = c(6L, 10L, 9L)), .Names = c("size", "weight", "cost"

), class = "data.frame", row.names = c(NA, -3L))

Running a script

Problem

You want to run R code from a text file.

Solution

Use the source() function.

# First, go to the proper directory

setwd('/home/username/desktop/rcode')

source('analyze.r')

Note that if you want your script to produce text output, you must use the print() or cat() function.

x <- 1:10

# In a script, this will do nothing

x

# Use the print function:

print(x)

#> [1] 1 2 3 4 5 6 7 8 9 10

# Simpler output: no row/column numbers, no text wrapping

cat(x)

#> 1 2 3 4 5 6 7 8 9 10

Another alternative is to run source() with the print.eval=TRUE option.

Writing data to a file

Problem

You want to write data to a file.

Solution

Writing to a delimited text file

The easiest way to do this is to use write.csv(). By default, write.csv() includes row names, but these are usually unnecessary and may cause confusion.

# A sample data frame

data <- read.table(header=TRUE, text='

subject sex size

1 M 7

2 F NA

3 F 9

4 M 11

')

# Write to a file, suppress row names

write.csv(data, "data.csv", row.names=FALSE)

# Same, except that instead of "NA", output blank cells

write.csv(data, "data.csv", row.names=FALSE, na="")

# Use tabs, suppress row names and column names

write.table(data, "data.csv", sep="\t", row.names=FALSE, col.names=FALSE)

Saving in R data format

write.csv() and write.table() are best for interoperability with other data analysis programs. They will not, however, preserve special attributes of the data structures, such as whether a column is a character type or factor, or the order of levels in factors. In order to do that, it should be written out in a special format for R.

Below are are three primary ways of doing this:

The first method is to output R source code which, when run, will re-create the object. This should work for most data objects, but it may not be able to faithfully re-create some more complicated data objects.

# Save in a text format that can be easily loaded in R

dump("data", "data.Rdmpd")

# Can save multiple objects:

dump(c("data", "data1"), "data.Rdmpd")

# To load the data again:

source("data.Rdmpd")

# When loaded, the original data names will automatically be used.

The next method is to write out individual data objects in RDS format. This format can be binary or ASCII. Binary is more compact, while ASCII will be more efficient with version control systems like Git.

# Save a single object in binary RDS format

saveRDS(data, "data.rds")

# Or, using ASCII format

saveRDS(data, "data.rds", ascii=TRUE)

# To load the data again:

data <- readRDS("data.rds")

It’s also possible to save multiple objects into an single file, using the RData format.

# Saving multiple objects in binary RData format

save(data, file="data.RData")

# Or, using ASCII format

save(data, file="data.RData", ascii=TRUE)

# Can save multiple objects

save(data, data1, file="data.RData")

# To load the data again:

load("data.RData")

An important difference between saveRDS() and save() is that, with the former, when you readRDS() the data, you specify the name of the object, and with the latter, when you load() the data, the original object names are automatically used. Automatically using the original object names can sometimes simplify a workflow, but it can also be a drawback if the data object is meant to be distributed to others for use in a different environment.

Writing text and output from analyses to a file

Problem

You want to write output to a file.

Solution

The sink() function will redirect output to a file instead of to the R terminal. Note that if you use sink() in a script and it crashes before output is returned to the terminal, then you will not see any response to your commands. Call sink() without any arguments to return output to the terminal.

# Start writing to an output file

sink('analysis-output.txt')

set.seed(12345)

x <-rnorm(10,10,1)

y <-rnorm(10,11,1)

# Do some stuff here

cat(sprintf("x has %d elements:\n", length(x)))

print(x)

cat("y =", y, "\n")

cat("=============================\n")

cat("T-test between x and y\n")

cat("=============================\n")

t.test(x,y)

# Stop writing to the file

sink()

# Append to the file

sink('analysis-output.txt', append=TRUE)

cat("Some more stuff here...\n")

sink()

The contents of the output file:

x has 10 elements:

[1] 10.585529 10.709466 9.890697 9.546503 10.605887 8.182044 10.630099 9.723816

[9] 9.715840 9.080678

y = 10.88375 12.81731 11.37063 11.52022 10.24947 11.8169 10.11364 10.66842 12.12071 11.29872

=============================

T-test between x and y

=============================

Welch Two Sample t-test

data: x and y

t = -3.8326, df = 17.979, p-value = 0.001222

alternative hypothesis: true difference in means is not equal to 0

95 percent confidence interval:

-2.196802 -0.641042

sample estimates:

mean of x mean of y

9.867056 11.285978

Some more stuff here...

Sorting

Problem

You want to sort a vector, matrix, or data frame.

Solution

Vectors

# Make up a randomly ordered vector

v <- sample(101:110)

# Sort the vector

sort(v)

#> [1] 101 102 103 104 105 106 107 108 109 110

# Reverse sort

sort(v, decreasing=TRUE)

#> [1] 110 109 108 107 106 105 104 103 102 101

Data frames

To sort a data frame on one or more columns, you can use the arrange function from plyr package, or use R’s built-in functions. The arrange function is much easier to use, but does require the external package to be installed.

# Make a data frame

df <- data.frame (id=1:4,

weight=c(20,27,24,22),

size=c("small", "large", "medium", "large"))

df

library(plyr)

# Sort by weight column. These have the same result.

arrange(df, weight) # Use arrange from plyr package

df[ order(df$weight), ] # Use built-in R functions

#> id weight size

#> 1 1 20 small

#> 2 4 22 large

#> 3 3 24 medium

#> 4 2 27 large

# Sort by size, then by weight

arrange(df, size, weight) # Use arrange from plyr package

df[ order(df$size, df$weight), ] # Use built-in R functions

#> id weight size

#> 4 4 22 large

#> 2 2 27 large

#> 3 3 24 medium

#> 1 1 20 small

# Sort by all columns in the data frame, from left to right

df[ do.call(order, as.list(df)), ]

# In this particular example, the order will be unchanged

Note that the size column is a factor and is sorted by the order of the factor levels. In this case, the levels were automatically assigned alphabetically (when creating the data frame), so large is first and small is last.

Reverse sort

The overall order of the sort can be reversed with the argument decreasing=TRUE.

To reverse the direction of a particular column, the method depends on the data type:

- Numbers: put a

- in front of the variable name, e.g. df[ order(-df$weight), ].

- Factors: convert to integer and put a

- in front of the variable name, e.g. df[ order(-xtfrm(df$size)), ].

- Characters: there isn’t a simple way to do this. One method is to convert to a factor first and then sort as above.

# Reverse sort by weight column. These all have the same effect:

arrange(df, -weight) # Use arrange from plyr package

df[ order(df$weight, decreasing=TRUE), ] # Use built-in R functions

df[ order(-df$weight), ] # Use built-in R functions

#> id weight size

#> 2 2 27 large

#> 3 3 24 medium

#> 4 4 22 large

#> 1 1 20 small

# Sort by size (increasing), then by weight (decreasing)

arrange(df, size, -weight) # Use arrange from plyr package

df[ order(df$size, -df$weight), ] # Use built-in R functions

#> id weight size

#> 2 2 27 large

#> 4 4 22 large

#> 3 3 24 medium

#> 1 1 20 small

# Sort by size (decreasing), then by weight (increasing)

# The call to xtfrm() is needed for factors

arrange(df, -xtfrm(size), weight) # Use arrange from plyr package

df[ order(-xtfrm(df$size), df$weight), ] # Use built-in R functions

#> id weight size

#> 1 1 20 small

#> 3 3 24 medium

#> 4 4 22 large

#> 2 2 27 large

Randomizing order

Problem

You want to randomize the order of a data structure.

Solution

# Create a vector

v <- 11:20

# Randomize the order of the vector

v <- sample(v)

# Create a data frame

data <- data.frame(label=letters[1:5], number=11:15)

data

#> label number

#> 1 a 11

#> 2 b 12

#> 3 c 13

#> 4 d 14

#> 5 e 15

# Randomize the order of the data frame

data <- data[sample(1:nrow(data)), ]

data

#> label number

#> 5 e 15

#> 2 b 12

#> 4 d 14

#> 3 c 13

#> 1 a 11

Notes

To make a randomization repeatable, you should set the seed for the random number generator.

Converting between vector types

Problem

You want to convert between numeric vectors, character vectors, and factors.

Solution

Suppose you start with this numeric vector n:

n <- 10:14

n

#> [1] 10 11 12 13 14

To convert the numeric vector to the other two types (we’ll also save these results in c and f):

# Numeric to Character

c <- as.character(n)

# Numeric to Factor

f <- factor(n)

# 10 11 12 13 14

To convert the character vector to the other two:

# Character to Numeric

as.numeric(c)

#> [1] 10 11 12 13 14

# Character to Factor

factor(c)

#> [1] 10 11 12 13 14

#> Levels: 10 11 12 13 14

Converting a factor to a character vector is straightforward:

# Factor to Character

as.character(f)

#> [1] "10" "11" "12" "13" "14"

However, converting a factor to a numeric vector is a little trickier. If you just convert it with as.numeric, it will give you the numeric coding of the factor, which probably isn’t what you want.

as.numeric(f)

#> [1] 1 2 3 4 5

# Another way to get the numeric coding, if that's what you want:

unclass(f)

#> [1] 1 2 3 4 5

#> attr(,"levels")

#> [1] "10" "11" "12" "13" "14"

The way to get the text values converted to numbers is to first convert it to a character, then a numeric vector.

# Factor to Numeric

as.numeric(as.character(f))

#> [1] 10 11 12 13 14

Finding and removing duplicate records

Problem

You want to find and/or remove duplicate entries from a vector or data frame.

Solution

With vectors:

# Generate a vector

set.seed(158)

x <- round(rnorm(20, 10, 5))

x

#> [1] 14 11 8 4 12 5 10 10 3 3 11 6 0 16 8 10 8 5 6 6

# For each element: is this one a duplicate (first instance of a particular value

# not counted)

duplicated(x)

#> [1] FALSE FALSE FALSE FALSE FALSE FALSE FALSE TRUE FALSE TRUE TRUE FALSE FALSE FALSE

#> [15] TRUE TRUE TRUE TRUE TRUE TRUE

# The values of the duplicated entries

# Note that '6' appears in the original vector three times, and so it has two

# entries here.

x[duplicated(x)]

#> [1] 10 3 11 8 10 8 5 6 6

# Duplicated entries, without repeats

unique(x[duplicated(x)])

#> [1] 10 3 11 8 5 6

# The original vector with all duplicates removed. These do the same:

unique(x)

#> [1] 14 11 8 4 12 5 10 3 6 0 16

x[!duplicated(x)]

#> [1] 14 11 8 4 12 5 10 3 6 0 16

With data frames:

# A sample data frame:

df <- read.table(header=TRUE, text='

label value

A 4

B 3

C 6

B 3

B 1

A 2

A 4

A 4

')

# Is each row a repeat?

duplicated(df)

#> [1] FALSE FALSE FALSE TRUE FALSE FALSE TRUE TRUE

# Show the repeat entries

df[duplicated(df),]

#> label value

#> 4 B 3

#> 7 A 4

#> 8 A 4

# Show unique repeat entries (row names may differ, but values are the same)

unique(df[duplicated(df),])

#> label value

#> 4 B 3

#> 7 A 4

# Original data with repeats removed. These do the same:

unique(df)

#> label value

#> 1 A 4

#> 2 B 3

#> 3 C 6

#> 5 B 1

#> 6 A 2

df[!duplicated(df),]

#> label value

#> 1 A 4

#> 2 B 3

#> 3 C 6

#> 5 B 1

#> 6 A 2

Comparing vectors or factors with NA

Problem

You want to compare two vectors or factors but want comparisons with NA’s to be reported as TRUE or FALSE (instead of NA).

Solution

Suppose you have this data frame with two columns which consist of boolean vectors:

df <- data.frame( a=c(TRUE,TRUE,TRUE,FALSE,FALSE,FALSE,NA,NA,NA),

b=c(TRUE,FALSE,NA,TRUE,FALSE,NA,TRUE,FALSE,NA))

df

#> a b

#> 1 TRUE TRUE

#> 2 TRUE FALSE

#> 3 TRUE NA

#> 4 FALSE TRUE

#> 5 FALSE FALSE

#> 6 FALSE NA

#> 7 NA TRUE

#> 8 NA FALSE

#> 9 NA NA

Normally, when you compare two vectors or factors containing NA values, the vector of results will have NAs where either of the original items was NA. Depending on your purposes, this may or not be desirable.

df$a == df$b

#> [1] TRUE FALSE NA FALSE TRUE NA NA NA NA

# The same comparison, but presented as another column in the data frame:

data.frame(df, isSame = (df$a==df$b))

#> a b isSame

#> 1 TRUE TRUE TRUE

#> 2 TRUE FALSE FALSE

#> 3 TRUE NA NA

#> 4 FALSE TRUE FALSE

#> 5 FALSE FALSE TRUE

#> 6 FALSE NA NA

#> 7 NA TRUE NA

#> 8 NA FALSE NA

#> 9 NA NA NA

A function for comparing with NA’s

This comparison function will essentially treat NA’s as just another value. If an item in both vectors is NA, then it reports TRUE for that item; if the item is NA in just one vector, it reports FALSE; all other comparisons (between non-NA items) behaves the same.

# This function returns TRUE wherever elements are the same, including NA's,

# and FALSE everywhere else.

compareNA <- function(v1,v2) {

same <- (v1 == v2) | (is.na(v1) & is.na(v2))

same[is.na(same)] <- FALSE

return(same)

}

Examples of the function in use

Comparing boolean vectors:

compareNA(df$a, df$b)

#> [1] TRUE FALSE FALSE FALSE TRUE FALSE FALSE FALSE TRUE

# Same comparison, presented as another column

data.frame(df, isSame = compareNA(df$a,df$b))

#> a b isSame

#> 1 TRUE TRUE TRUE

#> 2 TRUE FALSE FALSE

#> 3 TRUE NA FALSE

#> 4 FALSE TRUE FALSE

#> 5 FALSE FALSE TRUE

#> 6 FALSE NA FALSE

#> 7 NA TRUE FALSE

#> 8 NA FALSE FALSE

#> 9 NA NA TRUE

It also works with factors, even if the levels of the factors are in different orders:

# Create sample data frame with factors.

df1 <- data.frame(a = factor(c('x','x','x','y','y','y', NA, NA, NA)),

b = factor(c('x','y', NA,'x','y', NA,'x','y', NA)))

# Do the comparison

data.frame(df1, isSame = compareNA(df1$a, df1$b))

#> a b isSame

#> 1 x x TRUE

#> 2 x y FALSE

#> 3 x <NA> FALSE

#> 4 y x FALSE

#> 5 y y TRUE

#> 6 y <NA> FALSE

#> 7 <NA> x FALSE

#> 8 <NA> y FALSE

#> 9 <NA> <NA> TRUE

# It still works if the factor levels are arranged in a different order

df1$b <- factor(df1$b, levels=c('y','x'))

data.frame(df1, isSame = compareNA(df1$a, df1$b))

#> a b isSame

#> 1 x x TRUE

#> 2 x y FALSE

#> 3 x <NA> FALSE

#> 4 y x FALSE

#> 5 y y TRUE

#> 6 y <NA> FALSE

#> 7 <NA> x FALSE

#> 8 <NA> y FALSE

#> 9 <NA> <NA> TRUE

Recoding data

Problem

You want to recode data or calculate new data columns from existing ones.

Solution

The examples below will use this data:

data <- read.table(header=T, text='

subject sex control cond1 cond2

1 M 7.9 12.3 10.7

2 F 6.3 10.6 11.1

3 F 9.5 13.1 13.8

4 M 11.5 13.4 12.9

')

Recoding a categorical variable

The easiest way is to use revalue() or mapvalues() from the plyr package.

This will code M as 1 and F as 2, and put it in a new column.

Note that these functions preserves the type: if the input is a factor, the output will be a factor; and if the input is a character vector, the output will be a character vector.

library(plyr)

# The following two lines are equivalent:

data$scode <- revalue(data$sex, c("M"="1", "F"="2"))

data$scode <- mapvalues(data$sex, from = c("M", "F"), to = c("1", "2"))

data

#> subject sex control cond1 cond2 scode

#> 1 1 M 7.9 12.3 10.7 1

#> 2 2 F 6.3 10.6 11.1 2

#> 3 3 F 9.5 13.1 13.8 2

#> 4 4 M 11.5 13.4 12.9 1

# data$sex is a factor, so data$scode is also a factor

See ../Mapping vector values and ../Renaming levels of a factor for more information about these functions.

If you don’t want to rely on plyr, you can do the following with R’s built-in functions:

data$scode[data$sex=="M"] <- "1"

data$scode[data$sex=="F"] <- "2"

# Convert the column to a factor

data$scode <- factor(data$scode)

data

#> subject sex control cond1 cond2 scode

#> 1 1 M 7.9 12.3 10.7 1

#> 2 2 F 6.3 10.6 11.1 2

#> 3 3 F 9.5 13.1 13.8 2

#> 4 4 M 11.5 13.4 12.9 1

Another way to do it is to use the match function:

oldvalues <- c("M", "F")

newvalues <- factor(c("g1","g2")) # Make this a factor

data$scode <- newvalues[ match(data$sex, oldvalues) ]

data

#> subject sex control cond1 cond2 scode

#> 1 1 M 7.9 12.3 10.7 g1

#> 2 2 F 6.3 10.6 11.1 g2

#> 3 3 F 9.5 13.1 13.8 g2

#> 4 4 M 11.5 13.4 12.9 g1

Recoding a continuous variable into categorical variable

Mark those whose control measurement is <7 as “low”, and those with >=7 as “high”:

data$category[data$control< 7] <- "low"

data$category[data$control>=7] <- "high"

# Convert the column to a factor

data$category <- factor(data$category)

data

#> subject sex control cond1 cond2 scode category

#> 1 1 M 7.9 12.3 10.7 g1 high

#> 2 2 F 6.3 10.6 11.1 g2 low

#> 3 3 F 9.5 13.1 13.8 g2 high

#> 4 4 M 11.5 13.4 12.9 g1 high

With the cut function, you specify boundaries and the resulting values:

data$category <- cut(data$control,

breaks=c(-Inf, 7, 9, Inf),

labels=c("low","medium","high"))

data

#> subject sex control cond1 cond2 scode category

#> 1 1 M 7.9 12.3 10.7 g1 medium

#> 2 2 F 6.3 10.6 11.1 g2 low

#> 3 3 F 9.5 13.1 13.8 g2 high

#> 4 4 M 11.5 13.4 12.9 g1 high

By default, the ranges are open on the left, and closed on the right, as in (7,9]. To set it so that ranges are closed on the left and open on the right, like [7,9), use right=FALSE.

Calculating a new continuous variable

Suppose you want to add a new column with the sum of the three measurements.

data$total <- data$control + data$cond1 + data$cond2

data

#> subject sex control cond1 cond2 scode category total

#> 1 1 M 7.9 12.3 10.7 g1 medium 30.9

#> 2 2 F 6.3 10.6 11.1 g2 low 28.0

#> 3 3 F 9.5 13.1 13.8 g2 high 36.4

#> 4 4 M 11.5 13.4 12.9 g1 high 37.8

Mapping vector values

Problem

You want to change all instances of value x to value y in a vector.

Solution

# Create some example data

str <- c("alpha", "beta", "gamma")

num <- c(1, 2, 3)

The easiest way is to use revalue() or mapvalues() from the plyr package:

library(plyr)

revalue(str, c("beta"="two", "gamma"="three"))

#> [1] "alpha" "two" "three"

mapvalues(str, from = c("beta", "gamma"), to = c("two", "three"))

#> [1] "alpha" "two" "three"

# For numeric vectors, revalue() won't work, since it uses a named vector, and

# the names are always strings, not numbers. mapvalues() will work, though:

mapvalues(num, from = c(2, 3), to = c(5, 6))

#> [1] 1 5 6

If you don’t want to rely on plyr, you can do the following with R’s built-in functions.

Note that these methods will modify the vectors directly; that is, you don’t have to save the result back into the variable.

# Rename by name: change "beta" to "two"

str[str=="beta"] <- "two"

str

#> [1] "alpha" "two" "gamma"

num[num==2] <- 5

num

#> [1] 1 5 3

It’s also possible to use R’s string search-and-replace functions to remap values in character vectors.

Note that the ^ and $ surrounding alpha are there to ensure that the entire string matches.

Without them, if there were a value named alphabet, it would also match, and the replacement would be onebet.

str <- c("alpha", "beta", "gamma")

sub("^alpha$", "one", str)

#> [1] "one" "beta" "gamma"

# Across all columns, replace all instances of "a" with "X"

gsub("a", "X", str)

#> [1] "XlphX" "betX" "gXmmX"

# gsub() replaces all instances of the pattern in each element

# sub() replaces only the first instance in each element

See also:

Changing the name of factor levels works much the same. See ../Renaming levels of a factor for more information.

Renaming levels of a factor

Problem

You want to rename the levels in a factor.

Solution

# A sample factor to work with.

x <- factor(c("alpha","beta","gamma","alpha","beta"))

x

#> [1] alpha beta gamma alpha beta

#> Levels: alpha beta gamma

levels(x)

#> [1] "alpha" "beta" "gamma"

The easiest way is to use revalue() or mapvalues() from the plyr package:

library(plyr)

revalue(x, c("beta"="two", "gamma"="three"))

#> [1] alpha two three alpha two

#> Levels: alpha two three

mapvalues(x, from = c("beta", "gamma"), to = c("two", "three"))

#> [1] alpha two three alpha two

#> Levels: alpha two three

If you don’t want to rely on plyr, you can do the following with R’s built-in functions.

Note that these methods will modify x directly; that is, you don’t have to save the result back into x.

# Rename by name: change "beta" to "two"

levels(x)[levels(x)=="beta"] <- "two"

# You can also rename by position, but this is a bit dangerous if your data

# can change in the future. If there is a change in the number or positions of

# factor levels, then this can result in wrong data.

# Rename by index in levels list: change third item, "gamma", to "three".

levels(x)[3] <- "three"

x

#> [1] alpha two three alpha two

#> Levels: alpha two three

# Rename all levels

levels(x) <- c("one","two","three")

x

#> [1] one two three one two

#> Levels: one two three

It’s possible to rename factor levels by name (without plyr), but keep in mind that this works only if ALL levels are present in the list; if any are not in the list, they will be replaced with NA.

# Rename all levels, by name

x <- factor(c("alpha","beta","gamma","alpha","beta"))

levels(x) <- list(A="alpha", B="beta", C="gamma")

x

#> [1] A B C A B

#> Levels: A B C

It’s also possible to use R’s string search-and-replace functions to rename factor levels. Note that the ^ and $ surrounding alpha are there to ensure that the entire string matches. Without them, if there were a level named alphabet, it would also match, and the replacement would be onebet.

# A sample factor to work with.

x <- factor(c("alpha","beta","gamma","alpha","beta"))

x

#> [1] alpha beta gamma alpha beta

#> Levels: alpha beta gamma

levels(x) <- sub("^alpha$", "one", levels(x))

x

#> [1] one beta gamma one beta

#> Levels: one beta gamma

# Across all columns, replace all instances of "a" with "X"

levels(x) <- gsub("a", "X", levels(x))

x

#> [1] one betX gXmmX one betX

#> Levels: one betX gXmmX

# gsub() replaces all instances of the pattern in each factor level.

# sub() replaces only the first instance in each factor level.

See also:

Mapping values in a vector to new values works much the same. See ../Mapping vector values for more information.

Re-computing the levels of factor

Problem

You want to do re-compute the levels of a factor. This is useful when a factor contains levels that aren’t actually present in the data. This can happen during data import, or when you remove some rows.

Solution

For a single factor object:

# Create a factor with an extra level (gamma)

x <- factor(c("alpha","beta","alpha"), levels=c("alpha","beta","gamma"))

x

#> [1] alpha beta alpha

#> Levels: alpha beta gamma

# Remove the extra level

x <- factor(x)

x

#> [1] alpha beta alpha

#> Levels: alpha beta

After importing data, you may have a data frame with a mix of factors and other kinds vectors, and want to re-compute the levels of all the factors. You can use the droplevels() function to do this.

# Create a data frame with some factors (with extra levels)

df <- data.frame(

x = factor(c("alpha","beta","alpha"), levels=c("alpha","beta","gamma")),

y = c(5,8,2),

z = factor(c("red","green","green"), levels=c("red","green","blue"))

)

# Display the factors (with extra levels)

df$x

#> [1] alpha beta alpha

#> Levels: alpha beta gamma

df$z

#> [1] red green green

#> Levels: red green blue

# Drop the extra levels

df <- droplevels(df)

# Show the factors again, now without extra levels

df$x

#> [1] alpha beta alpha

#> Levels: alpha beta

df$z

#> [1] red green green

#> Levels: red green

See also:

To re-compute the levels of all the factor columns in a data frame, see ../Re-computing_the_levels_of_all_factor_columns_in_a_data_frame.

Changing the order of levels of a factor

Problem

You want to change the order in which the levels of a factor appear.

Solution

Factors in R come in two varieties: ordered and unordered, e.g., {small, medium, large} and {pen, brush, pencil}. For most analyses, it will not matter whether a factor is ordered or unordered. If the factor is ordered, then the specific order of the levels matters (small < medium < large). If the factor is unordered, then the levels will still appear in some order, but the specific order of the levels matters only for convenience (pen, pencil, brush) – it will determine, for example, how output will be printed, or the arrangement of items on a graph.

One way to change the level order is to use factor() on the factor and specify the order directly. In this example, the function ordered() could be used instead of factor().

Here’s the sample data:

# Create a factor with the wrong order of levels

sizes <- factor(c("small", "large", "large", "small", "medium"))

sizes

#> [1] small large large small medium

#> Levels: large medium small

The levels can be specified explicitly:

sizes <- factor(sizes, levels = c("small", "medium", "large"))

sizes

#> [1] small large large small medium

#> Levels: small medium large

We can do the same with an ordered factor:

sizes <- ordered(c("small", "large", "large", "small", "medium"))

sizes <- ordered(sizes, levels = c("small", "medium", "large"))

sizes

#> [1] small large large small medium

#> Levels: small < medium < large

Another way to change the order is to use relevel() to make a particular level first in the list. (This will not work for ordered factors.)

# Create a factor with the wrong order of levels

sizes <- factor(c("small", "large", "large", "small", "medium"))

sizes

#> [1] small large large small medium

#> Levels: large medium small

# Make medium first

sizes <- relevel(sizes, "medium")

sizes

#> [1] small large large small medium

#> Levels: medium large small

# Make small first

sizes <- relevel(sizes, "small")

sizes

#> [1] small large large small medium

#> Levels: small medium large

You can also specify the proper order when the factor is created.

sizes <- factor(c("small", "large", "large", "small", "medium"),

levels = c("small", "medium", "large"))

sizes

#> [1] small large large small medium

#> Levels: small medium large

To reverse the order of levels in a factor:

# Create a factor with the wrong order of levels

sizes <- factor(c("small", "large", "large", "small", "medium"))

sizes

#> [1] small large large small medium

#> Levels: large medium small

sizes <- factor(sizes, levels=rev(levels(sizes)))

sizes

#> [1] small large large small medium

#> Levels: small medium large

Renaming columns in a data frame

Problem

You want to rename the columns in a data frame.

Solution

Start with a sample data frame with three columns:

d <- data.frame(alpha=1:3, beta=4:6, gamma=7:9)

d

#> alpha beta gamma

#> 1 1 4 7

#> 2 2 5 8

#> 3 3 6 9

names(d)

#> [1] "alpha" "beta" "gamma"

The simplest way is to use rename() from the plyr package:

library(plyr)

rename(d, c("beta"="two", "gamma"="three"))

#> alpha two three

#> 1 1 4 7

#> 2 2 5 8

#> 3 3 6 9

If you don’t want to rely on plyr, you can do the following with R’s built-in functions. Note that these modify d directly; that is, you don’t have to save the result back into d.

# Rename column by name: change "beta" to "two"

names(d)[names(d)=="beta"] <- "two"

d

#> alpha two gamma

#> 1 1 4 7

#> 2 2 5 8

#> 3 3 6 9

# You can also rename by position, but this is a bit dangerous if your data

# can change in the future. If there is a change in the number or positions of

# columns, then this can result in wrong data.

# Rename by index in names vector: change third item, "gamma", to "three"

names(d)[3] <- "three"

d

#> alpha two three

#> 1 1 4 7

#> 2 2 5 8

#> 3 3 6 9

It’s also possible to use R’s string search-and-replace functions to rename columns. Note that the ^ and $ surrounding alpha are there to ensure that the entire string matches. Without them, if there were a column named alphabet, it would also match, and the replacement would be onebet.

names(d) <- sub("^alpha$", "one", names(d))

d

#> one two three

#> 1 1 4 7

#> 2 2 5 8

#> 3 3 6 9

# Across all columns, replace all instances of "t" with "X"

names(d) <- gsub("t", "X", names(d))

d

#> one Xwo Xhree

#> 1 1 4 7

#> 2 2 5 8

#> 3 3 6 9

# gsub() replaces all instances of the pattern in each column name.

# sub() replaces only the first instance in each column name.

Adding and removing columns from a data frame

Problem

You want to add or remove columns from a data frame.

Solution

There are many different ways of adding and removing columns from a data frame.

data <- read.table(header=TRUE, text='

id weight

1 20

2 27

3 24

')

# Ways to add a column

data$size <- c("small", "large", "medium")

data[["size"]] <- c("small", "large", "medium")

data[,"size"] <- c("small", "large", "medium")

data$size <- 0 # Use the same value (0) for all rows

# Ways to remove the column

data$size <- NULL

data[["size"]] <- NULL

data[,"size"] <- NULL

data[[3]] <- NULL

data[,3] <- NULL

data <- subset(data, select=-size)

Reordering the columns in a data frame

Problem

You want to do reorder the columns in a data frame.

Solution

# A sample data frame

data <- read.table(header=TRUE, text='

id weight size

1 20 small

2 27 large

3 24 medium

')

# Reorder by column number

data[c(1,3,2)]

#> id size weight

#> 1 1 small 20

#> 2 2 large 27

#> 3 3 medium 24

# To actually change `data`, you need to save it back into `data`:

# data <- data[c(1,3,2)]

# Reorder by column name

data[c("size", "id", "weight")]

#> size id weight

#> 1 small 1 20

#> 2 large 2 27

#> 3 medium 3 24

The above examples index into the data frame by treating it as a list (a data frame is essentially a list of vectors). You can also use matrix-style indexing, as in data[row, col], where row is left blank.

data[, c(1,3,2)]

#> id size weight

#> 1 1 small 20

#> 2 2 large 27

#> 3 3 medium 24

The drawback to matrix indexing is that it gives different results when you specify just one column. In these cases, the returned object is a vector, not a data frame. Because the returned data type isn’t always consistent with matrix indexing, it’s generally safer to use list-style indexing, or the drop=FALSE option:

# List-style indexing of one column

data[2]

#> weight

#> 1 20

#> 2 27

#> 3 24

# Matrix-style indexing of one column - drops dimension to become a vector

data[,2]

#> [1] 20 27 24

# Matrix-style indexing with drop=FALSE - preserves dimension to remain data frame

data[, 2, drop=FALSE]

#> weight

#> 1 20

#> 2 27

#> 3 24

Merging data frames

Problem

You want to merge two data frames on a given column from each (like a join in SQL).

Solution

# Make a data frame mapping story numbers to titles

stories <- read.table(header=TRUE, text='

storyid title

1 lions

2 tigers

3 bears

')

# Make another data frame with the data and story numbers (no titles)

data <- read.table(header=TRUE, text='

subject storyid rating

1 1 6.7

1 2 4.5

1 3 3.7

2 2 3.3

2 3 4.1

2 1 5.2

')

# Merge the two data frames

merge(stories, data, "storyid")

#> storyid title subject rating

#> 1 1 lions 1 6.7

#> 2 1 lions 2 5.2

#> 3 2 tigers 1 4.5

#> 4 2 tigers 2 3.3

#> 5 3 bears 1 3.7

#> 6 3 bears 2 4.1

If the two data frames have different names for the columns you want to match on, the names can be specified:

# In this case, the column is named 'id' instead of storyid

stories2 <- read.table(header=TRUE, text='

id title

1 lions

2 tigers

3 bears

')

# Merge on stories2$id and data$storyid.

merge(x=stories2, y=data, by.x="id", by.y="storyid")

#> id title subject rating

#> 1 1 lions 1 6.7

#> 2 1 lions 2 5.2

#> 3 2 tigers 1 4.5

#> 4 2 tigers 2 3.3

#> 5 3 bears 1 3.7

#> 6 3 bears 2 4.1

# Note that the column name is inherited from the first data frame (x=stories2).

It is possible to merge on multiple columns:

# Make up more data

animals <- read.table(header=T, text='

size type name

small cat lynx

big cat tiger

small dog chihuahua

big dog "great dane"

')

observations <- read.table(header=T, text='

number size type

1 big cat

2 small dog

3 small dog

4 big dog

')

merge(observations, animals, c("size","type"))

#> size type number name

#> 1 big cat 1 tiger

#> 2 big dog 4 great dane

#> 3 small dog 2 chihuahua

#> 4 small dog 3 chihuahua

Notes

After merging, it may be useful to change the order of the columns.

Comparing data frames

Problem

You want to do compare two or more data frames and find rows that appear in more than one data frame, or rows that appear only in one data frame.

Solution

An example

Suppose you have the following three data frames, and you want to know whether each row from each data frame appears in at least one of the other data frames.

dfA <- data.frame(Subject=c(1,1,2,2), Response=c("X","X","X","X"))

dfA

#> Subject Response

#> 1 1 X

#> 2 1 X

#> 3 2 X

#> 4 2 X

dfB <- data.frame(Subject=c(1,2,3), Response=c("X","Y","X"))

dfB

#> Subject Response

#> 1 1 X

#> 2 2 Y

#> 3 3 X

dfC <- data.frame(Subject=c(1,2,3), Response=c("Z","Y","Z"))

dfC

#> Subject Response

#> 1 1 Z

#> 2 2 Y

#> 3 3 Z

In dfA, the rows containing (1,X) also appear in dfB, but the rows containing (2,X) do not appear in any of the other data frames. Similarly, dfB contains (1,X) which appears in dfA, and (2,Y), which appears in dfC, but (3,X) does not appear in any other data frame.

You might wish to mark the rows which are duplicated in another data frame, or which are unique to each data frame.

Joining the data frames

To proceed, first join the three data frames with a column identifying which source each row came from. It’s called Coder here because this could be data coded by three different people. In this case, you might wish to find where the coders agreed, or where they disagreed.

dfA$Coder <- "A"

dfB$Coder <- "B"

dfC$Coder <- "C"

df <- rbind(dfA, dfB, dfC) # Stick them together

df <- df[,c("Coder", "Subject", "Response")] # Reorder the columns to look nice

df

#> Coder Subject Response

#> 1 A 1 X

#> 2 A 1 X

#> 3 A 2 X

#> 4 A 2 X

#> 5 B 1 X

#> 6 B 2 Y

#> 7 B 3 X

#> 8 C 1 Z

#> 9 C 2 Y

#> 10 C 3 Z

If your data starts out in this format, then there’s obviously no need to join it together.

Finding duplicated rows

Using the function dupsBetweenGroups (defined below), we can find which rows are duplicated between different groups:

# Find the rows which have duplicates in a different group.

dupRows <- dupsBetweenGroups(df, "Coder")

# Print it alongside the data frame

cbind(df, dup=dupRows)

#> Coder Subject Response dup

#> 1 A 1 X TRUE

#> 2 A 1 X TRUE

#> 3 A 2 X FALSE

#> 4 A 2 X FALSE

#> 5 B 1 X TRUE

#> 6 B 2 Y TRUE

#> 7 B 3 X FALSE

#> 8 C 1 Z FALSE

#> 9 C 2 Y TRUE

#> 10 C 3 Z FALSE

Note that this does not mark duplicated rows within a group. With Coder=A, there are two rows with Subject=1 and Response=X, but these are not marked as duplicates.

Finding unique rows

It’s also possible to find the rows that are unique within each group:

cbind(df, unique=!dupRows)

#> Coder Subject Response unique

#> 1 A 1 X FALSE

#> 2 A 1 X FALSE

#> 3 A 2 X TRUE

#> 4 A 2 X TRUE

#> 5 B 1 X FALSE

#> 6 B 2 Y FALSE

#> 7 B 3 X TRUE

#> 8 C 1 Z TRUE

#> 9 C 2 Y FALSE

#> 10 C 3 Z TRUE

Splitting apart the data frame

If you wish to split the joined data frame into the three original data frames

# Store the results in df

dfDup <- cbind(df, dup=dupRows)

dfA <- subset(dfDup, Coder=="A", select=-Coder)

dfA

#> Subject Response dup

#> 1 1 X TRUE

#> 2 1 X TRUE

#> 3 2 X FALSE

#> 4 2 X FALSE

dfB <- subset(dfDup, Coder=="B", select=-Coder)

dfB

#> Subject Response dup

#> 5 1 X TRUE

#> 6 2 Y TRUE

#> 7 3 X FALSE

dfC <- subset(dfDup, Coder=="C", select=-Coder)

dfC

#> Subject Response dup

#> 8 1 Z FALSE

#> 9 2 Y TRUE

#> 10 3 Z FALSE

Ignoring columns

It is also possible to ignore one or more columns, by removing that column from the data frame that is passed to the function. The results can be joined to the original complete data frame if desired.

# Ignore the Subject column -- only use Response

dfNoSub <- subset(df, select=-Subject)

dfNoSub

#> Coder Response

#> 1 A X

#> 2 A X

#> 3 A X

#> 4 A X

#> 5 B X

#> 6 B Y

#> 7 B X

#> 8 C Z

#> 9 C Y

#> 10 C Z

# Check for duplicates

dupRows <- dupsBetweenGroups(dfNoSub, "Coder")

# Join the result to the original data frame

cbind(df, dup=dupRows)

#> Coder Subject Response dup

#> 1 A 1 X TRUE

#> 2 A 1 X TRUE

#> 3 A 2 X TRUE

#> 4 A 2 X TRUE

#> 5 B 1 X TRUE

#> 6 B 2 Y TRUE

#> 7 B 3 X TRUE

#> 8 C 1 Z FALSE

#> 9 C 2 Y TRUE

#> 10 C 3 Z FALSE

dupsBetweenGroups function

This is the function that does all the work:

dupsBetweenGroups <- function (df, idcol) {

# df: the data frame

# idcol: the column which identifies the group each row belongs to

# Get the data columns to use for finding matches

datacols <- setdiff(names(df), idcol)

# Sort by idcol, then datacols. Save order so we can undo the sorting later.

sortorder <- do.call(order, df)

df <- df[sortorder,]

# Find duplicates within each id group (first copy not marked)

dupWithin <- duplicated(df)

# With duplicates within each group filtered out, find duplicates between groups.

# Need to scan up and down with duplicated() because first copy is not marked.

dupBetween = rep(NA, nrow(df))

dupBetween[!dupWithin] <- duplicated(df[!dupWithin,datacols])

dupBetween[!dupWithin] <- duplicated(df[!dupWithin,datacols], fromLast=TRUE) | dupBetween[!dupWithin]

# ============= Replace NA's with previous non-NA value ==============

# This is why we sorted earlier - it was necessary to do this part efficiently

# Get indexes of non-NA's

goodIdx <- !is.na(dupBetween)

# These are the non-NA values from x only

# Add a leading NA for later use when we index into this vector

goodVals <- c(NA, dupBetween[goodIdx])

# Fill the indices of the output vector with the indices pulled from

# these offsets of goodVals. Add 1 to avoid indexing to zero.

fillIdx <- cumsum(goodIdx)+1

# The original vector, now with gaps filled

dupBetween <- goodVals[fillIdx]

# Undo the original sort

dupBetween[sortorder] <- dupBetween

# Return the vector of which entries are duplicated across groups

return(dupBetween)

}

Re-computing the levels of all factor columns in a data frame

Problem

You want to re-compute factor levels of all factor columns in a data frame.

Solution

Sometimes after reading in data and cleaning it, you will end up with factor columns that have levels that should no longer be there.

For example, d below has one blank row. When it’s read in, the factor columns have a level "", which shouldn’t be part of the data.

d <- read.csv(header = TRUE, text='

x,y,value

a,one,1

,,5

b,two,4

c,three,10

')

d

#> x y value

#> 1 a one 1

#> 2 5

#> 3 b two 4

#> 4 c three 10

str(d)

#> 'data.frame': 4 obs. of 3 variables:

#> $ x : Factor w/ 4 levels "","a","b","c": 2 1 3 4

#> $ y : Factor w/ 4 levels "","one","three",..: 2 1 4 3

#> $ value: int 1 5 4 10

Even after removing the empty row, the factors still have the blank string "" as a level:

# Remove second row

d <- d[-2,]

d

#> x y value

#> 1 a one 1

#> 3 b two 4

#> 4 c three 10

str(d)

#> 'data.frame': 3 obs. of 3 variables:

#> $ x : Factor w/ 4 levels "","a","b","c": 2 3 4

#> $ y : Factor w/ 4 levels "","one","three",..: 2 4 3

#> $ value: int 1 4 10

With droplevels

The simplest way is to use the droplevels() function:

d1 <- droplevels(d)

str(d1)

#> 'data.frame': 3 obs. of 3 variables:

#> $ x : Factor w/ 3 levels "a","b","c": 1 2 3

#> $ y : Factor w/ 3 levels "one","three",..: 1 3 2

#> $ value: int 1 4 10

With vapply and lapply

To re-compute the levels for all factor columns, we can use vapply() with is.factor() to find out which of columns are factors, and then use that information with lapply to apply the factor() function to those columns.

# Find which columns are factors

factor_cols <- vapply(d, is.factor, logical(1))

# Apply the factor() function to those columns, and assign then back into d

d[factor_cols] <- lapply(d[factor_cols], factor)

str(d)

#> 'data.frame': 3 obs. of 3 variables:

#> $ x : Factor w/ 3 levels "a","b","c": 1 2 3

#> $ y : Factor w/ 3 levels "one","three",..: 1 3 2

#> $ value: int 1 4 10

See also:

For information about re-computing the levels of a factor, see ../Re-computing_the_levels_of_factor.

Converting data between wide and long format

Problem

You want to do convert data from a wide format to a long format.

Many functions in R expect data to be in a long format rather than a wide format. Programs like SPSS, however, often use wide-formatted data.

Solution

There are two sets of methods that are explained below:

gather() and spread() from the tidyr package. This is a newer interface to the reshape2 package.melt() and dcast() from the reshape2 package.

There are a number of other methods which aren’t covered here, since they are not as easy to use:

- The

reshape() function, which is confusingly not part of the reshape2 package; it is part of the base install of R.

stack() and unstack()

Sample data

These data frames hold the same data, but in wide and long formats. They will each be converted to the other format below.

olddata_wide <- read.table(header=TRUE, text='

subject sex control cond1 cond2

1 M 7.9 12.3 10.7

2 F 6.3 10.6 11.1

3 F 9.5 13.1 13.8

4 M 11.5 13.4 12.9

')

# Make sure the subject column is a factor

olddata_wide$subject <- factor(olddata_wide$subject)

olddata_long <- read.table(header=TRUE, text='

subject sex condition measurement

1 M control 7.9

1 M cond1 12.3

1 M cond2 10.7